Abstract

Typically, a Reinforcement Learning (RL) algorithm focuses on learning a single deployable policy as the end product. Depending on the initialization method and random seed, learning a single policy can lead to convergence to different local optima across runs, especially when the algorithm is sensitive to hyper-parameter tuning. Motivated by the capability of Generative Adversarial Networks (GANs) to learn complex data manifolds, the adversarial training procedure can instead be used to learn a population of good-performing policies. We extend the teacher-student methodology from Knowledge Distillation, as used in typical deep neural network prediction tasks, to the RL paradigm. Instead of learning a single compressed student network, an adversarially-trained generative model (hypernetwork) is learned to output the network weights of a population of good-performing policy networks, representing a school of apprentices. Our proposed framework, named Teacher-Apprentices RL (TARL), is modular and can be used in conjunction with many existing RL algorithms. We illustrate the performance gain and improved robustness by combining TARL with various types of RL algorithms, including direct policy search with the Cross-Entropy Method, Q-learning, Actor-Critic, and policy gradient-based methods.
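
To make the idea of a hypernetwork that outputs policy weights concrete, the following is a minimal NumPy sketch and not the TARL implementation. It assumes a toy generator consisting of a single untrained linear map, a two-layer policy network, and hypothetical sizes (`OBS_DIM`, `HIDDEN`, `N_ACTIONS`, `NOISE_DIM`); the adversarial training against a teacher is omitted entirely. It only shows how sampling several noise vectors yields a population of apprentice policies.

```python
import numpy as np

# Hypothetical sizes chosen for illustration only.
OBS_DIM, HIDDEN, N_ACTIONS = 4, 8, 2
NOISE_DIM = 16

# Parameter count of one policy network (two dense layers with biases).
N_POLICY_PARAMS = OBS_DIM * HIDDEN + HIDDEN + HIDDEN * N_ACTIONS + N_ACTIONS

rng = np.random.default_rng(0)

# Generator ("hypernetwork"): assumed here to be one linear layer, left untrained.
G_W = rng.normal(scale=0.1, size=(NOISE_DIM, N_POLICY_PARAMS))
G_b = np.zeros(N_POLICY_PARAMS)


def generate_policy_weights(n_policies):
    """Map n_policies noise vectors to flat policy weight vectors."""
    z = rng.normal(size=(n_policies, NOISE_DIM))
    return z @ G_W + G_b


def unpack(theta):
    """Split a flat weight vector into the policy's layer parameters."""
    i = 0
    W1 = theta[i:i + OBS_DIM * HIDDEN].reshape(OBS_DIM, HIDDEN)
    i += OBS_DIM * HIDDEN
    b1 = theta[i:i + HIDDEN]
    i += HIDDEN
    W2 = theta[i:i + HIDDEN * N_ACTIONS].reshape(HIDDEN, N_ACTIONS)
    i += HIDDEN * N_ACTIONS
    b2 = theta[i:i + N_ACTIONS]
    return W1, b1, W2, b2


def act(theta, obs):
    """Greedy discrete action of the policy parameterized by theta."""
    W1, b1, W2, b2 = unpack(theta)
    hidden = np.tanh(obs @ W1 + b1)
    logits = hidden @ W2 + b2
    return int(np.argmax(logits))


# Sample a population of five apprentices and query each on one observation.
population = generate_policy_weights(5)
obs = np.ones(OBS_DIM)
actions = [act(theta, obs) for theta in population]
```

In this sketch each row of `population` is one apprentice; in an adversarial setup, the generator parameters would be trained so that the sampled weight vectors resemble those of well-performing policies.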

Notes

Not to be confused with action distribution, which typically refers to the policy itself.

We included a negative sign in the function to convert the original minimization problem into a maximization problem.

Code can be found at https://github.com/maximecb/gym-minigrid.

This is an open source implementation of Roboschool and MuJoCo environments.

The term is also used interchangeably with deployment or inference.

The results align with the findings of the Pybullet-Gym benchmark [61].

References

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., & Bengio, Y. (2014). Generative adversarial nets. In Advances in neural information processing systems 27 (NIPS).

Jin, Y., Zhang, J., Li, M., Tian, Y., & Zhu, H. (2017). Towards the high-quality anime characters generation with generative adversarial networks. In Proceedings of the machine learning for creativity and design workshop at NIPS.

Chen, Y., Shi, F., Christodoulou, A.G., Xie, Y., Zhou, Z., & Li, D. (2018). Efficient and accurate MRI super-resolution using a generative adversarial network and 3D multi-level densely connected network. In International conference on medical image computing and computer-assisted intervention (pp. 91–99). Springer.

Zhou, H., Cai, R., Quan, T., Liu, S., Li, S., Huang, Q., Ertürk, A., & Zeng, S. (2020). 3d high resolution generative deep-learning network for fluorescence microscopy imaging. Optics Letters, 45(7), 1695–1698.

Zhang, S., Wang, L., Chang, C., Liu, C., Zhang, L., & Cui, H. (2020). An image denoising method based on BM4D and GAN in 3D shearlet domain. Mathematical Problems in Engineering, 2020, 1–11.

Li, C., & Wand, M. (2016). Precomputed real-time texture synthesis with Markovian generative adversarial networks. In European conference on computer vision (pp. 702–716). Springer.

Kumar, K., Kumar, R., de Boissiere, T., Gestin, L., Teoh, W. Z., Sotelo, J., de Brébisson, A., Bengio, Y., & Courville, A. C. (2019). Melgan: Generative adversarial networks for conditional waveform synthesis. In Advances in neural information processing systems 32.

Latifi, S., & Torres-Reyes, N. (2019). Audio enhancement and synthesis using generative adversarial networks: A survey. International Journal of Computer Applications, 182(35), 27.

Croce, D., Castellucci, G., & Basili, R. (2020). Gan-bert: Generative adversarial learning for robust text classification with a bunch of labeled examples. In Proceedings of the 58th annual meeting of the association for computational linguistics (pp. 2114–2119).

Hu, Z., Luo, F., Tan, Y., Zeng, W., & Sui, Z. (2019). WSD-GAN: Word sense disambiguation using generative adversarial networks. In Proceedings of the AAAI conference on artificial intelligence (vol. 33, pp. 9943–9944).

Mokhayeri, F., Kamali, K., & Granger, E. (2020). Cross-domain face synthesis using a controllable GAN. In Proceedings of the IEEE/CVF winter conference on applications of computer vision (pp. 252–260).

Spick, R., Demediuk, S., & Alfred Walker, J. (2020). Naive mesh-to-mesh coloured model generation using 3D GANs. In Proceedings of the Australasian computer science week multiconference (pp. 1–6).

Gao, R., Xia, H., Li, J., Liu, D., Chen, S., & Chun, G. (2019). DRCGR: Deep reinforcement learning framework incorporating CNN and GAN-based for interactive recommendation. In 2019 IEEE international conference on data mining (ICDM) (pp. 1048–1053). IEEE.

Tian, Y., Wang, Q., Huang, Z., Li, W., Dai, D., Yang, M., Wang, J., & Fink, O. (2020). Off-policy reinforcement learning for efficient and effective GAN architecture search. In European conference on computer vision (pp. 175–192). Springer.

Wang, Q., Ji, Y., Hao, Y., & Cao, J. (2020). GRL: Knowledge graph completion with GAN-based reinforcement learning. Knowledge-Based Systems, 209, 106421.

Sandfort, V., Yan, K., Pickhardt, P. J., & Summers, R. M. (2019). Data augmentation using generative adversarial networks (cycleGAN) to improve generalizability in CT segmentation tasks. Scientific Reports, 9(1), 1–9.

Hans, A., & Udluft, S. (2011). Ensemble usage for more reliable policy identification in reinforcement learning. In ESANN.

Duell, S., & Udluft, S. (2013). Ensembles for continuous actions in reinforcement learning. In ESANN.

Elliott, D., Santosh, K., & Anderson, C. (2020). Gradient boosting in crowd ensembles for Q-learning using weight sharing. International Journal of Machine Learning and Cybernetics, 11, 2275–2287.

Ha, D., Dai, A. M., & Le, Q. V. (2017). Hypernetworks. In International conference on learning representations (ICLR).

Tang, S. Y., Irissappane, A. A., Oliehoek, F. A., & Zhang, J. (2021). Learning complex policy distribution with CEM guided adversarial hypernetwork. In AAMAS (pp. 1308–1316).

von Oswald, J., Henning, C., Sacramento, J., & Grewe, B. F. (2020). Continual learning with hypernetworks. In International conference on learning representations (ICLR).

Louizos, C., & Welling, M. (2017). Multiplicative normalizing flows for variational bayesian neural networks. In International conference on machine learning (ICML) (pp. 2218–2227).

Pawlowski, N., Rajchl, M., & Glocker, B. (2017). Implicit weight uncertainty in neural networks. In Bayesian deep learning workshop, advances in neural information processing systems (NIPS).

Blundell, C., Cornebise, J., Kavukcuoglu, K., & Wierstra, D. (2015). Weight uncertainty in neural network. In International conference on machine learning (ICML) (pp. 1613–1622).

Pourchot, A., & Sigaud, O. (2018). Cem-rl: Combining evolutionary and gradient-based methods for policy search. arXiv preprint arXiv:1810.01222.

Mannor, S., Rubinstein, R. Y., & Gat, Y. (2003). The cross entropy method for fast policy search. In International conference on machine learning (ICML) (pp. 512–519).

Kalashnikov, D., Irpan, A., Pastor, P., Ibarz, J., Herzog, A., Jang, E., Quillen, D., Holly, E., Kalakrishnan, M., Vanhoucke, V., & Levine, S. (2018). Scalable deep reinforcement learning for vision-based robotic manipulation. In Conference on robot learning (CoRL) (pp. 651–673).

Simmons-Edler, R., Eisner, B., Mitchell, E., Seung, S., & Lee, D. (2019). Q-learning for continuous actions with cross-entropy guided policies. In RL4RealLife workshop, international conference on machine learning (ICML).

Galanti, T., & Wolf, L. (2020). On the modularity of hypernetworks. Advances in Neural Information Processing Systems, 33, 10409–10419.

Zhang, C., Ren, M., & Urtasun, R. (2018). Graph hypernetworks for neural architecture search. In International Conference on Learning Representations.

Brock, A., Lim, T., Ritchie, J., & Weston, N. (2018). Smash: One-shot model architecture search through hypernetworks. In International conference on learning representations.

Navon, A., Shamsian, A., Fetaya, E., & Chechik, G. (2020). Learning the pareto front with hypernetworks. In International conference on learning representations.

Henning, C., von Oswald, J., Sacramento, J., Surace, S. C., Pfister, J. -P., & Grewe, B. F. (2018). Approximating the predictive distribution via adversarially-trained hypernetworks. In Bayesian deep learning workshop, advances in neural information processing systems (NeurIPS).

Skorokhodov, I., Ignatyev, S., & Elhoseiny, M. (2021). Adversarial generation of continuous images. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 10753–10764).

Buciluǎ, C., Caruana, R., & Niculescu-Mizil, A. (2006). Model compression. In Proceedings of the 12th ACM SIGKDD international conference on knowledge discovery and data mining (pp. 535–541).

Hinton, G., Vinyals, O., & Dean, J. (2015). Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531.

Romero, A., Ballas, N., Kahou, S. E., Chassang, A., Gatta, C., & Bengio, Y. (2015). Fitnets: Hints for thin deep nets. In Proc. ICLR (pp. 1–13).

Yim, J., Joo, D., Bae, J., & Kim, J. (2017). A gift from knowledge distillation: Fast optimization, network minimization and transfer learning. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4133–4141).

Lee, S. H., Kim, D. H., & Song, B. C. (2018). Self-supervised knowledge distillation using singular value decomposition. In Proceedings of the European conference on computer vision (ECCV) (pp. 335–350).

Zagoruyko, S., & Komodakis, N. (2017). Paying more attention to attention: Improving the performance of convolutional neural networks via attention transfer. In ICLR.

Kim, J., Park, S., & Kwak, N. (2018). Paraphrasing complex network: Network compression via factor transfer. In Advances in neural information processing systems 31.

Sun, D., Yao, A., Zhou, A., & Zhao, H. (2019). Deeply-supervised knowledge synergy. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 6997–7006).

Tian, Y., Krishnan, D., & Isola, P. (2019). Contrastive representation distillation. In International conference on learning representations.

Zhang, Y., Xiang, T., Hospedales, T. M., & Lu, H. (2018). Deep mutual learning. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4320–4328).

Ho, J., & Ermon, S. (2016). Generative adversarial imitation learning. In Advances in neural information processing systems 29.

Li, Y., Song, J., & Ermon, S. (2017). Infogail: Interpretable imitation learning from visual demonstrations. In Advances in neural information processing systems 30.

Fei, C., Wang, B., Zhuang, Y., Zhang, Z., Hao, J., Zhang, H., Ji, X., & Liu, W. (2020). Triple-gail: A multi-modal imitation learning framework with generative adversarial nets. In Proceedings of the twenty-ninth international joint conference on artificial intelligence (IJCAI-20) (pp. 2929–2935). https://doi.org/10.24963/ijcai.2020/405

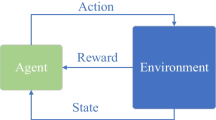

Sutton, R. S., & Barto, A. G. (2018). Reinforcement learning: an introduction. MIT press.

Williams, R. J. (1992). Simple statistical gradient-following algorithms for connectionist reinforcement learning. Machine Learning, 8(3–4), 229–256.

Faury, L., Calauzenes, C., Fercoq, O., & Krichen, S. (2019). Improving evolutionary strategies with generative neural networks. arXiv preprint arXiv:1901.11271.

Schwefel, H.-P. (1981). Numerical optimization of computer models. John Wiley & Sons Inc.

Kurtz, N., & Song, J. (2013). Cross-entropy-based adaptive importance sampling using Gaussian mixture. Structural Safety, 42, 35–44.

Geyer, S., Papaioannou, I., & Straub, D. (2019). Cross entropy-based importance sampling using Gaussian densities revisited. Structural Safety, 76, 15–27.

Deutsch, L. (2018). Generating neural networks with neural networks. arXiv preprint arXiv:1801.01952.

Ukai, K., Matsubara, T., & Uehara, K. (2018). Hypernetwork-based implicit posterior estimation and model averaging of CNN. In Asian conference on machine learning (pp. 176–191).

Roth, K., Lucchi, A., Nowozin, S., & Hofmann, T. (2017). Stabilizing training of generative adversarial networks through regularization. In Advances in neural information processing systems (NIPS) (pp. 2018–2028).

Wiering, M. A., & Van Hasselt, H. (2008). Ensemble algorithms in reinforcement learning. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 38(4), 930–936.

Chevalier-Boisvert, M., Willems, L., & Pal, S. (2018). Minimalistic Gridworld Environment for OpenAI Gym. GitHub.

Ellenberger, B. (2018). Pybullet Gymperium, Open-source implementations of OpenAI Gym MuJoCo environments. GitHub.

Sung, J.-c. (2018). Benchmark results for TD3 and DDPG using the PyBullet reinforcement learning environments. GitHub.

Acknowledgements

Shi Yuan Tang acknowledges support from the Alibaba Group and the Alibaba-NTU Singapore Joint Research Institute.

About this article

Cite this article

Tang, S.Y., Irissappane, A.A., Oliehoek, F.A. et al. Teacher-apprentices RL (TARL): leveraging complex policy distribution through generative adversarial hypernetwork in reinforcement learning. Auton Agent Multi-Agent Syst 37, 25 (2023). https://doi.org/10.1007/s10458-023-09606-9

DOI: https://doi.org/10.1007/s10458-023-09606-9