Abstract

In this paper, we propose a novel overlapping domain decomposition method that can be applied to various problems in variational imaging such as total variation minimization. Most recent domain decomposition methods for total variation minimization adopt the Fenchel–Rockafellar duality, whereas the proposed method is based on the primal formulation. Thus, the proposed method can be applied not only to total variation minimization but also to models with complex dual problems such as higher order models. In the proposed method, an equivalent formulation of the model problem with parallel structure is constructed using a custom overlapping domain decomposition scheme with the notion of essential domains. As a solver for the constructed formulation, we propose a decoupled augmented Lagrangian method that unties the coupling of adjacent subdomains. Convergence analysis of the decoupled augmented Lagrangian method is provided. We present implementation details and numerical examples for various model problems including total variation minimization and higher order models.

References

Aubert, G., Aujol, J.-F.: A variational approach to removing multiplicative noise. SIAM J. Appl. Math. 68(4), 925–946 (2008)

Chambolle, A.: An algorithm for total variation minimization and applications. J. Math. Imaging Vis. 20(1), 89–97 (2004)

Chambolle, A., Lions, P.-L.: Image recovery via total variation minimization and related problems. Numer. Math. 76(2), 167–188 (1997)

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011)

Chan, T.F., Esedoglu, S.: Aspects of total variation regularized L1 function approximation. SIAM J. Appl. Math. 65(5), 1817–1837 (2005)

Chan, T.F., Esedoglu, S., Nikolova, M.: Algorithms for finding global minimizers of image segmentation and denoising models. SIAM J. Appl. Math. 66(5), 1632–1648 (2006)

Chan, T.F., Vese, L.A.: Active contours without edges. IEEE Trans. Image Process. 10(2), 266–277 (2001)

Chan, T.F., Wong, C.-K.: Total variation blind deconvolution. IEEE Trans. Image Process. 7(3), 370–375 (1998)

Chang, H., Tai, X.-C., Wang, L.-L., Yang, D.: Convergence rate of overlapping domain decomposition methods for the Rudin–Osher–Fatemi model based on a dual formulation. SIAM J. Imaging Sci. 8(1), 564–591 (2015)

Dong, Y., Hintermüller, M., Neri, M.: An efficient primal-dual method for L1TV image restoration. SIAM J. Imaging Sci. 2(4), 1168–1189 (2009)

Duan, Y., Chang, H., Tai, X.-C.: Convergent non-overlapping domain decomposition methods for variational image segmentation. J. Sci. Comput. 69(2), 532–555 (2016)

Evans, L.C., Gariepy, R.F.: Measure Theory and Fine Properties of Functions. CRC Press, Florida (1992)

Fornasier, M., Schönlieb, C.-B.: Subspace correction methods for total variation and l1-minimization. SIAM J. Numer. Anal. 47(5), 3397–3428 (2009)

He, B., Yuan, X.: On non-ergodic convergence rate of Douglas–Rachford alternating direction method of multipliers. Numer. Math. 130(3), 567–577 (2015)

Hestenes, M.R.: Multiplier and gradient methods. J. Optim. Theory Appl. 4(5), 303–320 (1969)

Hong, M., Luo, Z.-Q., Razaviyayn, M.: Convergence analysis of alternating direction method of multipliers for a family of nonconvex problems. SIAM J. Optim. 26(1), 337–364 (2016)

Kim, D.: Accelerated proximal point method for maximally monotone operators. arXiv:1905.05149 [math.OC] (2019)

Le, T., Chartrand, R., Asaki, T.J.: A variational approach to reconstructing images corrupted by Poisson noise. J. Math. Imaging Vis. 27(3), 257–263 (2007)

Lee, C.-O., Nam, C.: Primal domain decomposition methods for the total variation minimization, based on dual decomposition. SIAM J. Sci. Comput. 39(2), B403–B423 (2017)

Lee, C.-O., Nam, C., Park, J.: Domain decomposition methods using dual conversion for the total variation minimization with L1 fidelity term. J. Sci. Comput. 78(2), 951–970 (2019)

Lee, C.-O., Park, E.-H.: A dual iterative substructuring method with a penalty term. Numer. Math. 112(1), 89–113 (2009)

Lee, C.-O., Park, E.-H., Park, J.: A finite element approach for the dual Rudin–Osher–Fatemi model and its nonoverlapping domain decomposition methods. SIAM J. Sci. Comput. 41(2), B205–B228 (2019)

Lee, C.-O., Park, J.: A finite element nonoverlapping domain decomposition method with Lagrange multipliers for the dual total variation minimizations. J. Sci. Comput. 81(3), 2331–2355 (2019)

Lysaker, M., Lundervold, A., Tai, X.-C.: Noise removal using fourth-order partial differential equation with applications to medical magnetic resonance images in space and time. IEEE Trans. Image Process. 12(12), 1579–1590 (2003)

Nikolova, M.: A variational approach to remove outliers and impulse noise. J. Math. Imaging Vis. 20(1), 99–120 (2004)

Rockafellar, R.T.: Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 14(5), 877–898 (1976)

Rockafellar, R.T.: Convex Analysis. Princeton University Press, New Jersey (2015)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Physica D 60(1–4), 259–268 (1992)

Shen, J., Chan, T.F.: Mathematical models for local nontexture inpaintings. SIAM J. Appl. Math. 62(3), 1019–1043 (2002)

Tikhonov, A.: Solution of incorrectly formulated problems and the regularization method. Sov. Math. Dokl. 4, 1035–1038 (1963)

Wang, Y., Yin, W., Zeng, J.: Global convergence of ADMM in nonconvex nonsmooth optimization. J. Sci. Comput. 78(1), 29–63 (2019)

Wu, C., Tai, X.-C.: Augmented Lagrangian method, dual methods, and split Bregman iteration for ROF, vectorial TV, and high order models. SIAM J. Imaging Sci. 3(3), 300–339 (2010)

Zhang, X., Chan, T.F.: Wavelet inpainting by nonlocal total variation. Inverse Probl. Imaging 4(1), 191–210 (2010)

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by: Russell Luke

Appendices

Appendix A: Convergence analysis of Algorithm 1

In this appendix, we analyze the convergence behavior of the decoupled augmented Lagrangian method. Throughout this section, we assume that \(\widetilde {E} (\tilde {u})\) given in (2.3) is convex.

The proof of Theorem 3.4 is based on a Lyapunov functional argument, which is broadly used in the analysis of augmented Lagrangian methods [14, 16, 32]. That is, we show that there exists a Lyapunov functional that is bounded below and decreases in each iteration. The following lemma states a widely used property in convex optimization.

Lemma A.1

Let \(f: \mathbb {R}^{n} \rightarrow \bar {\mathbb {R}}\) be a convex function, \(A: \mathbb {R}^{n} \rightarrow \mathbb {R}^{m}\) a linear operator, and \(b \in \mathbb {R}^{m}\). Then, a solution \(x^{*} \in \mathbb {R}^{n}\) of the minimization problem:

is characterized by

Proof

This follows directly from the fact that \(-\alpha A^{*}(Ax^{*} - b) \in \partial f(x^{*})\). □
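A scalar instance gives a quick numerical sanity check of this kind of characterization. The sketch below rests on assumptions that are not taken from the displayed formulas: it reads the minimization problem as \(\min_x f(x) + \frac{\alpha}{2}\|Ax - b\|^2\) (inferred from the inclusion used in the proof), takes \(f = |\cdot|\) and \(A = 1\), and tests the variational inequality \(f(x) - f(x^{*}) + \alpha \langle Ax^{*} - b, A(x - x^{*}) \rangle \geq 0\), which is one standard way of restating that inclusion. The helper names are hypothetical.

```python
import numpy as np

def soft_threshold(b, tau):
    """Minimizer of |x| + (1 / (2 * tau)) * (x - b)**2 over the reals."""
    return np.sign(b) * max(abs(b) - tau, 0.0)

def vi_gap(x, x_star, alpha, b):
    """f(x) - f(x*) + alpha * (A x* - b) * A (x - x*), with f = |.| and A = 1."""
    return abs(x) - abs(x_star) + alpha * (x_star - b) * (x - x_star)

alpha = 2.0
b_values = [-3.0, -0.2, 0.0, 0.4, 1.5]
test_points = np.linspace(-5.0, 5.0, 2001)

# At the true minimizer the gap is nonnegative for every test point.
min_gaps = []
for b in b_values:
    x_star = soft_threshold(b, 1.0 / alpha)
    min_gaps.append(min(vi_gap(x, x_star, alpha, b) for x in test_points))

# A point that is not a minimizer (x = b = 1.5) violates the inequality.
wrong_gap = min(vi_gap(x, 1.5, alpha, 1.5) for x in test_points)
```

Here `min_gaps` stays nonnegative up to rounding, while `wrong_gap` is negative, so the inequality separates the minimizer from a non-minimizer in this toy case.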

We observe that if we choose an initial guess \(\lambda ^{(0)} \in \widetilde {V}^{*}\) such that \(J_{\widetilde {V}^{*}} \lambda ^{(0)} \in (\ker B)^{\bot }\), then we have \(J_{\widetilde {V}^{*}} \lambda ^{(n)} \in (\ker B)^{\bot }\) for all n ≥ 0.

Proposition A.2

In Algorithm 1, we have \(J_{\widetilde {V}^{*}} \lambda ^{(n)} \in (\ker B)^{\bot }\) for all n ≥ 1 if \(J_{\widetilde {V}^{*}} \lambda ^{(0)} \in (\ker B)^{\bot }\).

Proof

Since \(J_{\widetilde {V}^{*}} \left (\lambda ^{(n+1)} - \lambda ^{(n)} \right ) = \eta (I -P_{B} ) \tilde {u}^{(n+1)} \in (\ker B)^{\bot }\) for all n ≥ 0, a simple induction argument yields the conclusion. □
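The invariance can be observed numerically in a finite-dimensional Euclidean setting. The following is a minimal sketch under stated assumptions: the Riesz maps are taken as the identity, \(P_{B}\) is assumed to be the orthogonal projector onto \(\ker B\), the matrix `B` is a random stand-in, and the iterates \(\tilde{u}^{(n+1)}\) are replaced by random vectors, since the argument does not depend on how they are computed.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n = 3, 8
B = rng.standard_normal((m, n))            # hypothetical constraint operator
P_B = np.eye(n) - np.linalg.pinv(B) @ B    # orthogonal projector onto ker B
eta = 0.7

lam = B.T @ rng.standard_normal(m)         # lambda^(0) in range(B^T) = (ker B)^perp
drift = []
for _ in range(50):
    u = rng.standard_normal(n)             # stand-in for u^(n+1)
    lam = lam + eta * (u - P_B @ u)        # lambda-update with increment in (ker B)^perp
    drift.append(np.linalg.norm(P_B @ lam))  # size of the component of lambda in ker B
```

The recorded values in `drift` remain at rounding level, which is the discrete counterpart of \(\lambda ^{(n)}\) staying in \((\ker B)^{\bot }\).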

With Lemma A.1 and Proposition A.2, we readily get the following characterization of \(\tilde {u}^{(n+1)}\) in Algorithm 1.

Lemma A.3

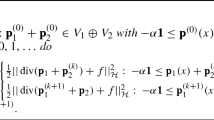

In Algorithm 1, \(\tilde {u}^{(n+1)} \in \widetilde {V}\) satisfies:

for n ≥ 0.

Proof

Take any \(\tilde {u} \in \widetilde {V}\). By (3.6), Lemma A.1, and Proposition A.2, we obtain:

which concludes the proof. □

Let \((\tilde {u}^{*} , \lambda ^{*}) \in \widetilde {V} \times \widetilde {V}^{*}\) be a critical point of (3.9). We define:

It is clear that the value \(d_{n}\) measures the difference between two consecutive iterates \((\tilde {u}^{(n)} , \lambda ^{(n)})\) and \((\tilde {u}^{(n+1)}, \lambda ^{(n+1)})\), while \(e_{n}\) measures the error of the \(n\)th iterate \((\tilde {u}^{(n)} , \lambda ^{(n)})\) with respect to a solution \((\tilde {u}^{*}, \lambda ^{*})\). The following lemma presents the Lyapunov functional argument. We note that the Lyapunov functional used in the proof is motivated by [32].

Lemma A.4

The value \(e_{n}\) defined in (A.1b) is decreasing in each iteration of Algorithm 1. More precisely, we have:

for n ≥ 0, where \(d_{n}\) is given in (A.1a).

Proof

By the definition of \((\tilde {u}^{*} , \lambda ^{*})\), we clearly have \((I - P_{B}) \tilde {u}^{*} = 0\). Furthermore, since:

by Lemma A.1, \(\tilde {u}^{*}\) is characterized by:

Taking \(\tilde {u} = \tilde {u}^{(n+1)}\) in (A.3) yields:

Let \(\bar {u}^{(n)} = \tilde {u}^{(n)} - \tilde {u}^{*}\) and \(\bar {\lambda }^{(n)} = \lambda ^{(n)} - \lambda ^{*}\). Taking \(\tilde {u} = \tilde {u}^{*}\) in Lemma A.3 yields:

Then, by adding (A.4) and (A.5) and using \(P_{B} \tilde {u}^{(n)} = P_{B} \bar {u}^{(n)}\), we have:

That is, we obtain:

Using \(\bar {\lambda }^{(n+1)} = \bar {\lambda }^{(n)} + \eta J_{\widetilde {V}} (I-P_{B} ) \bar {u}^{(n+1)}\), we get:

which yields (A.2). The last inequality is due to (A.6). □

Now, we present the proof of Theorem 3.4.

Proof of Theorem 3.4

As \(\widetilde {E}\) is convex, Lemma A.4 ensures that (A.2) holds. Since \(\left \{e_{n} \right \}\) is bounded, we conclude that \(\left \{ P_{B} {\tilde {u}}^{(n)} \right \}\) and \(\left \{ \lambda ^{(n)} \right \}\) are bounded. We sum (A.2) from n = 0 to N − 1 and let \(N \rightarrow \infty \) to obtain:

which implies that \(P_{B}\left (\tilde {u}^{(n)} - \tilde {u}^{(n+1)}\right ) \rightarrow 0\) and \((I-P_{B}) \tilde {u}^{(n+1)} \rightarrow 0\). Therefore, \(\left \{ \tilde {u}^{(n)} \right \}\) is bounded and we have:

and

By the Bolzano–Weierstrass theorem, there exists a limit point \((\tilde {u}^{(\infty )}, \lambda ^{(\infty )})\) of the sequence \(\left \{ (\tilde {u}^{(n)} , \lambda ^{(n)}) \right \}\). We choose a subsequence \(\{ (\tilde {u}^{(n_{j})} , \lambda ^{(n_{j})}) \}\) of \(\left \{ (\tilde {u}^{(n)} , \lambda ^{(n)}) \right \}\) such that:

By (A.7a), we have:

In the λ-update step with \(n = n_{j} - 1\):

we readily obtain \((I -P_{B}) \tilde {u}^{(\infty )} = 0\) as j tends to \(\infty \). On the other hand, (3.6) with \(n = n_{j} - 1\) is equivalent to:

By the graph-closedness of \(\partial \widetilde {E}\) (see Theorem 24.4 in [27]), we get:

as \(j \rightarrow \infty \). By Proposition A.2, we conclude that:

Therefore, \(\left (\tilde {u}^{(\infty )}, \lambda ^{(\infty )}\right )\) is a critical point of (3.9).

Finally, it remains to prove that the whole sequence \(\left \{ \left (\tilde {u}^{(n)}, \lambda ^{(n)} \right ) \right \}\) converges to the critical point \(\left (\tilde {u}^{(\infty )}, \lambda ^{(\infty )}\right )\). Since the critical point \((\tilde {u}^{*}, \lambda ^{*})\) was arbitrarily chosen, Lemma A.4 is still valid if we set \((\tilde {u}^{*}, \lambda ^{*}) = \left (\tilde {u}^{(\infty )}, \lambda ^{(\infty )}\right )\) in (A.1b). That is, the sequence:

is decreasing. On the other hand, by (A.8), the subsequence \(\{ e_{n_{j}} \}\) tends to 0 as j goes to \(\infty \). Therefore, the whole sequence \(\{ e_{n} \}\) tends to 0 and we deduce that \(\left \{ \left (\tilde {u}^{(n)}, \lambda ^{(n)} \right ) \right \}\) converges to \(\left (\tilde {u}^{(\infty )}, \lambda ^{(\infty )}\right )\). □
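The convergence statement can be illustrated on a toy problem where every step has a closed form. The sketch below is not Algorithm 1 itself: the primal step (3.6) is not reproduced in this appendix, so the code assumes it has the form \(\tilde{u}^{(n+1)} \in \arg\min_{\tilde{u}} \{ \widetilde{E}(\tilde{u}) + \langle \lambda^{(n)}, (I - P_{B})\tilde{u} \rangle + \frac{\eta}{2} \| \tilde{u} - P_{B}\tilde{u}^{(n)} \|^{2} \}\), which is consistent with the λ-update used in the proofs. It further assumes a Euclidean setting with identity Riesz maps, an orthogonal projector \(P_{B}\) onto \(\ker B\), and the stand-in energy \(\widetilde{E}(\tilde{u}) = \frac{1}{2}\|\tilde{u} - f\|^{2}\), whose constrained minimizer is \(P_{B} f\).

```python
import numpy as np

rng = np.random.default_rng(1)
m, n = 4, 10
B = rng.standard_normal((m, n))            # hypothetical constraint operator
P_B = np.eye(n) - np.linalg.pinv(B) @ B    # orthogonal projector onto ker B
f = rng.standard_normal(n)                 # data of the stand-in quadratic energy
eta = 1.0

u = np.zeros(n)
lam = np.zeros(n)                          # zero lies in (ker B)^perp
history = []
for _ in range(200):
    # Assumed primal step: closed-form minimizer of
    # 0.5*|u - f|^2 + <lam, (I - P_B) u> + 0.5*eta*|u - P_B u_old|^2.
    u = (f - (lam - P_B @ lam) + eta * (P_B @ u)) / (1.0 + eta)
    # Multiplier step: lam <- lam + eta * (I - P_B) u.
    lam = lam + eta * (u - P_B @ u)
    history.append(np.linalg.norm(u - P_B @ f))
```

In this toy case the primal iterate approaches \(P_{B} f\), the constraint residual \((I - P_{B})\tilde{u}^{(n)}\) vanishes, and the multiplier approaches \((I - P_{B}) f\), in line with convergence of the whole sequence to a critical point.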

Remark A.5

In practice, local problems (3.8) are solved by iterative algorithms and an inexact solution \(\tilde {u}^{(n+1)}\) to (3.6) is obtained in each iteration of Algorithm 1. That is, for n ≥ 0, we have:

for some \(\epsilon _{n} > 0\), where:

One may refer to, e.g., [27] for the definition of the \(\epsilon \)-subgradient \(\partial _{\epsilon }\). In this case, the conclusion of Lemma A.3 is replaced by:

for all n ≥ 0. By slightly modifying the above proofs using (A.9), one can prove without major difficulty that the conclusion of Theorem 3.4 holds under the assumption:

The above summability condition of errors is popular in the field of mathematical optimization; see, e.g., [26].

To prove Theorem 3.5, we first show that \(d_{n}\) is decreasing.

Lemma A.6

The value \(d_{n}\) defined in (A.1a) is decreasing in each iteration of Algorithm 1.

Proof

Let n ≥ 1. Taking \(\tilde {u} = \tilde {u}^{(n)} \) in Lemma A.3 yields:

Also, substituting n by n − 1 and taking \(\tilde {u} = \tilde {u}^{(n+1)}\) in Lemma A.3, we have:

Summation of (A.10) and (A.11) yields:

where we used \(\lambda ^{(n)} = \lambda ^{(n-1)} + \eta J_{\widetilde {V}} (I-P_{B}) \tilde {u}^{(n)}\) in the equality. Therefore, we get:

On the other hand, direct computation yields:

which concludes the proof. The last inequality is due to (A.12). □

Combining Lemmas A.4 and A.6, we obtain the proof of Theorem 3.5, which closely follows [14].

Proof of Theorem 3.5

Invoking Lemma A.6 and (A.2) yields:

This completes the proof. □
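The counting argument behind rates of this type can be checked on synthetic sequences. The sketch below is an illustration under assumptions, not a restatement of Theorem 3.5: it supposes that (A.2) has the form \(e_{n+1} \leq e_{n} - d_{n}\) with \(e_{n} \geq 0\), and that \(d_{n}\) is nonincreasing as in Lemma A.6. Under these hypotheses, \((n+1) d_{n} \leq \sum_{k=0}^{n} d_{k} \leq e_{0}\). The sequences `d` and `e` are made up for the test.

```python
import numpy as np

N = 1000
d = 1.0 / (np.arange(N) + 1.0) ** 1.5   # hypothetical nonincreasing, summable d_n

# Build e_n backwards so that e_{k+1} = e_k - d_k and e_n stays nonnegative.
e = np.empty(N + 1)
e[N] = 0.3                              # arbitrary nonnegative terminal value
for k in range(N - 1, -1, -1):
    e[k] = e[k + 1] + d[k]

indices = np.arange(N)
bound = e[0] / (indices + 1.0)          # candidate O(1/n) bound e_0 / (n + 1)
```

Every entry of `d` lies below the corresponding entry of `bound`, which is the sublinear decay that the two monotonicity lemmas combine to give in this simplified setting.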

Appendix B: A remark on the continuous setting

As noted in Section 4, the proposed domain decomposition framework reduces to the one proposed in [11] when it is applied to the convex Chan–Vese model [5]. However, while the authors of [11] introduced their method as a nonoverlapping DDM, we classified it as an overlapping one. In this appendix, we show that the proposed method belongs to the class of overlapping DDMs in the continuous setting.

For simplicity, we consider the case \(\mathcal {N} = 2\) only. Let \(\left \{ {\varOmega }_{s} \right \}_{s=1}^{2}\) be a nonoverlapping domain decomposition of \(\varOmega \) with the interface \(\Gamma = \partial {\varOmega }_{1} \cap \partial {\varOmega }_{2}\). Recall the convex Chan–Vese model (4.2):

where \(g = (f - c_{1})^{2} - (f - c_{2})^{2}\) and \(TV_{\varOmega }(u)\) is defined as:

In Section 3.1 of [11], it was claimed that a solution of (B.1) can be constructed by \(u = u_{1} \oplus u_{2}\), where \((u_{1}, u_{2})\) is a solution of the constrained minimization problem:

Here, the condition \(u_{1} = u_{2}\) on \(\Gamma \) is understood in the trace sense [12]. Unfortunately, this argument is not valid since the solution space \(BV(\varOmega )\) of (B.1) allows discontinuities on \(\Gamma \). We provide a simple counterexample inspired by [5].

Example 1

Let \(\varOmega = (-1, 1) \subset \mathbb {R}\), \({\varOmega }_{1} = (-1, 0)\), and \({\varOmega }_{2} = (0, 1)\). We set

We will show that:

is the unique solution of (B.1) for sufficiently large \(\alpha \), while it cannot be a solution of (B.2) since it is not continuous on \(\Gamma \). We clearly have \(TV_{\varOmega }(u^{*}) = 1\). There exists \(p^{*} \in {C_{0}^{1}} (\varOmega )\) with \(|p^{*}| \leq 1\) which attains the supremum in the definition of total variation for \(u^{*}\). Indeed, with \(p^{*}(x) = 1 - x^{2}\), we have:

Choose \(\alpha > 2 = \sup \limits _{x \in \varOmega } |(p^{*})^{\prime }(x)|\). For any \(u \in BV(\varOmega )\) with \(0 \leq u \leq 1\), we have:

In addition, we have:

Since \(\alpha \pm (p^{*})^{\prime }\) is strictly positive, the equality holds if and only if \(u = u^{*}\) a.e. Therefore, \(u^{*}\) is the unique solution of (B.1).
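A discretized version of the counterexample makes the gap between the jump solution and continuous candidates visible. The data below are assumptions, since the displayed definitions are not reproduced here: the sketch takes \(g = -1\) on \({\varOmega }_{1}\) and \(g = 1\) on \({\varOmega }_{2}\), the energy \(\alpha \int _{\varOmega } g u \, dx + TV_{\varOmega }(u)\), and \(u^{*} = 1\) on \({\varOmega }_{1}\), \(u^{*} = 0\) on \({\varOmega }_{2}\), which is consistent with \(TV_{\varOmega }(u^{*}) = 1\) and \(p^{*}(x) = 1 - x^{2}\). The grid, the function names, and the ramp family are hypothetical.

```python
import numpy as np

def energy(u, g, alpha, h):
    """Discrete analogue of alpha * int(g * u) + TV(u) on a uniform 1D grid."""
    return alpha * h * np.sum(g * u) + np.sum(np.abs(np.diff(u)))

N = 2000
h = 2.0 / N
x = -1.0 + (np.arange(N) + 0.5) * h     # cell centers in (-1, 1)
g = np.where(x < 0.0, -1.0, 1.0)        # assumed data: -1 on Omega_1, +1 on Omega_2
alpha = 3.0                             # any alpha > 2

u_star = np.where(x < 0.0, 1.0, 0.0)    # candidate with a jump across Gamma = {0}
e_star = energy(u_star, g, alpha, h)

def ramp(eps):
    """Continuous candidate: linear transition of width eps centered at 0."""
    return np.clip(0.5 - x / eps, 0.0, 1.0)

widths = [0.5, 0.25, 0.1, 0.05]
e_ramps = [energy(ramp(eps), g, alpha, h) for eps in widths]

rng = np.random.default_rng(0)
e_random = [energy(rng.uniform(0.0, 1.0, N), g, alpha, h) for _ in range(20)]
```

The ramp energies decrease toward `e_star` as the transition narrows but stay strictly above it, which mirrors the claim that the infimum over functions continuous across \(\Gamma \) is not attained, whereas the discontinuous \(u^{*}\) attains it.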

On the other hand, it is possible to construct an equivalent constrained minimization problem with an overlapping domain decomposition. Let S be a neighborhood of Γ with positive measure. Note that traces γ1u and γ2u of \(u \in BV(\mathcal {O})\) along Γ with respect to \(\mathcal {O} \cap {\varOmega }_{1}\) and \(\mathcal {O} \cap {\varOmega }_{2}\), respectively, are well-defined for any open subset \(\mathcal {O}\) of Ω such that \(S \subset \mathcal {O}\). Also, they satisfy the formula:

Set \(\widetilde {\varOmega }_{1} = {\varOmega }_{1} \cup S\) and \(\widetilde {\varOmega }_{2} = {\varOmega }_{2}\). Then, \(\{ \widetilde {\varOmega }_{s} \}_{s=1}^{2}\) forms an overlapping domain decomposition of \(\varOmega \); i.e., \(\widetilde {\Gamma } = \widetilde {\varOmega }_{1} \cap \widetilde {\varOmega }_{2}\) has positive measure. We define local energy functionals \(E_{s}: BV(\widetilde {\varOmega }_{s}) \rightarrow \mathbb {R}\) as follows:

Consider the following constrained minimization problem:

Then, we have the following equivalence theorem.

Theorem B.1

Let \((\tilde {u}_{1}^{*}, \tilde {u}_{2}^{*} ) \in BV(\widetilde {\varOmega }_{1}) \times BV(\widetilde {\varOmega }_{2})\) be a solution of (B.3). Then, \(u^{*} \in BV(\varOmega )\) defined by:

is a solution of (B.1). Conversely, if \(u^{*} \in BV(\varOmega )\) is a solution of (B.1), then \((\tilde {u}_{1}^{*} , \tilde {u}_{2}^{*}) = (u^{*}|_{\widetilde {\varOmega }_{1}} , u^{*}|_{{\widetilde {\varOmega }}_{2}}) \in BV({\widetilde {\varOmega }}_{1}) \times BV({\widetilde {\varOmega }}_{2})\) is a solution of (B.3).

Proof

First, suppose that \(\left (\tilde {u}_{1}^{*}, \tilde {u}_{2}^{*} \right )\) is a solution of (B.3). For any \(u \in BV(\varOmega )\) with \(0 \leq u \leq 1\), we have:

Hence, \(u^{*}\) minimizes (B.1).

Conversely, we assume that \(u^{*} \in BV(\varOmega )\) is a solution of (B.1) and set \(\left (\tilde {u}_{1}^{*} , \tilde {u}_{2}^{*}\right ) = \left (u^{*}|_{\widetilde {\varOmega }_{1}} , u^{*}|_{\widetilde {\varOmega }_{2}}\right )\). Take any \((\tilde {u}_{1} , \tilde {u}_{2}) \in BV(\widetilde {\varOmega }_{1}) \times BV(\widetilde {\varOmega }_{2})\) such that \(0 \leq \tilde {u}_{1} \leq 1\), \(0 \leq \tilde {u}_{2} \leq 1\), and \(\tilde {u}_{1} = \tilde {u}_{2}\) on \(\widetilde {\Gamma }\). Let:

Then, we have:

Therefore, \(\left (\tilde {u}_{1}^{*} , \tilde {u}_{2}^{*}\right )\) is a solution of (B.3). □
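A discrete one-dimensional analogue shows why the overlap matters for the additivity of the energy. The sketch below is only an illustration under assumptions: it uses the finite-difference total variation on a row of cells, treats a single cell as a stand-in for the neighborhood \(S\), and does not reproduce the local functionals \(E_{s}\) of this appendix. On the nonoverlapping split, the sum of local total variations misses the jump across \(\Gamma \); enlarging the first subdomain by the overlap cell recovers it.

```python
import numpy as np

def tv(u):
    """Discrete 1D total variation: sum of absolute neighbor differences."""
    return float(np.sum(np.abs(np.diff(u))))

rng = np.random.default_rng(2)
N, m = 12, 6                       # cells 0..m-1 form Omega_1, cells m..N-1 form Omega_2
u = rng.uniform(0.0, 1.0, N)

tv_full = tv(u)
tv_1, tv_2 = tv(u[:m]), tv(u[m:])
jump = abs(u[m] - u[m - 1])        # discrete counterpart of |gamma_1 u - gamma_2 u| on Gamma

# Overlapping split: enlarge Omega_1 by one cell (a stand-in for S) so that the
# interface jump is counted inside the enlarged subdomain.
tv_1_tilde = tv(u[:m + 1])

# A step function across Gamma: its whole variation sits on the interface.
u_step = np.concatenate([np.ones(m), np.zeros(N - m)])
tv_step_nonoverlap = tv(u_step[:m]) + tv(u_step[m:])
tv_step_overlap = tv(u_step[:m + 1]) + tv(u_step[m:])
```

For the step function, the nonoverlapping sum evaluates to zero while the overlapping sum equals the full variation, which matches the reason for classifying the construction as an overlapping decomposition.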

In conclusion, it is more appropriate to classify the proposed DDM in [11] as an overlapping one instead of a nonoverlapping one.

Cite this article

Park, J. An overlapping domain decomposition framework without dual formulation for variational imaging problems. Adv Comput Math 46, 57 (2020). https://doi.org/10.1007/s10444-020-09799-7

DOI: https://doi.org/10.1007/s10444-020-09799-7

Keywords

- Domain decomposition method

- Augmented Lagrangian method

- Variational imaging

- Total variation

- Higher order models