Abstract

In an arbitrage-free securities market, all state-contingent claims and stochastic discount factors can be approximated by index options with a semi-nonparametric method. These index options are constructed by efficient algorithms, and the uniform approximation error under these algorithms is derived. This paper suggests a method to examine state-contingent claims and stochastic discount factors using index options in financial markets, regardless of whether the market is complete.

Notes

Hutchinson et al. (1994) also suggest a neural network approach to derive a model-free option price and compare the resulting option formula with the Black–Scholes option formula.

It is well known that the state-price density can be derived from the second order derivative of option prices (Breeden and Litzenberger 1978).
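As a minimal sketch of this relation, the snippet below recovers the state-price density as a central second difference of call prices with respect to the strike. It assumes Black–Scholes call prices purely so that the result can be compared with a known closed form; the function names and parameter values are illustrative and are not taken from the paper.

```python
import math

def norm_cdf(z):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def norm_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def bs_call(s0, k, r, sigma, t):
    """Black-Scholes call price (assumed here only as a source of option prices)."""
    d1 = (math.log(s0 / k) + (r + 0.5 * sigma**2) * t) / (sigma * math.sqrt(t))
    d2 = d1 - sigma * math.sqrt(t)
    return s0 * norm_cdf(d1) - k * math.exp(-r * t) * norm_cdf(d2)

def state_price_density(call, k, h=0.1):
    """Central second difference of the call price in the strike."""
    return (call(k + h) - 2.0 * call(k) + call(k - h)) / (h * h)

# Illustrative parameters (assumptions, not values from the paper).
s0, r, sigma, t, k = 100.0, 0.02, 0.2, 1.0, 105.0
spd_fd = state_price_density(lambda x: bs_call(s0, x, r, sigma, t), k)

# Closed-form second strike derivative of the Black-Scholes call price.
d2 = (math.log(s0 / k) + (r - 0.5 * sigma**2) * t) / (sigma * math.sqrt(t))
spd_exact = math.exp(-r * t) * norm_pdf(d2) / (k * sigma * math.sqrt(t))
```

With market data, `call` would be an interpolated price curve, and the step `h` would have to be chosen with the strike spacing and price noise in mind.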

By an index we mean a simple portfolio of primitive assets, so an index option is an option written on an index. Exchange-traded products, market indexes and passive indexes are examples of indexes. There has been considerable interest in index investing over the last 40 years. See, for example, Bogle (2016).

Previous studies on the spanning problem for an infinite state economy include Nachman (1987, 1988), Duan et al. (1992) and Galvani and Troitsky (2010). These authors either assume strong technical conditions on the securities market or make use of complex securities such as options on portfolios of index options to span the entire space of the contingent claims. The spanning theorem in Tian (2014), Theorem 2.1, does not need any such condition. It is further proved in Tian (2014) that the index option spanning theorem therein cannot be improved at all.

The bounded payoff function assumption can be relaxed, for example to index call options, under certain circumstances. See, for instance, Tian (2014), Theorem 2.7. In contrast to Ross (1976), the crucial point of the approximation theorems in Tian (2014) is that the payoff function of the index options is fixed, while many underlying indexes are required.

The curse of dimensionality is a widely studied issue in option pricing theory.

There are other greedy algorithms in addition to these two specialized algorithms. See, for instance, Barron et al. (2008) and Donahue et al. (1997). We highlight these two greedy algorithms in this paper because they are among the algorithms with the best rate of convergence, as shown in Donahue et al. (1997).

According to the Options Clearing Corporation, there is over $3 trillion in US-based ETPs alone, there are over 4000 ETFs, and options on 647 ETFs/ETPs are traded on the exchange. However, the volume of options on ETPs is still relatively small compared with the underlying ETPs.

Since \((x_1 = \log S_1, x_2 = \log S_2)\) has a bivariate Gaussian distribution, we are able to compute \(e^{-rT} \mathbb {E}^{Q}\left[ f(S_1, S_2) \right] \) directly, but the pricing formula itself is quite lengthy given the expression of \(f(S_1, S_2)\). For this reason we use Monte Carlo simulation for the direct computation of this expectation.
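A minimal sketch of such a Monte Carlo computation is given below. The payoff \(f\) of the paper is not reproduced in this note, so the sketch assumes a hypothetical exchange payoff \(\max (S_1 - S_2, 0)\), chosen only because Margrabe's closed form is available as a check. The function names, parameter values and the lognormal risk-neutral dynamics are likewise assumptions.

```python
import math
import numpy as np

def mc_price(payoff, s0, r, sigmas, rho, t, n_paths=400_000, seed=0):
    """Monte Carlo estimate of exp(-rT) E^Q[payoff(S1, S2)] for bivariate lognormal prices."""
    rng = np.random.default_rng(seed)
    z1 = rng.standard_normal(n_paths)
    # Correlate the second Gaussian factor with the first one.
    z2 = rho * z1 + math.sqrt(1.0 - rho**2) * rng.standard_normal(n_paths)
    s1 = s0[0] * np.exp((r - 0.5 * sigmas[0]**2) * t + sigmas[0] * math.sqrt(t) * z1)
    s2 = s0[1] * np.exp((r - 0.5 * sigmas[1]**2) * t + sigmas[1] * math.sqrt(t) * z2)
    return math.exp(-r * t) * float(np.mean(payoff(s1, s2)))

def margrabe(s0, sigmas, rho, t):
    """Closed-form price of the exchange payoff max(S1 - S2, 0), no dividends."""
    vol = math.sqrt(sigmas[0]**2 + sigmas[1]**2 - 2.0 * rho * sigmas[0] * sigmas[1])
    d1 = (math.log(s0[0] / s0[1]) + 0.5 * vol**2 * t) / (vol * math.sqrt(t))
    d2 = d1 - vol * math.sqrt(t)
    cdf = lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return s0[0] * cdf(d1) - s0[1] * cdf(d2)

# Hypothetical payoff and parameters, used only to validate the simulation.
exchange = lambda s1, s2: np.maximum(s1 - s2, 0.0)
params = dict(s0=(100.0, 100.0), sigmas=(0.2, 0.3), rho=0.5, t=1.0)
mc = mc_price(exchange, r=0.03, **params)
exact = margrabe(**params)
```

Any other payoff can be passed as `payoff`, provided it accepts two NumPy arrays of terminal prices.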

If \(\zeta \) has a normal distribution with mean \(\mu \) and variance \(\sigma ^2\), then \(\mathbb {E}[\zeta ^4] = 4! \left( \frac{1}{2! 2^2} \sigma ^4 + \frac{1}{2! 2} \mu ^2 \sigma ^2 + \frac{1}{4!} \mu ^4 \right) = 3 \sigma ^4 + 6 \mu ^2 \sigma ^2 + \mu ^4\).
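The fourth moment can be checked numerically. The sketch below compares the standard closed form \(\mu ^4 + 6 \mu ^2 \sigma ^2 + 3 \sigma ^4\) with a Gauss–Hermite quadrature, and confirms that it equals \(4!\) times the sum \(\frac{1}{2! 2^2} \sigma ^4 + \frac{1}{2! 2} \mu ^2 \sigma ^2 + \frac{1}{4!} \mu ^4\). The function names and the test values of \(\mu \) and \(\sigma \) are illustrative assumptions.

```python
import math
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

def normal_fourth_moment(mu, sigma):
    """Closed form of E[zeta^4] for zeta ~ N(mu, sigma^2)."""
    return mu**4 + 6.0 * mu**2 * sigma**2 + 3.0 * sigma**4

def fourth_moment_quadrature(mu, sigma, deg=10):
    """Gauss-Hermite quadrature with weight exp(-x^2/2); exact for this polynomial."""
    nodes, weights = hermegauss(deg)
    return float(np.sum(weights * (mu + sigma * nodes)**4) / math.sqrt(2.0 * math.pi))

mu, sigma = 0.3, 1.7  # arbitrary test values
closed = normal_fourth_moment(mu, sigma)
numeric = fourth_moment_quadrature(mu, sigma)

# The sum with factorial weights, which equals E[zeta^4] / 4!.
scaled_sum = (sigma**4 / (math.factorial(2) * 2**2)
              + mu**2 * sigma**2 / (math.factorial(2) * 2)
              + mu**4 / math.factorial(4))
```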

Briefly, a frame sequence satisfies a pseudo-Plancherel formula. The construction of frames in a Hilbert space is therefore highly non-trivial, and a frame has some important properties for representation. We refer to Christensen (2003) for an introduction to frame theory.

Even though every payoff function in \(S^{1}_{2}\left( \mathbb {R}^{k}, \mu _{Q}\right) \) has a continuous first-order derivative, Proposition 3.3 can be applied to a larger function space that includes the payoff functions of call and put options. See its proof in “Appendix A”.

If \(\log S_t\) follows either a Gaussian process or an Ornstein–Uhlenbeck process under the risk-neutral probability measure, then it is easy to show that \(S_T = S_0^{a} \zeta \), where \(a {>} 0\) and the density function of the random variable \(\zeta \) is independent of \(S_0\).
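For the Ornstein–Uhlenbeck case the decomposition can be written out explicitly. The sketch below assumes the risk-neutral dynamics \(dX_t = \kappa (\bar{x} - X_t)\,dt + \nu \,dW_t\) for the logarithm \(X_t\) of the price; the symbols \(\kappa {>} 0\), \(\bar{x}\) and \(\nu \) are notation introduced here for illustration and do not appear in the paper.

```latex
\[
X_T = e^{-\kappa T} X_0 + \bar{x}\,\bigl(1 - e^{-\kappa T}\bigr)
      + \nu \int_0^T e^{-\kappa (T-s)}\, dW_s
\quad\Longrightarrow\quad
S_T = S_0^{a}\, \zeta , \qquad a = e^{-\kappa T} > 0,
\]
\[
\zeta = \exp\Bigl( \bar{x}\,\bigl(1 - e^{-\kappa T}\bigr)
      + \nu \int_0^T e^{-\kappa (T-s)}\, dW_s \Bigr).
\]
```

Here \(\zeta \) is lognormal with parameters that depend only on \(\kappa \), \(\bar{x}\), \(\nu \) and \(T\), so its density does not involve \(S_0\). If instead \(X_t\) is a Brownian motion with drift, the same computation gives \(a = 1\).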

We are grateful for an anonymous referee’s insightful suggestion to investigate the link between the index option approximation and the recovery problem studied in Ross (2015) and Borovicka et al. (2016). A detailed discussion of the recovery problem via the universal approximation with index options is beyond the scope of this paper.

References

Ait-Sahalia, Y., Lo, A.W.: Nonparametric estimation of state-price densities implicit in financial asset prices. J Finance 53(2), 499–547 (1998)

Bakshi, G., Madan, D.: Spanning and derivative-security valuation. J Financ Econ 55, 205–238 (2000)

Bansal, R., Viswanathan, S.: No arbitrage and arbitrage pricing: a new approach. J Finance 48(4), 1231–1262 (1993)

Barron, A.: Universal approximation bounds for superpositions of a sigmoidal function. IEEE Trans Inf Theory 39, 930–945 (1993)

Barron, A., Cohen, A., Dahmen, W., DeVore, R.: Approximation and learning by greedy algorithms. Ann Stat 36(1), 64–94 (2008)

Bertsimas, D., Kogan, L., Lo, A.: Hedging derivative securities and incomplete markets: an \(\epsilon \)-arbitrage approach. Oper Res 49(3), 372–397 (2001)

Bogle, J.C.: The index mutual fund: 40 years of growth, change, and challenge. Financ Anal J 72, 9–13 (2016)

Borovicka, J., Hansen, L.P., Scheinkman, J.: Misspecified recovery. J Finance 71, 2493–2544 (2016)

Boyle, T., Tian, W.: Quadratic interest rate models as approximation to effective rate model. J Fixed Income 9, 69–80 (1999)

Breeden, D., Litzenberger, R.: Prices of state-contingent claims implicit in option prices. J Bus 51(4), 621–651 (1978)

Carr, P., Ellis, K., Gupta, V.: Static hedging of exotic options. J Finance 53(2), 1165–1190 (2002)

Christensen, O.: An Introduction to Frames and Riesz Bases. Birkhäuser, Boston (2003)

Christoffersen, P., Heston, S., Jacobs, K.: Capturing option anomalies with a variance-dependent pricing kernel. Rev Financ Stud 26, 1963–2006 (2013)

Cochrane, J., Saa-Requejo, J.: Beyond arbitrage: good-deal asset price bounds in incomplete markets. J Polit Econ 108(1), 79–119 (2000)

Cybenko, G.: Approximation by superposition of a sigmoidal function. Math Control Signals Syst 2, 303–314 (1989)

Detemple, J., Kitapbayev, Y.: On American VIX options under the generalized 3/2 and 1/2 models. Math Finance 28, 550–581 (2018)

Diaconis, P., Shahshahani, M.: On nonlinear functions of linear combinations. SIAM J Sci Stat Comput 5(1), 175–191 (1984)

Dittmar, R.: Nonlinear pricing kernels, kurtosis preference, and evidence from the cross section of equity returns. J Finance 57(1), 369–403 (2002)

DeVore, R.: Optimal Computation. International Congress of Mathematicians, Plenary Lecture, Madrid (2006)

Donahue, M., Gurvits, L., Darken, C., Sontag, E.: Rates of convex approximation in non-Hilbert spaces. Constr Approx 13(2), 187–220 (1997)

Duan, J., Moreau, A., Sealey, C.W.: Spanning with index options. J Financ Quant Anal 27(2), 303–309 (1992)

Duffie, D., Pan, J., Singleton, K.: Transform analysis and asset pricing for affine jump-diffusions. Econometrica 68(6), 1343–1376 (2000)

Gagliardini, P., Gourieroux, C., Renault, E.: Efficient derivative pricing by the extended method of moments. Econometrica 79(4), 1181–1232 (2011)

Galvani, V., Troitsky, V.G.: Options and efficiency in spaces of bounded claims. J Math Econ 46(4), 616–619 (2010)

Hansen, L., Jagannathan, R.: Implications of security market data for models of dynamic economies. J Polit Econ 99, 225–262 (1991)

Harvey, C.R., Siddique, A.: Conditional skewness in asset pricing tests. J Finance 55(3), 1263–1295 (2000)

Hornik, K.: Approximation capabilities of multilayer feedforward networks. Neural Netw 4, 251–257 (1991)

Hornik, K., Stinchcombe, M., White, H.: Universal approximations of an unknown mapping and its derivatives using multilayer feedforward networks. Neural Netw 3, 551–560 (1990)

Hutchinson, J., Lo, A., Poggio, T.: A nonparametric approach to the pricing and hedging of derivative securities via learning networks. J Finance 49, 851–889 (1994)

Jarrow, R.A., Jin, X., Madan, D.B.: The second fundamental theorem of asset pricing. Math Finance 9(3), 255–273 (1999)

Kirkby, J.L., Deng, S.: Static hedging and pricing of exotic options with payoff frames. Math Finance (2018). https://doi.org/10.2139/ssrn.2501812 (forthcoming)

Nachman, D.C.: Efficient funds for meager asset spaces. J Econ Theory 43(2), 335–347 (1987)

Nachman, D.C.: Spanning and completeness with options. Rev Financ Stud 1, 311–328 (1988)

Ross, S.A.: Options and efficiency. Q J Econ 90, 75–89 (1976)

Ross, S.A.: Neoclassical Finance. Princeton University Press, Princeton (2005)

Ross, S.A.: The recovery theorem. J Finance 70(2), 615–648 (2015)

Stutzer, M.: A simple nonparametric approach to derivative security valuation. J Finance 51(5), 1633–1652 (1996)

Tian, W.: Spanning with index. J Math Econ 53, 111–118 (2014)

We would like to thank the editors and anonymous referees for their constructive comments and insightful suggestions that improve the paper.

Appendices

Appendix A: Proofs

Proof of Proposition 2.1

By the definition of \(P_nf\), we have, for any real number \(\alpha \in \mathbb {R}\),

Then

Since \(r_{n-1}\) is orthogonal to \(Span\{g_1, \ldots , g_{n-1}\}\) and \(f_{n-1} \in Span\{g_1, \ldots , g_{n-1}\}\), we have \({<}r_{n-1}, f_{n-1}{>} = 0\). Then

where we choose any representation of \(f \equiv \sum _{g \in \mathcal{D}} b_g g\) and the last inequality follows from the definition of \(g_n\). Therefore,

Considering \(\alpha \in \mathbb {R}\) with \(b(\alpha ):= 2 \alpha - \alpha ^2 b^2 {>} 0\) and substituting this inequality into inequality (A-1) for \(||r_{n-1}||^2\), we have

We prove, by induction on n, that

First, note that \(b(\alpha ) = \frac{1}{b^2} - b^2 \left( \alpha - \frac{1}{b^2}\right) ^2\), so \(b(\alpha )\) attains its maximum at \(\alpha = \frac{1}{b^2}\) and \(b(\alpha ) \leqslant \frac{1}{b^2}\) for all real numbers \(\alpha \). Then, for \(n = 0\),

where the first inequality follows from

Therefore, the inequality (A-4) holds for \(n=0\). Next, assume that (A-4) holds for \(n-1\) and that \(||r_{n-1}||^2 {>} \frac{||f||_{\mathcal{L}_1}^2}{(n+1) b(\alpha )}\). (Otherwise, \(||r_n||^2 \leqslant ||r_{n-1}||^2 \leqslant \frac{||f||_{\mathcal{L}_1}^2}{(n+1) b(\alpha )}\), so (A-4) holds for n.) Invoking (A-3),

Hence, we have proved the inequality (A-4) which ensures that

The proof is completed. \(\square \)

Proof of Proposition 2.2

Given any \(f \in \mathcal{X}, h \in \mathcal{L}_1\) and any \(n \geqslant 1\), using \({<}r_{n-1}, f_{n-1}{>} = 0\),

where the first inequality follows from the definition of \(g_n\), while the second and third inequalities follow from the Cauchy–Schwarz inequality. Then,

and

Let \(a_{k} \equiv ||r_{k}||^2 - ||f-h||^2\) for each \(k \geqslant 0\). If \(||r_{n_0}|| \leqslant ||f-h||\) for a positive integer \(n_0\), then \(||r_n|| \leqslant ||r_{n_0}|| \leqslant ||f-h||\) for all \(n \geqslant n_0\), and thus Proposition 2.2 holds trivially. Therefore, we assume that \(a_n {>} 0\) for all n. Hence, (A-9) implies that

which again yields (by using (A-1))

Hence,

Next, we prove that, by an induction argument, for any \(n \geqslant 1\),

and then,

the proof is thus completed.

To this end, if \(a_0 \leqslant \frac{4 ||h||_{\mathcal{L}_1}^2}{b(\alpha )}\), then \(a_1 \leqslant a_0 \leqslant \frac{4 ||h||_{\mathcal{L}_1}^2}{b(\alpha )}\). If \(a_0 {>} \frac{4 ||h||_{\mathcal{L}_1}^2}{b(\alpha )}\), then by using (A-12),

thus \(a_1 \leqslant \frac{4 ||h||_{\mathcal{L}_1}^2}{b(\alpha )}\). Assuming the inequality (A-13) holds for \(n-1\) and \(a_{n-1} {>} \frac{4 ||h||_{\mathcal{L}_1}^2}{n b(\alpha )}\), then by (A-12), we obtain

\(\square \)

Proof of Proposition 2.3

As \(\mathcal{D}= - \mathcal{D}\), any \(f \in \mathcal{L}_1\) can be written as \(f = \sum _{g \in \mathcal{D}} b_g g\) with \(b_g \geqslant 0\) for all \(g \in \mathcal{D}\). For any \(\epsilon {>} 0\), by the definition of \(\mathcal{L}_1\), there exists a finite subset \(\hat{\mathcal{D}} \subseteq \mathcal{D}\) such that

\(f = \hat{f} + h, \hat{f} = \sum _{g \in \hat{\mathcal{D}}}b_g g\);

\(||h||_{\mathcal{L}_1} \leqslant \epsilon \);

\(||\hat{f}||_{\mathcal{L}_1} = \sum _{g \in \hat{\mathcal{D}}}b_g = ||f||_{\mathcal{L}_1} + \delta , |\delta | \leqslant \epsilon \).

By the definition of \(f_n\), for any \(\beta \in \mathbb {R}\) and \(g \in \mathcal{D}\), we have

The inequality holds for all \(g \in \mathcal{D}\), so it also holds on average with weights \(\frac{b_g}{\sum _{g \in \hat{\mathcal{D}}} b_g}\). Using \(||g|| \leqslant b\) for all \(g \in \mathcal{D}\), we have

Choosing \(\alpha _n = 1 -\frac{1}{n}, \beta = \frac{1}{n} \sum _{g \in \hat{\mathcal{D}}}b_g\), we obtain

Since

and similarly,

we obtain

where \(O(\epsilon )\) represents some quantity which converges to zero when \(\epsilon \rightarrow 0\). Letting \(\epsilon \downarrow 0\),

By an easy induction argument with respect to n, we have

\(\square \)

Proof of Proposition 2.4

As \(h \in \mathcal{L}_1\), for any \(\epsilon {>} 0\), there exists a decomposition \(h = \hat{h} + h^*\) where

\(\hat{h}= \sum _{g \in \hat{\mathcal{D}}} b_g g, b_g {>} 0\) and \(\hat{\mathcal{D}}\) is a finite subset of \(\mathcal{D}\);

\(\sum _{g \in \hat{\mathcal{D}}} b_g = ||h||_{\mathcal{L}_1} + \delta , |\delta | \leqslant \epsilon \);

\(||h^*||_{\mathcal{L}_1} \leqslant \epsilon \).

Given any \(\alpha \in (0,1)\) and \( \beta \in \mathbb {R}\), by using the same argument as in Proposition 2.3, we have

Applying the above equality for each \(g \in \hat{\mathcal{D}}\) on average with weights \(\frac{b_g}{\sum _{g \in \hat{\mathcal{D}}} b_g}\), choosing \(\beta := (1-\alpha )\sum _{g \in \hat{\mathcal{D}}}b_g\) and using the same argument as in Proposition 2.3 while letting \(\epsilon \downarrow 0\), we have

where \(M:= b^2 ||h||_{\mathcal{L}_1}^2 - ||h||^2\). Hence, for any \(\alpha \in (0,1)\),

Choosing \(\alpha = 0\) for \(n=1\), (A-19) implies that \(||r_1||^2 - ||f-h||^2 \leqslant M\). Assume that, for \(n \geqslant 2\),

then by using \(\alpha = 1 - \frac{2}{n}\) and (A-19), we derive

Therefore, the proof is completed by an induction argument. \(\square \)

Proof of Proposition 3.1

See Tian (2014), Theorem 2.1, for \(p=2\). This approximation theorem is inspired by the universal approximation theorem for artificial neural networks. See Cybenko (1989) and Hornik (1991). \(\square \)

Proof of Proposition 3.2

Since \(\mathcal{F}= \mathcal{F}_x\), by Proposition 3.1, any state-contingent claim in \(L^2(\Omega , \mathcal{F},P)\) can be approximated well by an element of \(Span\mathcal{H}\). If the index options \(\sigma (m \cdot x + \theta )\) are marketable assets with a corresponding (market) price functional \(\pi : \mathcal{H}\rightarrow \mathbb {R}\), then the securities market \(\left( \Omega , \mathcal{F}, \mathcal{H}, \pi \right) \) is a complete market in the sense of Jarrow et al. (1999). Equivalently, the market is complete in the sense of Harrison and Kreps (1979), that is, there exists a unique stochastic discount factor \(\phi \in L^2(\Omega , \mathcal{F})\) such that the price of any contingent claim, x, at time zero, is \(\mathbb {E}[\phi x]\). Therefore, there exists a unique dual \(\phi \), in \(L^2(\Omega , \mathcal{F}, P)\) such that \(\mathbb {E}[\phi g] = \pi (g)\) for any \(g \in \mathcal{H}\). Moreover, if the securities market is arbitrage free in the sense of Harrison and Kreps (1979), the price of any state-contingent claim \(f \in L^2(\Omega , \mathcal{F}, P)\) is given by \(\mathbb {E}[\phi f]\).

We first prove this proposition for \(f \in \mathcal{L}_1\). By Proposition 2.3, there exists a portfolio of index options, \(g_n\), with at most n elements in \(\mathcal{D}\) such that

where \(C = ||f||_{\mathcal{L}_1}^2 b^2 - ||f||^2 \) is a nonnegative number. Thus,

Next, for a general contingent claim \(f \in L^2(\Omega , \mathcal{F}, P)\), Proposition 3.1 ensures that f can be approximated arbitrarily well by elements of \(Span \mathcal{H}\) and thus by elements of \(\mathcal{L}_1\). Hence there exists an element \(h \in \mathcal{L}_1\) such that \(||f - h|| \leqslant \frac{\epsilon }{\mathbb {E}\left[ \phi ^2\right] ^{1/2}}\). Then, we use the first part of this proposition for h to obtain

where C is a nonnegative number in (A-21) in which the element \(f \in \mathcal{L}_1\) is replaced by \(h \in \mathcal{L}_1\). Finally, given the greedy algorithms in Sect. 2, the construction of the portfolio of index options with at most n index options, \(g_n\), is effective. \(\square \)

Proof of Proposition 3.3

This result is due to Hornik et al. (1990), Corollary 3.6. By definition, any function in \(S^{1}_{2}\left( \mathbb {R}^{k}, \mu \right) \) has a continuous first-order derivative. But many contingent claims, such as call-type derivatives, are not differentiable everywhere. Therefore, the function space \(S^{1}_{2}\left( \mathbb {R}^{k}, \mu \right) \) might be too restrictive for contingent claim analysis. For this purpose, we consider the Sobolev space \(W^{1}_{2}(\mathbb {R}^{k})\), in which each function has distributional derivatives \(\partial ^{\alpha }f, \forall |\alpha | \leqslant 1\). The Sobolev space \(W^{1}_{2}(\mathbb {R}^{k})\) is large enough to include most contingent claims. Moreover, it is a Hilbert space associated with a norm \( || \cdot ||_{1,2,S}\). By applying Hornik et al. (1990), Theorem 2.1 and Corollary 3.8, \(Span\mathcal{H}(\sigma )\) generates the Sobolev space \(W^{1}_{2}(\mathbb {R}^{k})\) under the norm \( || \cdot ||_{1,2,S}\). \(\square \)

Proof of Proposition 4.1

We first prove that for any \(x, y \in L^2\), the following inequality holds:

To show it, let \(A = \left\{ x^{+} \geqslant y^{+} \right\} \) denote the event on which \(x^{+}\) is at least \(y^{+}\). Since \(x^{+} - y^{+} \leqslant (x-y)^{+}\), we obtain

(1) For any \(\phi \in (L^{2})^{+}\), by Proposition 3.2, there exists \(h_n \in Span\mathcal{H}\) such that \(||\phi - h_n|| \leqslant \frac{1}{n}\). Then, since \(\phi = \phi ^{+}\), we have

Therefore, we have proved that \((L^{2})^{+} = \overline{(Span \mathcal{H})^{+}}\).

(2) The effective construction of the index option approximation follows from the effective construction of \(\phi \in L^2\) in terms of the index options, by Proposition 2.2 and Proposition 2.4.

(3) It follows from the first part and the Lebesgue dominated convergence theorem. \(\square \)

Proof of Proposition 4.2

The proof relies on frame theory in a finite-dimensional space, and we refer to Christensen (2003), Chapter 1, for details. Let \(V = L^{2}(\Omega , P)\); V is a finite-dimensional space of dimension n. Since V is spanned by \(f_l, l = l_1, \ldots , l_m\), the family \(\left\{ f_l: l = l_1, \ldots , l_m \right\} \subseteq V\) is a frame (Christensen 2003, Corollary 1.1.3). We define the frame operator

Equivalently, \(S (f) = A \cdot f\). By Christensen (2003), Theorem 1.1.5, S is invertible and self-adjoint, and for any \(f \in V\),

In particular, for the unique stochastic discount factor \(f = \phi \), we obtain

since \(a_l = \mathbb {E}[\phi f_l]\) by the equation of the stochastic discount factor. Notice that \(A^{-1} \cdot f = S^{-1}(f)\). The proof of this proposition is completed. \(\square \)
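The reconstruction of the stochastic discount factor from prices can be illustrated in a small finite state space. The sketch below assumes the standard finite-dimensional frame operator \(S(f) = \sum _l \mathbb {E}[f f_l] f_l\) and the resulting formula \(\phi = \sum _l a_l S^{-1}(f_l)\); the three states, the random payoffs and the value of \(\phi \) are hypothetical, and the matrix `S` below is our own representation, which need not coincide with the matrix A of the paper.

```python
import numpy as np

rng = np.random.default_rng(1)
n_states, n_assets = 3, 5

p = np.array([0.2, 0.5, 0.3])                  # state probabilities (assumed)
F = rng.normal(size=(n_assets, n_states))      # row l holds the payoff f_l in each state
phi = np.array([1.4, 0.9, 0.7])                # hypothetical stochastic discount factor

# Observed prices a_l = E[phi f_l] under the probability vector p.
a = F @ (p * phi)

# Matrix of the frame operator: S f = sum_l E[f f_l] f_l = F^T F diag(p) f.
S = F.T @ F @ np.diag(p)

# phi = sum_l a_l S^{-1} f_l = S^{-1} F^T a.
phi_rec = np.linalg.solve(S, F.T @ a)
```

The five payoffs are linearly dependent in a three-state space, which is exactly the redundancy a frame allows; the recovered `phi_rec` agrees with `phi` as long as the payoffs span the whole space.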

Proof of Proposition 4.3

By Proposition 3.1, for any \(\epsilon {>} 0\), there exists an index option portfolio, \(\phi _0\), in \(Span \mathcal{H}\) such that

\(\phi _0\) is merely an element of \(Span \mathcal{H}\) satisfying (A-28), which might not be entirely positive let alone a stochastic discount factor; but it can still be used to price a general state-contingent claim via index options up to a uniform error. To see this, choose an index option portfolio \(g_n\) in \(Span_{n} \mathcal{H}\) such that (by Proposition 3.2)

Hence, we have

where we choose \(\epsilon \leqslant ||\phi ||\), and C and \(C'\) are positive numbers independent of n. \(\square \)

Proof of Proposition 4.4

It is the same as Proposition 3.2 since \(\mathbb {E}[\phi ^2]\) is bounded by a constant A, for all \(\phi \in \Phi \). \(\square \)

Proof of Proposition 5.1

By Tian (2014), Theorem 2.1, there exists an \(f_1 \in Span \mathcal{H}\subseteq \mathcal{L}_1\) such that \(||f - f_1|| \leqslant \frac{\epsilon }{||\phi ||}\). Since \(f_1 \in \mathcal{L}_1\), there exists a positive number L such that \(f_1 = L f_2\) with \(f_2 \in \overline{co \mathcal{H}}\), the closure of the convex hull of \(\mathcal{H}\). Since \(\sigma (t)\) is bounded by a positive number b, it is easy to see that \(||f_2 - g|| \leqslant K \equiv 2 b\) for any \(g \in \mathcal{H}\). By Donahue et al. (1997), Corollary 3.6, there exists a sequence \(h_n \in Span_{n} \mathcal{H}\) such that

for \(1 {<} p \leqslant 2\) and

for \( 2 {<} p {<} \infty \). Then \(g_n = L h_n \in Span_{n} \mathcal{H}\) is what is desired. The proof for the incomplete market in a good-deal setting is similar and omitted. \(\square \)

Proof of Proposition 5.2

Given any \(\phi \in (L^{p})^{+}\), by virtue of Proposition 5.1, there exists a sequence \(r_n \in Span\mathcal{H}\) such that \(||\phi - r_n|| \rightarrow 0\). Therefore, the set \(\left\{ ||r_{n}||: n = 1, 2, \ldots \right\} \) as well as the set \(\left\{ ||r_{n}^{+} ||: n = 1, 2, \ldots \right\} \) is bounded. Then there exists a convergent subsequence of \(r_n\), written as \(h_n\), such that \(h_n \rightarrow \phi \) almost surely. Moreover, \(h_n^{+} \rightarrow \phi \) almost surely since \(\phi = \phi ^{+}\). Next, by the Banach–Saks theorem for the Banach space \(L^p\), there exists a subsequence of \(h_n^{+}\), written as \(h_{n_k}^{+}\), such that

converges to some \(z \in L^{p}\) under the norm topology when \(k \rightarrow \infty \). Write \(s_k = h_{n_k}^{+} \in (Span \mathcal{H})^{+}\). Since \(h_{n}^{+}\) converges to \(\phi \) almost surely,

almost surely, then \(\phi = z\) with probability one. Therefore,

converges to \(\phi \) under the norm topology. We have thus proved that \((L^{p})^{+} = \overline{co((Span \mathcal{H})^{+}) }\). \(\square \)

Appendix B: Numerical examples

We present the details of the construction of the universal approximation in Examples 3.2 and 3.5.

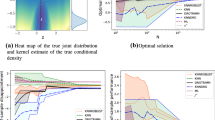

Example 3.2.

We implement the orthogonal greedy algorithm for the contingent claim \(f(x,y) = e^{x}y\), where (x, y) has a standard bivariate Gaussian distribution, and the activation function is \(\sigma (t) = 1, t \geqslant 0; \sigma (t) = 0, t {<} 0\).

The index is written as \(w_1 x + w_2 y + \theta \). Because \(\sigma (at) = \sigma (t), \forall a {>} 0\), we can assume that \(|w_i| \leqslant 1, |\theta | \leqslant 1\) (See Tian 2014, Theorem 2.7). Moreover, we can assume that \(w_2 = 0\), or \(w_2 = 1\). When \(w_2 = 0\), the index is written as \(x - \theta \); when \(w_2 = 1\), the index is written as \(y - \beta x - \theta \) for \(|\beta | \leqslant 1, |\theta | \leqslant 1\).

Step 1.

Let \(f_0 = 0\). We choose \(g \in \mathcal{H}\) to maximize \(\mathbb {E}\left[ fg\right] \). When \(g = \sigma (x - \theta )\), \(\mathbb {E}[fg] = 0\). When \(g = \sigma (y - \beta x - \theta )\), it is straightforward to derive that

Then, the problem reduces to calculating

and the optimum values are \(\beta = 0, \theta = 0\). In other words, \(g_{1}(x,y) = \sigma (y)\). Therefore, the projection \(f_1 = Pr{<}g_1{>}\) is

Step 2.

Let \(r_1 = f - f_1\). Since \(\mathbb {E}[f \sigma (x - \theta )] = 0\), we have \(\mathbb {E}[r_1 \sigma (x -\theta )] {<} 0\), so the problem reduces to computing

By straightforward calculation,

By computation, the optimum values are \(\beta ^* = 0.03, \theta ^* = -0.05\). Therefore, the subspace used to approximate f is spanned by \(g_1(x,y) = \sigma (y)\) and \(g_2(x,y) = \sigma (y - 0.03x + 0.05)\). Hence \(f_2 = Pr(f)\), the projection of f onto this subspace, is

Let \(r_2 = f - f_2\). Similarly, we find

and continue to find \(f_3(x)\) and so on.
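Step 1 of Example 3.2 can be checked with a short computation. The sketch below assumes that x and y are independent standard normal variables and uses a closed form for \(\mathbb {E}[e^{x} y\, \sigma (y - \beta x - \theta )]\) that we derived under that assumption (it is not copied from the paper): \(e^{1/2} \exp \left( -\frac{(\beta + \theta )^2}{2(1+\beta ^2)}\right) / \sqrt{2\pi (1+\beta ^2)}\). The grid resolution, sample size and function name are also illustrative choices.

```python
import math
import numpy as np

def inner_product(beta, theta):
    """Closed form of E[e^x y 1{y - beta*x - theta >= 0}] for independent N(0,1) x, y."""
    var = 1.0 + beta**2
    return (math.exp(0.5) * math.exp(-(beta + theta)**2 / (2.0 * var))
            / math.sqrt(2.0 * math.pi * var))

# Grid search over |beta| <= 1, |theta| <= 1 with step 0.05.
grid = [i / 20 for i in range(-20, 21)]
best = max(((b, t) for b in grid for t in grid), key=lambda bt: inner_product(*bt))

# Monte Carlo sanity check of the closed form at one arbitrary point.
rng = np.random.default_rng(0)
x, y = rng.standard_normal((2, 1_000_000))
mc = float(np.mean(np.exp(x) * y * (y - 0.5 * x - 0.25 >= 0)))

# Projection coefficient of f on g_1 = sigma(y): E[f g_1] / E[g_1^2], with E[g_1^2] = 1/2.
coef = inner_product(0.0, 0.0) / 0.5
```

The grid maximum is attained at \(\beta = 0, \theta = 0\), in line with the choice \(g_1(x,y) = \sigma (y)\) in Step 1.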

Example 3.5.

We implement the orthogonal greedy algorithm for the contingent claim \(f(x) = e^{2x} 1_{\{x \leqslant 0\} } + 1_{\{ x {>} 0 \}}\), where x has a standard normal distribution, and the activation function is \(\sigma (t) = 1, t \geqslant 0; \sigma (t) = 0, t {<} 0\). The index is written as \(\beta x + \theta \). Similar to Example 3.2, it suffices to consider all index options with the payoff \(\sigma (x - \theta ), |\theta | \leqslant 1\).

Step 1.

Let \(f_0 = 0\), \(r_0 = f -f_0\). We choose \(g = \sigma (x - \theta ) \in \mathcal{H}\) to maximize \(\mathbb {E}\left[ r_0 g\right] \). By straightforward calculation,

We find that \(\theta _1^* = Argmax_{|\theta | \leqslant 1} \mathbb {E}\left[ f(x) \sigma (x - \theta )\right] = -1\). Then \(g_1(x) = \sigma (x - \theta _1^*) = \sigma (x + 1)\). By calculation, the first-order approximation is

Step 2.

Let \(r_1(x) = f(x) - f_1(x)\). By similar calculation, we obtain

Therefore, \(f_2 \in Span\left\{ g_1(x), g_2(x) \right\} \) where \(g_2(x) \equiv \sigma (x + 0.12)\). Precisely, \(f_2(x) = c_1 g_1(x) + c_2 g_2(x)\), and the coefficients \(c_1, c_2\) are determined by the following linear system:

We find \(c_1 = 0.3940\) and \(c_2 = 0.5964\). Then, the second-order approximation is \(f_2(x) = 0.3940 \sigma (x+1) + 0.5964 \sigma (x+0.12)\).
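The first two steps can be reproduced numerically. The sketch below is one possible implementation of the orthogonal greedy iteration for this example, not the authors' code: it assumes a grid of thresholds with step 0.01, selects the threshold that maximizes the absolute value of the unnormalized inner product of the residual with \(\sigma (x - \theta )\), and uses closed-form Gaussian integrals. All function names are hypothetical.

```python
import math
import numpy as np

def Phi(z):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def inner_f_g(theta):
    """E[f(x) 1{x >= theta}] for f = e^{2x} 1{x <= 0} + 1{x > 0}, x ~ N(0,1)."""
    upper = 1.0 - Phi(max(theta, 0.0))  # region x > 0, where f = 1
    lower = 0.0
    if theta < 0.0:
        # Integral of e^{2x} phi(x) over [theta, 0] equals e^2 [Phi(-2) - Phi(theta - 2)].
        lower = math.exp(2.0) * (Phi(-2.0) - Phi(theta - 2.0))
    return upper + lower

def inner_g_g(a, b):
    """E[1{x >= a} 1{x >= b}] for x ~ N(0,1)."""
    return 1.0 - Phi(max(a, b))

def orthogonal_greedy(n_steps, grid):
    thetas, coeffs = [], np.array([])
    for _ in range(n_steps):
        # Inner product of the current residual with sigma(x - t).
        def score(t):
            return inner_f_g(t) - sum(c * inner_g_g(ti, t) for c, ti in zip(coeffs, thetas))
        thetas.append(max(grid, key=lambda t: abs(score(t))))
        # Orthogonal projection of f onto the span of the selected index options.
        gram = np.array([[inner_g_g(a, b) for b in thetas] for a in thetas])
        rhs = np.array([inner_f_g(a) for a in thetas])
        coeffs = np.linalg.solve(gram, rhs)
    return thetas, coeffs

grid = [i / 100 for i in range(-100, 101)]  # thresholds with |theta| <= 1
thetas, coeffs = orthogonal_greedy(2, grid)
```

With this grid the selected thresholds are \(-1\) and \(-0.12\), and the coefficients agree with \(c_1\) and \(c_2\) above to three decimal places. The continuous maximizer in the second step is close to \(-0.123\), so a finer grid gives slightly different coefficients.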

Step 3.

Let \(r_2(x) = f(x) - f_2(x)\). We obtain

Then, \(f_3(x)\) is the projection of the function f(x) onto the space \(Span \left\{ g_1(x), g_2(x), g_3(x) \right\} \) where \(g_3(x) = \sigma (x+0.14)\). A straightforward calculation shows that the third-order approximation is \(f_3(x) = 0.2488\sigma (x+1) + 0.4213\sigma (x+0.12) + 0.3203 \sigma (x + 0.14)\). \(\square \)

Cite this article

Jiang, J., Tian, W. Semi-nonparametric approximation and index options. Ann Finance 15, 563–600 (2019). https://doi.org/10.1007/s10436-018-0341-4

DOI: https://doi.org/10.1007/s10436-018-0341-4