Abstract

In the present article we investigate how geometric microstructures of a domain can affect diffusion on the macroscopic level. More precisely, we look at a domain with additional microstructures of two kinds: the first are periodically arranged “horizontal barriers,” and the second are “vertical barriers” which are not periodically arranged but are uniform on certain intervals. Both structures are parametrized in size by a small parameter \(\varepsilon \). Starting from such a geometry combined with a diffusion(-reaction) model, we derive the homogenized limit and discuss the differences between the resulting limit problems for various particular arrangements of the microstructures.


1 Introduction

The aim of the present work is to study the question whether and how geometric microstructures of the domain on which a diffusion process takes place are reflected in the macroscopic description of the same process.

The macroscopic description is related to the microscopic one by a homogenization limit.

Put differently, we want to investigate the effects of geometric microstructures on the partial differential equation and the boundary conditions obtained through homogenization.

Since we want to “separate” the effect of the underlying geometry from other effects, we do not include reactions in our diffusion process.

This is also due to the observation that in particular nonlinear reaction terms can have a strong influence on, e.g., the stability properties or the bifurcation behavior (see, e.g., [22, 30] as well as [11] and [12, 13]).

So, we start our considerations with a diffusion model on a suitable domain.

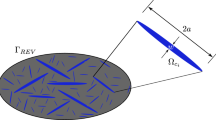

We model (in two dimensions) the geometric microstructure by two classes of “barriers,” horizontal and vertical ones. The first class can be seen as the various layers of the microstructure and the second class as the possible bridgings between them. Mathematically, for this latter class we have an additional liberty of whether or not these connections are present. In fact, we will show that depending on where and how often these connections are open we get different limit problems.

This whole geometric structure is parametrized by basically one parameter \(\varepsilon \) which is small. And in particular we are interested in what happens if this parameter tends to zero.

This microstructure is situated in the interior of a “container.” All these geometric properties are explained in full detail in Sect. 2.

Starting from a classical diffusion equation on the domain equipped with a geometric microstructure as described above, we pass to the limit as \(\varepsilon \) tends to zero.

This homogenization is performed as a combination of classical homogenization techniques and the concept of concentrated capacity. The combination of these two techniques was first used in the seminal works of Andreucci and collaborators (see [1–5]).

The difference between their geometry and ours is that in their work only the barriers of the first class were present (see, e.g., [3]).

In the present work, we impose an additional constraint on the positions of the vertical “barriers.”

The limit behavior obtained through homogenization depends crucially on the properties of the microstructure we have at level \(\varepsilon \ne 0\). Three particular situations are depicted in Fig. 4, and it turns out that the corresponding limits are genuinely different. These differences are discussed in Sect. 6.

As mentioned earlier, passing from the problem at fixed level \(\varepsilon \ne 0\) to the homogenization limit can be viewed as the passage from the microscopic to the macroscopic level.

The precise formulation of these various limit scenarios as well as the precise hypotheses are presented in Sect. 3.

In Sects. 4 and 5 we give the derivation of the limits and show some additional properties of solutions to these limit problems.

Although at first glance our setting might be comparable with other homogenization problems (see, e.g., [7, 9, 26], or [14]; or [6, 10, 23] and [28] for more general monographs), our setting here and the combination of homogenization and concentrated capacity are quite particular.

2 Our model

In this section we will explain our model, which, as announced in the Introduction, consists of two parts: a suitable description of the underlying geometry and a PDE describing the diffusion process on the domain with these geometric properties.

In addition, we will discuss the problem in the case that the small parameter \(\varepsilon \) encoding the geometry is still strictly positive, i.e., the so-called \(\varepsilon \)-problem.

2.1 The underlying geometry

In this part we present the geometric idea behind our model and we give the precise description of it as well.

The idea is the following: We insert our microstructure into an outer container.

The container is just a rectangle \(\varOmega \). The microstructure is modelled by horizontal and vertical obstacles (or “barriers”) as displayed in Fig. 1.

In the following discussion we assume that the thickness of the horizontal and the vertical obstacles is the same. The case of different thicknesses can be treated along the same lines—as long as they are of the same order of magnitude as \(\varepsilon \).

Note that when \(\varepsilon \) decreases the number of layers of our microstructure increases. This dependence is given by the following relation:

The number \(n=n(\varepsilon )\) of horizontal barriers is given by

where \(\theta _0\) has the following geometric-volumetric interpretation

As indicated with different colors, i.e., black and gray in Fig. 1, in contrast to the horizontal barriers, denoted by \(C_j\), the vertical ones—\(V_{j,l}\) and \(V_{j,r}\)—may be present or not (see also figures at the end of this subsection).

In order to indicate this, we introduce the following functions:

Let \(I_j\) denote the space between the horizontal barrier \(C_{j}\) and \(C_{j+1}\) (respectively between the bottom \(\left\{ z=0 \right\} \) and \(C_1\) and between \(C_n\) and the top). More precisely we have \(I_0= \left\{ \vert x \vert< R \right\} \times \left\{ 0< z < \frac{\nu \varepsilon }{2} \right\} \), \(I_j= \left\{ \vert x \vert< R \right\} \times \left\{ \frac{\nu \varepsilon }{2} + (j-1) \nu \varepsilon + j \varepsilon< z < \frac{\nu \varepsilon }{2} + j (1+ \nu ) \varepsilon \right\} \) and \(I_n = \left\{ \vert x \vert< R \right\} \times \{ \frac{\nu \varepsilon }{2} + (n-1) \nu \varepsilon + n \varepsilon< z< H\} = \left\{ \vert x \vert< R \right\} \times \left\{ H - \frac{\nu \varepsilon }{2}< z < H \right\} \).

Then define

\({\mathscr {B}}_{j,r}\) is defined analogously.

For the sake of simplicity, we assume that there are no “completely closed compartments.” More precisely we assume that

and vice versa

At this point, let us summarize the further notation concerning our underlying geometry:

- \({\tilde{\varOmega }}_{\varepsilon }\) denotes the big (outer) cylinder

  $$\begin{aligned} {\tilde{\varOmega }}_{\varepsilon }= ( -\sigma \varepsilon -R , R + \sigma \varepsilon ) \times (0,H ) \end{aligned}$$

- The “free space,” i.e., the space where diffusion can take place, is denoted by \(\varOmega _{\varepsilon }\), i.e.,

  $$\begin{aligned} \varOmega _{\varepsilon } = {\tilde{\varOmega }}_{\varepsilon } \backslash \Big ( \cup _j \overline{C_j} \bigcup \cup _j {\mathscr {B}}_{j,l}\overline{V_{j,l}} \bigcup \cup _j {\mathscr {B}}_{j,r}\overline{V_{j,r}} \Big ) \end{aligned}$$

- An additional index “T” denotes the parabolic cylinder defined by the product of the given domain with the time interval (0, T], e.g.,

  $$\begin{aligned} {\tilde{\varOmega }}_{\varepsilon ,T}= ( -\sigma \varepsilon -R , R + \sigma \varepsilon ) \times (0,H ) \times (0,T] \end{aligned}$$

- The “outer shell” \(S_{\varepsilon }\) is the following set

  $$\begin{aligned} S_{\varepsilon }=( -\sigma \varepsilon -R , -R ) \times (0,H) \cup (R , R + \sigma \varepsilon ) \times (0,H) \equiv S_{\varepsilon , l} \cup S_{\varepsilon ,r} \end{aligned}$$

- On the horizontal barriers \(C_j\) (of the form \(\left\{ -R< x< R \right\} \times \big \{ \frac{\nu \varepsilon }{2} + (j-1) (\nu +1)\varepsilon< z < \frac{\nu \varepsilon }{2} + (j-1) (\nu +1)\varepsilon + \varepsilon \big \}\)) we use the following notation (as displayed in Fig. 2):

  - The space between two horizontal barriers, say between \(C_j\) and \(C_{j+1}\), is denoted by \(I_{j}\).
  - The upper boundary is denoted by \(\partial I^-_{j}\).
  - The lower boundary is denoted by \(\partial I^+_{j-1}\).
  - The lateral boundary parts are denoted by \(L^l_j\) (for the left boundary part) and \(L^r_j\) (for the right boundary part).

- On the vertical barriers \(V_{j,l}\) and \(V_{j,r}\) (of the form \(\left\{ -R< x< -R + \varepsilon \right\} \times \left\{ \frac{\nu \varepsilon }{2} + (j-1) \nu \varepsilon + j \varepsilon< z < \frac{\nu \varepsilon }{2} + j (1+ \nu ) \varepsilon \right\} \), respectively, \(\left\{ R - \varepsilon< x< R \right\} \times \left\{ \frac{\nu \varepsilon }{2} + (j-1) \nu \varepsilon + j \varepsilon< z < \frac{\nu \varepsilon }{2} + j (1+ \nu ) \varepsilon \right\} \)) we use the following notation: The lateral boundary parts are denoted by \(l^o_{j,l}\), \(l^i_{j,l}\), \(l^i_{j,r}\) and \(l^o_{j,r}\) as indicated in Fig. 3. Here, \(l^o_{j,l}\) and \(l^i_{j,l}\) are the boundaries of \(V_{j,l}\), and similarly for the boundaries of \(V_{j,r}\).

Here, R, H, \(\nu \) and \(\sigma \) are fixed whereas \(\varepsilon \) is a small parameter which will tend to zero.
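As a quick consistency check on the vertical layout, the number of barriers can be recovered from the two expressions for \(I_n\) given above. This is a sketch derived only from those expressions, not a reproduction of the displayed relation for \(n(\varepsilon )\), and it tacitly restricts to those \(\varepsilon \) for which the result is an integer. Equating the two lower endpoints of \(I_n\) gives

$$\begin{aligned} \frac{\nu \varepsilon }{2} + (n-1) \nu \varepsilon + n \varepsilon = H - \frac{\nu \varepsilon }{2} \quad \Longleftrightarrow \quad n (1+\nu ) \varepsilon = H \quad \Longleftrightarrow \quad n(\varepsilon ) = \frac{H}{(1+\nu )\varepsilon }. \end{aligned}$$

Consequently, the total height occupied by the barriers and by the gaps \(I_0, \dots , I_n\) does not depend on \(\varepsilon \):

$$\begin{aligned} n \varepsilon = \frac{H}{1+\nu }, \qquad 2 \cdot \frac{\nu \varepsilon }{2} + (n-1) \nu \varepsilon = n \nu \varepsilon = \frac{\nu H}{1+\nu }. \end{aligned}$$

If \(\theta _0\) is read as the fraction of the height occupied by the horizontal barriers (an assumption made here for illustration only), this would give \(\theta _0 = \frac{1}{1+\nu }\) and \(1-\theta _0 = \frac{\nu }{1+\nu }\).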

At this point, let us introduce one additional notation: As can easily be seen, as \(\varepsilon \) tends to zero the outer container \(\varOmega _{\varepsilon }\) shrinks to

And the outer shell \(S_{\varepsilon }= S_{\varepsilon ,l} \cup S_{\varepsilon ,r}\) shrinks to

Remark

- A similar geometry has been studied by Andreucci et al. (see [2, 3] and [5], and with some variants [1]) in the context of visual transduction. The difference is that here as a second class of barriers we have the vertical ones. If none of the vertical barriers is present and if one looks at the two-dimensional reduction of their three-dimensional model, our model coincides with the one studied in [3] and our main result coincides in the case of vanishing source terms.

- Note that we do not impose any periodicity condition, but we assume a certain uniform distribution of the vertical barriers in a sense to be explained later on when we state our main result.

Before we pass to the formulation of the PDE part of our model we would like to point out the two main possible configurations one should have in mind. Moreover, we will also give one example of a possible combination of them.

The first possibility is that there are no vertical barriers on one side, e.g., on the right-hand side. This case is called model A. In this case \({\mathscr {B}}_{j,l}=1\) for all j and \({\mathscr {B}}_{j,r}=0\) for all j.

The second case is the possibility that there are no vertical barriers at all. This case is referred to as model B. This corresponds to the geometry appearing in [3].

The last example, referred to as model M, is of mixed nature: In the lower half, i.e., for \(0 \le z \le H/2\), there are no vertical barriers present, and in the upper half, i.e., for \(H/2 \le z \le H\), all the possible openings on the left side are closed whereas on the right side they are still open.

These particular cases are displayed in Fig. 4.
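In terms of the indicator functions \({\mathscr {B}}_{j,l}\) and \({\mathscr {B}}_{j,r}\), the three configurations can be summarized as follows. This is only a restatement of the verbal descriptions above, using the convention visible in model A that the value 1 means that the barrier is present. How a gap \(I_j\) that straddles \(z = H/2\) is treated in the mixed case (called model M in Sect. 3) is not specified in the text and is left open here.

$$\begin{aligned}&\text {Model A:} \quad {\mathscr {B}}_{j,l}=1, \quad {\mathscr {B}}_{j,r}=0 \quad \text {for all } j, \\&\text {Model B:} \quad {\mathscr {B}}_{j,l}=0, \quad {\mathscr {B}}_{j,r}=0 \quad \text {for all } j, \\&\text {Model M:} \quad {\mathscr {B}}_{j,l}= {\left\{ \begin{array}{ll} 1 &{} \text {if } I_j \subset \left\{ z> H/2 \right\} , \\ 0 &{} \text {if } I_j \subset \left\{ z < H/2 \right\} , \end{array}\right. } \quad {\mathscr {B}}_{j,r}=0 \quad \text {for all } j. \end{aligned}$$

In all three cases at most one of \({\mathscr {B}}_{j,l}\) and \({\mathscr {B}}_{j,r}\) equals 1 for each j, so no gap is closed on both sides, in line with the assumption that there are no completely closed compartments.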

2.2 The PDE model at level \(\varepsilon \)

On the domain described in the previous section, we will look at the following problem—where \(\varepsilon \) is fixed.

Before we come to the statement of the problem at level \(\varepsilon \), let us start with the following observation.

The total volume V of the “free space between the horizontal barriers” in the region \( \left\{ \vert x \vert \le R \right\} \) is asymptotically preserved, more precisely

But on the other hand, the total volume of the outer shell S tends to zero, i.e.,

In order to compensate for the vanishing volume of the outer shell, we introduce the following function, which allows us to track also the contribution of u in this outer shell

where \(\varepsilon _0 \in (0,1]\) is fixed and \(\varepsilon \in (0, \varepsilon _0 ]\).
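The two volume statements above can be checked by a direct count. The following sketch uses only the geometry of Sect. 2.1 and the identity \(n(1+\nu )\varepsilon = H\), which follows from the two descriptions of \(I_n\). The gaps \(I_0, \dots , I_n\) have total height \(n \nu \varepsilon \) and width 2R, and at most 2n vertical barriers, each of area \(\varepsilon \cdot \nu \varepsilon \), are removed from them. Hence

$$\begin{aligned} 2R \, n \nu \varepsilon - 2 n \nu \varepsilon ^2 \le V \le 2R \, n \nu \varepsilon = \frac{2RH\nu }{1+\nu }, \qquad 2 n \nu \varepsilon ^2 = \frac{2 \nu H}{1+\nu } \, \varepsilon \rightarrow 0, \end{aligned}$$

whereas for the outer shell, which consists of two strips of width \(\sigma \varepsilon \) and height H,

$$\begin{aligned} \vert S_{\varepsilon } \vert = 2 \sigma \varepsilon H \rightarrow 0 \quad \text {as} \; \varepsilon \rightarrow 0. \end{aligned}$$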

Then \(u_{\varepsilon } \in C(0,T; L^2(\varOmega _{\varepsilon })) \cap L^2(0,T; W^{1,2}(\varOmega _{\varepsilon }))\) denotes the weak solution of the following PDE

with the following boundary condition

where as usual n denotes the normal vector, and the initial condition

where we assume that the given \(u(x,z,0)=u_0(x,z) \ge 0\) is bounded and smooth (\(C^1\) would be sufficient, but for the sake of simplicity we assume smoothness).

Here, \(L^s(0,T; W^{k,p})\) denotes the Bochner space of \(L^s\)-functions with values in the Sobolev space \(W^{k,p}\), the space of distributions whose derivatives up to order k belong to \(L^p\). The corresponding norms are given by

with the usual modifications in the case \(s=\infty \), respectively \(p=\infty \).

The space \(C(0,T; L^2(\varOmega _{\varepsilon })) \) is defined analogously.

Note that for \(\varepsilon = \varepsilon _0\) we have the usual diffusion equation in \(\varOmega _{\varepsilon _0}\).

In our situation where we are particularly interested in the interplay between the geometry and the PDE, the choice of zero fluxes across the boundaries is most natural.

Moreover, since we are interested in the effects of the geometry, more precisely the placements of the horizontal and vertical “barriers” we do not take into account any reaction terms.

Remark

- What about other boundary conditions? In the general situation one could impose that \(\nabla u_{\varepsilon } \cdot n = f_o\; \text {on} \; (\cup _j L_j^l) \bigcup (\cup _j L_j^r) \bigcup (\cup _j l^o_{j,l}) \bigcup (\cup _j l^o_{j,r})\), \(\nabla u_{\varepsilon } \cdot n = f_i \; \text {on} \; (\cup _j l^i_{j,l}) \bigcup (\cup _j l^i_{j,r})\) and \(\nabla u_{\varepsilon } \cdot n = f_h\; \text {on} \; (\cup _{j=0}^{n-1} \partial I^+_j) \bigcup (\cup _{j=1}^n \partial I^-_j)\), where \(f_o\), \(f_i\) and \(f_h\) extend to \(W^{1,1}\)-functions on \({\tilde{\varOmega }}_{\varepsilon }\). (Note that in the above formulation, by abuse of notation, we mean that the boundary condition applies only if the corresponding vertical barriers are present.) In doing so, some care is needed. If, for example, \(f_h\) is strictly positive, this production rate has to be scaled by \(\varepsilon \), i.e., one has to impose \(\nabla u_{\varepsilon } \cdot n = \varepsilon f_h\; \text {on} \; (\cup _{j=0}^{n-1} \partial I^+_j) \bigcup (\cup _{j=1}^n \partial I^-_j)\). Otherwise, the total production rate blows up, since as \(\varepsilon \) tends to zero the total length \(\mathscr {H}^1\big (\bigcup _j (\partial I^+_j \cup \partial I^-_j)\big )=2R \cdot 2n\) becomes arbitrarily large.

- The initial data are assumed to be smooth for the sake of simplicity, and actually \(C^1\)-regularity would be sufficient.

- General background material about parabolic problems of the kind of our model can be found in [18, 19] or [29]. For important results about Bochner spaces the reader is referred to, e.g., [17, 20, 25] or [29]. The results related to the corresponding elliptic problem (trace theorems, Sobolev embeddings, etc.) are presented in [18] or [21].
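To make the scaling in the first item of the remark concrete, here is a short computation under the simplifying assumption (made here for illustration only) that \(f_h\) is a positive constant. There are 2n horizontal faces of length 2R each, and \(n(1+\nu )\varepsilon = H\) by the description of \(I_n\) in Sect. 2.1, so

$$\begin{aligned} \mathscr {H}^1\Big (\bigcup _j (\partial I^+_j \cup \partial I^-_j)\Big ) = 2R \cdot 2n = \frac{4RH}{(1+\nu )\varepsilon } \rightarrow \infty \quad \text {as} \; \varepsilon \rightarrow 0. \end{aligned}$$

With the unscaled flux \(f_h\) the total production rate through these faces would therefore grow like \(1/\varepsilon \), whereas with the scaled flux \(\varepsilon f_h\) it stays fixed:

$$\begin{aligned} f_h \cdot \frac{4RH}{(1+\nu )\varepsilon } \rightarrow \infty , \qquad \varepsilon f_h \cdot \frac{4RH}{(1+\nu )\varepsilon } = \frac{4RH f_h}{1+\nu }. \end{aligned}$$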

Our goal is to describe the limit of the above problem as \(\varepsilon \rightarrow 0\).

But before we perform this passage to the limit, let us say a few words about the above problems for fixed \(\varepsilon \).

One of the first and most natural questions is whether the solutions remain positive—for positive initial data. In fact, we have the following result.

Proposition 1

For each fixed \(\varepsilon > 0\) the above problem has a unique solution \(u_{\varepsilon }\) which satisfies the following properties.

- (i) The solution \(u_{\varepsilon }\) is positive and bounded, i.e.,

  $$\begin{aligned} 0 \le u_{\varepsilon }(x,z,t) \le C \quad \forall \, (x,z,t) \in \varOmega _{\varepsilon ,T}. \end{aligned}$$

- (ii) Moreover, we have the energy estimate

  $$\begin{aligned} \sup _{0 \le t \le T}\vert \vert \sqrt{a_{\varepsilon }}u_{\varepsilon }(\cdot , \cdot , t) \vert \vert _{L^2(\varOmega _{\varepsilon })} + \vert \vert \sqrt{a_{\varepsilon }} \nabla u_{\varepsilon } \vert \vert _{L^2(\varOmega _{\varepsilon ,T})} \le C. \end{aligned}$$

- (iii) The following uniform “time-regularity property” holds

  $$\begin{aligned} \int _0^{T-h}\int _{\varOmega _{\varepsilon }}\frac{1}{h}a_{\varepsilon }(u_{\varepsilon }(t+h) -u_{\varepsilon }(t))^2 \; dx dz \,dt \le C. \end{aligned}$$

Here, C does not depend on \(\varepsilon \). This constant depends only on the data \(u(x,z,t=0)\) and on the constants R, H, T, \(\sigma \), \(\nu \) and \(\varepsilon _0\).

Since this result is standard, we will not give a detailed proof here.

Nevertheless, we would like to point out some important aspects.

Remark

- The assertion about existence and uniqueness of the solution to the \(\varepsilon \)-problem is standard. The interested reader is referred to Ladyženskaja–Solonnikov–Ural’ceva, chapter III, §5, Theorem 5.2 ([24], see also [19]). A detailed presentation of the above regularity results with all the calculations can be found, e.g., in [27].

- The positivity follows from a weak version of the maximum principle (see, e.g., [15, 24] or [18]) in combination with the use of \(u_{\varepsilon }\) itself as a test function, after suitable modifications. This latter point is achieved as follows: Look at \(-u_{\varepsilon }^-\), the negative part of \(u_{\varepsilon }\), and in addition take its Steklov (time) average. This average is defined as follows: Let \(f \in L^ 1(\varOmega _T)\) and let \(h > 0\) be sufficiently small. Then the Steklov (time) average \(f^h\) of f with respect to the time variable t is given by

  $$\begin{aligned} f^h(\cdot , t) := \frac{1}{h} \int _t^{t+h} f(\cdot , \tau ) \, d\tau \; \text {where} \, 0< t < T- h. \end{aligned}$$

  One of the important features of such an average is that it has a regularizing effect. In fact, with respect to the time variable, \(f^h \in W^{1,p}\) for \(f \in L^p\) (\(p < \infty \)). Similar averages can also be taken with respect to other (space) variables. Further details and proofs about Steklov averages can be found, e.g., in [24] or [16].

- As for the strategy to prove the boundedness of \(u_{\varepsilon }\), it is essentially the same as for the positivity. The basic idea is that again \(u_{\varepsilon }\) itself, up to an additive constant and up to passing to the Steklov averages, can be used as a test function.

- The energy estimate as well as the uniform “time-regularity” property are classical, and the interested reader is referred to the book of Ladyženskaja–Solonnikov–Ural’ceva [24]. In fact, the following more refined time-regularity estimate holds

  $$\begin{aligned} \int _0^T \int _{\varOmega _{\varepsilon }} \frac{1}{h} a_{\varepsilon } (u_{\varepsilon }(t+h) - u_{\varepsilon }(t))^2 \rightarrow 0 \; \text {as} \; h \rightarrow 0. \end{aligned}$$

  From that one can deduce (by classical regularity theory) that \(\sqrt{a_{\varepsilon }}u_{\varepsilon } \in C(0,T;L^2(\varOmega _{\varepsilon }))\).

- Note that \(\sqrt{a_{\varepsilon }}u_{\varepsilon }\) has a special role; in particular, the energy estimate holds for this quantity. In this view, one could see \(\sqrt{a_{\varepsilon }} u_{\varepsilon }\), restricted to either the outer shell \(S_{\varepsilon }\) or the region \(\left\{ \vert x \vert < R \right\} \), as solutions of the classical diffusion problem (heat equation) with constant coefficients. And such solutions are smooth. But the physically relevant quantity is \(u_{\varepsilon }\) itself.
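Two elementary facts about the Steklov average may help in reading the estimates above. Both follow directly from the definition. The example \(f(\cdot ,t)=t\) is chosen here purely for illustration. First, the average of this function is

$$\begin{aligned} f^h(\cdot ,t) = \frac{1}{h} \int _t^{t+h} \tau \, d\tau = \frac{(t+h)^2 - t^2}{2h} = t + \frac{h}{2}, \end{aligned}$$

which converges to f as \(h \rightarrow 0\). Second, for integrable f and almost every t, the time derivative of the average is a difference quotient,

$$\begin{aligned} \partial _t f^h(\cdot ,t) = \frac{f(\cdot ,t+h) - f(\cdot ,t)}{h}. \end{aligned}$$

Applied to \(u_{\varepsilon }\), this allows one to rewrite the integrand of property (iii) in Proposition 1 as

$$\begin{aligned} \frac{1}{h} a_{\varepsilon } (u_{\varepsilon }(t+h) - u_{\varepsilon }(t))^2 = h \, a_{\varepsilon } \big ( \partial _t u_{\varepsilon }^h (t) \big )^2 , \end{aligned}$$

so that (iii) can be read as a uniform bound on \(\sqrt{h} \, \vert \vert \sqrt{a_{\varepsilon }} \, \partial _t u_{\varepsilon }^h \vert \vert _{L^2(\varOmega _{\varepsilon } \times (0,T-h))}\).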

3 The limit problem

In this section we present and state our main result.

For the limit problem when \(\varepsilon \rightarrow 0\) we have the following description.

Theorem 2

Assume that there exist intervals \(J_l \subset (0,H)\) and \(J_r \subset (0,H)\)—possibly empty—such that

respectively

and

Then the solutions \(u_{\varepsilon }\) of the \(\varepsilon \)-problems from above—suitably extended—converge in the sense of distributions to u where u solves

with boundary conditions

and

In addition, u satisfies

And the restrictions of \(u_{\varepsilon }\) to the outer shell \(S_{\varepsilon }\), more precisely, \(v_{\varepsilon , r}= \frac{1}{\sigma \varepsilon }\int _R^{R+ \sigma \varepsilon } u_{\varepsilon }(x,z,t) \; dx\), converge in the sense of distributions to \(v_r\), respectively, \(v_{\varepsilon ,l}\) converges to \(v_l\), with \(v_{l,z}=0=v_{r,z}\) for \(z=0\) and \(z=H\), and they are related to u in the following way

Moreover, we have the following transition condition

Remark

- Note that since we assumed that there are no closed compartments, the intervals \(J_l\) and \(J_r\) are disjoint.

- The boundary–transition condition can be summarized as follows: The normal derivative \(\nabla u \cdot n\) has to vanish on \(J_l\), respectively, on \(J_r\). And on the complement of \(J_l\), respectively, \(J_r\), u has to coincide with \(v_l\), respectively, with \(v_r\).

- In our particular model cases, the boundary–transition conditions read as follows:

  - Model A:

    $$\begin{aligned} u_x =0 \; \text {on} \; \left\{ x =-R \right\} \; \text {and} \; u=v_r \; \text {on} \; \left\{ x =R \right\} \end{aligned}$$

    Note that it holds \(J_l=(0,H)\) and \(J_r= \emptyset \).

  - Model B:

    $$\begin{aligned} u =v_l \; \text {on} \; \left\{ x =-R \right\} \; \text {and} \; u=v_r \; \text {on} \; \left\{ x =R \right\} \end{aligned}$$

    since \(J_l=J_r= \emptyset \).

  - Model M:

    $$\begin{aligned} u_x=0 \; \text {on} \; \left\{ x =-R \right\} \cap \left\{ z > \frac{H}{2} \right\} \; \text {and} \; u=v_l \; \text {on} \; \left\{ x =-R \right\} \cap \left\{ z \le \frac{H}{2} \right\} \end{aligned}$$

    and

    $$\begin{aligned} u=v_r \; \text {on} \; \left\{ x =R \right\} . \end{aligned}$$

    In this case we have \(J_l=\Big (\frac{H}{2}, H \Big )\) and \(J_r= \emptyset \).

- The assumptions on the vertical barriers can be seen as information about “where they are” (i.e., the location of the intervals \(J_l\) and \(J_r\)) and about which percentage of the connecting spaces between the interior (\(\left\{ \vert x \vert < R \right\} \)) and the exterior region (\(\left\{ \vert x \vert > R \right\} \)) are open, respectively, closed (i.e., the size of \(J_l\) and \(J_r\)).

- The limit problem in weak formulation reads

  $$\begin{aligned}&(1- \theta _0)\Big ( - \int _{\varOmega _{0,T}} u \varphi _t -\int _{\varOmega _0} u \varphi (t=0) + \int _{\varOmega _{0,T}} u_x \varphi _x \Big ) \\&\quad +\, \sigma \varepsilon _0 \Big ( - \int _{S_T} v \varphi _t - \int _S v \varphi (t=0) + \int _{S_T} v_z \varphi _z \Big ) =0 \end{aligned} \tag{1}$$

  for all test functions \(\varphi \in C^1(\varOmega _{0,T})\) vanishing at \(t=T\).

In addition, we can show improved regularity properties of the limit. More precisely, we have the following assertions.

The first one concerns time-regularity.

Proposition 3

The limit satisfies the “time-regularity”

The next two assertions regard space-regularity.

Proposition 4

The limit v in the outer shell satisfies the “space-regularity”

Proposition 5

The limit satisfies the “space-regularity”

Furthermore, the solution of the limit problem is unique.

Proposition 6

The solution of the limit problem is unique in the class \(W^{1,2}(0,T; L^2)\).

At this point, recall that the solutions of the problem at level \(\varepsilon \) satisfy the inequality \(0 \le u_{\varepsilon } \le C\) for some constant C. A natural question then is to ask whether the limit as well satisfies a positivity assertion. The answer again is positive.

Proposition 7

The solution (u, v) of the limit problem satisfies

for some positive constant C.

4 Proof of the main theorem: homogenization

The proof of Theorem 2 will be done basically in three steps.

First, we consider test functions whose support is disjoint from the “outer shell” \(S_{\varepsilon }\). This will basically give us the behavior “between the horizontal barriers.”

Second, we consider another class of particular test functions which now have a support which intersects the whole cylinder \(\varOmega _{\varepsilon }\) but which do not depend on x in the region \(\left\{ \vert x \vert > R \right\} \).

Finally, we will analyze how the “inner boundary condition” on \(\cup _j l^i_{j,l}\) and on \(\cup _j l^i_{j,r}\) passes to the limit. And we will establish the transition condition.

But first of all, we need some additional (technical) lemmata. This will be the content of the first subsection.

4.1 Some technical lemmata

As announced above, we would like to start with some observations which will turn out to be very useful when we perform the calculation of the limit problem.

The first result concerns the limit behavior of the space between the horizontal barriers. This result is a variant of an analogous assertion in [3].

Lemma 8

With the same notation as above we have for \(p \in (1, \infty )\)

Proof

In order to show weak convergence, first of all, look at a characteristic function \(\phi \) of a subinterval (a, b) of (0, H). For such a “test function” one immediately calculates

From that, the claimed weak convergence follows also immediately for step functions.

Finally, since by density any arbitrary test function \(\varphi \in L^{p'}(0,H)\) can be approximated by step functions, the assertion of weak convergence follows. \(\square \)
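The computation for a characteristic function can be sketched as follows. The sketch rests on an assumption, since the statement of the lemma is not reproduced here: we take the lemma to concern \(\chi _{\varepsilon }\), the indicator function (in the variable z) of the gaps, i.e., \(\chi _{\varepsilon }(z)=1\) if z lies in the z-range of some \(I_j\) and \(\chi _{\varepsilon }(z)=0\) otherwise. By the description of \(C_j\) and \(I_j\) in Sect. 2.1, \(\chi _{\varepsilon }\) is periodic with period \((1+\nu )\varepsilon \), and its integral over any interval of one period length equals \(\nu \varepsilon \). Splitting \((a,b)\) into \(m\) full periods and a remainder of length \(r < (1+\nu )\varepsilon \), so that \(b-a = m(1+\nu )\varepsilon + r\), one finds

$$\begin{aligned} \int _a^b \chi _{\varepsilon } \, dz = m \nu \varepsilon + \rho , \quad 0 \le \rho \le r, \qquad \frac{\nu }{1+\nu } (b-a) = m \nu \varepsilon + \frac{\nu r}{1+\nu }, \end{aligned}$$

and therefore

$$\begin{aligned} \Big \vert \int _0^H \chi _{\varepsilon } \, \phi \, dz - \frac{\nu }{1+\nu } \int _0^H \phi \, dz \Big \vert = \Big \vert \rho - \frac{\nu r}{1+\nu } \Big \vert \le r < (1+\nu ) \varepsilon \rightarrow 0 \end{aligned}$$

for \(\phi \) the characteristic function of \((a,b)\). Under this reading, the weak limit of \(\chi _{\varepsilon }\) is the constant \(\frac{\nu }{1+\nu }\).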

In order to actually compute the “inner limit” in the region \(\left\{ \vert x \vert < R \right\} \) we have to overcome the problem that a priori we have a sequence of functions \(u_{\varepsilon }\) whose support changes with \(\varepsilon \). In order to handle this inconvenience, we will show that the functions \(u_{\varepsilon }\) can be extended to (at least) \(\varOmega _{0} \cap \left\{ -R + \varepsilon< x < R- \varepsilon \right\} \) in such a way that the norms of the new extended functions can be uniformly bounded. This is the content of the following lemma.

Lemma 9

Assume that \(1< p < 2\).

There exist extensions \({\tilde{u}}_{\varepsilon }\) of \(u_{\varepsilon }\), defined on \(\varOmega _0 \cap \left\{ -R + \varepsilon< x < R - \varepsilon \right\} \) such that

- (i)

  $$\begin{aligned} {\tilde{u}}_{\varepsilon }= u_{\varepsilon } \quad \text {in } \bigcup _j I_j \end{aligned}$$

- (ii)

  $$\begin{aligned} {\tilde{u}}_{\varepsilon } \in L^2(0,T;W^{1,1}(\varOmega _0 \cap \left\{ -R + \varepsilon< x < R - \varepsilon \right\} )) \end{aligned}$$

  with

  $$\begin{aligned} \vert \vert {\tilde{u}}_{\varepsilon } \vert \vert _{L^2(0,T;W^{1,1}(\varOmega _0 \cap \left\{ -R + \varepsilon< x < R - \varepsilon \right\} ))} \le C \end{aligned}$$

- (iii)

  $$\begin{aligned} {\tilde{u}}_{\varepsilon } (\cdot , \cdot , t) \in L^p(\varOmega _0 \cap \left\{ -R + \varepsilon< x < R - \varepsilon \right\} ) \quad \forall \, t \in (0,T) \end{aligned}$$

  with

  $$\begin{aligned} \vert \vert {\tilde{u}}_{\varepsilon } \vert \vert _{L^p(\varOmega _0 \cap \left\{ -R + \varepsilon< x < R - \varepsilon \right\} )} \le C \end{aligned}$$

- (iv)

  $$\begin{aligned} \vert \vert {\tilde{u}}_{\varepsilon }(t + h) - {\tilde{u}}_{\varepsilon }(t) \vert \vert _{L^2(0,T-h;L^p(\varOmega _0 \cap \left\{ -R + \varepsilon< x < R - \varepsilon \right\} ))} \le C \sqrt{h} \quad \forall \, h \in (0,T) \end{aligned}$$

where the constants C do not depend on \(\varepsilon \).

Proof

The basic idea of how to construct an extension with the properties claimed above is the following: In a first step, for each space \(I_j\) between two consecutive horizontal barriers we reflect across the boundaries \(\partial I^-_j\) and \(\partial I^+_j\), combined with a suitable scaling. Then, in a second step we multiply the function obtained in the first step, which is now defined on \(C_j \cup I_j \cup C_{j+1}\), by a cutoff function which equals 1 on \(I_j\) and vanishes on \(\partial I^-_{j+1}\) and \(\partial I^+_{j-1}\). Finally, in a third step, this procedure is repeated for all \(I_j\), and the extension we are looking for is the superposition of all the single extensions from the preceding steps.

More precisely, we define \({\tilde{u}}_{\varepsilon }\) as follows:

Let the heights of the interfaces between the \(I_j\)’s and the \(C_j\)’s be parametrized by \(\xi ^+_j\) and \(\xi ^-_j\), i.e., \(\xi ^-_0=0\), \(\xi ^+_0=\frac{\nu \varepsilon }{2}\), \(\xi ^-_1=\frac{\nu \varepsilon }{2} + \varepsilon \), \(\xi ^+_1=\frac{\nu \varepsilon }{2} + \varepsilon + \nu \varepsilon \) and so on.
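Written out for general j, the interface heights can be read off from the positions of \(C_j\) and \(I_j\) given in Sect. 2.1. The following is only a restatement of those formulas in the present notation, with n the number of horizontal barriers:

$$\begin{aligned} \xi ^-_0&= 0, \qquad \xi ^-_j = \frac{\nu \varepsilon }{2} + (j-1) \nu \varepsilon + j \varepsilon \quad (1 \le j \le n), \\ \xi ^+_j&= \frac{\nu \varepsilon }{2} + j (1+\nu ) \varepsilon \quad (0 \le j \le n-1), \qquad \xi ^+_n = H. \end{aligned}$$

With this indexing, \(I_j\) occupies the heights \((\xi ^-_j, \xi ^+_j)\) and \(C_j\) the heights \((\xi ^+_{j-1}, \xi ^-_j)\), and indeed \(\xi ^-_j - \xi ^+_{j-1} = \varepsilon \), the thickness of a barrier.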

Now, let z belong to some \(C_j\). This means that \(z \in (\xi ^+_{j-1}, \xi ^-_j )\).

Then, \({\tilde{u}}_{\varepsilon }\) is given by

For \(z \in I_j\) for some j we set \({\tilde{u}}_{\varepsilon }(x,z,t)=u_{\varepsilon }(x,z,t)\).

First of all, note that the so constructed extensions are continuous. Moreover, property (i) is obviously true.

Next, we shall verify the other claimed properties.

The \(L^p\)-regularity follows immediately from the fact that \(u_{\varepsilon }\) are uniformly bounded in \(L^{\infty }\). Thus, we have

where C does not depend on \(\varepsilon \). Here, we used also the fact that \(\varPsi _+\) and \(\varPsi _-\) are bounded by 1.

In summary, we have shown (iii) and even more, namely that the extensions are uniformly bounded in any \(L^p\).

Note also that we can estimate as well

where the constants C may depend on the size of \(\varOmega _0\), the length T of the time interval and the parameter \(\nu \) but do not depend on \(\varepsilon \).

From this observation, the Lipschitz property (iv) follows immediately from the corresponding property of \(u_{\varepsilon }\), see again Proposition 1.

It remains to prove property (ii). First, we observe that from the definition of \({\tilde{u}}_{\varepsilon }\) and the properties of \(u_{\varepsilon }\) it follows immediately

where again the constants may depend on the size of \(\varOmega _0\) and the parameter \(\nu \) but do not depend on \(\varepsilon \).

We are left with the derivative in z-direction.

Note that on the \(I_j\) we have \({\tilde{u}}_{\varepsilon ,z}= u_{\varepsilon ,z}\), and the integrability, restricted to \(\bigcup _j I_j\), follows from the corresponding property of \(u_{\varepsilon }\) and Hölder’s inequality.

For the z-derivative and \(z \in C_j\) for some j we find

For the first two terms, the claimed integrability in \(L^2(L^1)\) follows again from the integrability in \(L^2(L^1)\) of \(u_{\varepsilon }\) and Hölder’s inequality.

It remains to estimate

This quantity can be reformulated—up to a constant which does not depend on \(\varepsilon \)—as

At this point, we distinguish four cases:

- (i) The index j is such that \({\mathscr {B}}_{j,l}= {\mathscr {B}}_{j+1,l}={\mathscr {B}}_{j,r}= {\mathscr {B}}_{j+1,r} =0\).
- (ii) The index j is such that \({\mathscr {B}}_{j,l}=1= {\mathscr {B}}_{j+1,l}\).
- (iii) The index j is such that \({\mathscr {B}}_{j,r}=1= {\mathscr {B}}_{j+1,r}\).
- (iv) None of the conditions in (i) to (iii) holds.

We observe that due to our hypothesis, the number of indices j such that case (iv) applies is finite and independent of \(\varepsilon \).

For these cases we exploit the fact that \({\tilde{u}}_{\varepsilon }\) are uniformly bounded in \(L^{\infty }\). Moreover, the size of the region \(I_j \cap \left\{ -R + \varepsilon< x < R - \varepsilon \right\} \) is proportional to \(\varepsilon \). Altogether we see that the \(L^1\)-norm on these \(I_j\) is uniformly bounded by a constant which is independent of \(\varepsilon \).

For the cases (i) to (iii), by Hölder’s inequality the desired uniform bound follows immediately once we can show that

and

Thanks to Lemma 10 below the quantities on the left-hand sides can be estimated as follows

and

Using Lemma 11 below, Proposition 1, and the fact that the total number of horizontal barriers, and thus also the number of free spaces between two such barriers, is bounded by \(\frac{H\theta _0}{\varepsilon }\), the conclusion follows easily. \(\square \)

Lemma 10

With the same notation as before we have the following assertions.

- (i) For j such that \({\mathscr {B}}_{j,r}= {\mathscr {B}}_{j+1,r} = {\mathscr {B}}_{j,l}= {\mathscr {B}}_{j+1,l} =0\) it holds

  $$\begin{aligned}&\int _{I_j,T} \vert u(x, z + (1 + \nu )\varepsilon ,t) - u(x,z,t) \vert ^2 \; dx dz \, dt \\&\quad \le C \int _{\partial I_j \cap \left\{ \vert x \vert = R \right\} ,T} \vert u(x, z + (1 + \nu )\varepsilon ,t) - u(x,z,t) \vert ^2 \;dz \, dt + C \varepsilon ^3. \end{aligned}$$

- (ii) For j such that \({\mathscr {B}}_{j,l}=1= {\mathscr {B}}_{j+1,l}\) it holds

  $$\begin{aligned}&\int _{I_j,T} \vert u(x, z + (1 + \nu )\varepsilon ,t) - u(x,z,t) \vert ^2 \; dx dz \, dt \\&\quad \le C \int _{\partial I_j \cap \left\{ x= R \right\} ,T} \vert u(x, z + (1 + \nu )\varepsilon ,t) - u(x,z,t) \vert ^2 \;dz \, dt+ C \varepsilon ^3. \end{aligned}$$

- (iii) For j such that \({\mathscr {B}}_{j,r}=1= {\mathscr {B}}_{j+1,r}\) it holds

  $$\begin{aligned}&\int _{I_j,T} \vert u(x, z + (1 + \nu )\varepsilon ,t) - u(x,z,t) \vert ^2 \; dx dz \, dt \\&\quad \le C \int _{\partial I_j \cap \left\{ x= -R \right\} ,T} \vert u(x, z + (1 + \nu )\varepsilon ,t) - u(x,z,t) \vert ^2 \;dz \, dt + C \varepsilon ^3. \end{aligned}$$

In all cases the constant C does not depend on \(\varepsilon \). It depends only on the initial data and \(\nu \).

Proof

The assertion of this lemma is proved along the same lines as the corresponding assertion (Lemma A2.1) in [3]. In the cases (ii) and (iii) one just has to look at the following comparison problem

respectively

In addition, due to the fact that we allow initial data \(u_0\) which do not necessarily have to be constant the starting point is in each case

respectively

where \(\varphi \) is a solution of the comparison problem.

The first terms on the right-hand sides are treated as in Lemma A2.1 in [3], and for the second terms on the right-hand sides we use the regularity of the initial data. \(\square \)

Lemma 11

Under the same notation as before, it holds

where the constant C is independent of \(\varepsilon \).

Proof

The proof of this assertion follows the same lines as the analogous assertion in [3] (see chapter 9 in the cited article). \(\square \)

4.2 The limit in the interior region \((-R,R) \times (0,H)\)

In order to study the limit behavior in the region \((-R,R) \times (0,H)\), we use the weak formulation of the \(\varepsilon \)-problem and use suitable test functions.

The strategy is to look at test functions \(\varphi \) which satisfy, for a fixed but arbitrarily small \(\delta > 0\),

- $$\begin{aligned} \varphi \in C^{2}_0(\varOmega _{0,T}) \end{aligned}$$

- $$\begin{aligned} x \mapsto \varphi (x,z,t) \in C^{2}_0(\left\{ \vert x \vert \le R-\delta \right\} ) \end{aligned}$$

Note that in particular we have \(\varphi (t=0)=0=\varphi (t=T)\).

For such test functions, the weak formulation of the \(\varepsilon \)-problem yields (recall also that in the region \(\left\{ \vert x \vert < R \right\} \) we have \(a_{\varepsilon }=1\))

Our goal is to study the limit as \(\varepsilon \rightarrow 0\). In order to achieve this we will study each term in the above equation separately.

Note that without loss of generality we may assume that \(\varepsilon \le \delta \).

Limit of \(- \int _0^T \int _{\varOmega _{\varepsilon } \cap \left\{ \vert x \vert < R - \delta \right\} } u_{\varepsilon }\varphi _t\):

Note that this term can be written as follows using the extensions \({\tilde{u}}_{\varepsilon }\)

In this expression, we have the following properties:

On one hand, on \(\varOmega _0 \cap \left\{ \vert x \vert < R- \delta \right\} \) we can see our extensions \({\tilde{u}}_{\varepsilon }\) as Lipschitz maps in \(L^2(0,T; L^p) \subset L^p(0,T; L^p)\) where \(p < 1^*=2\) and where the latter space can be identified with \(L^p((\varOmega _0 \cap \left\{ \vert x \vert < R- \delta \right\} )_{,T})\) by Fubini–Tonelli.
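The inclusion \(L^2(0,T; L^p) \subset L^p(0,T; L^p)\) used here is a consequence of Hölder’s inequality in time, since \(p < 2\) and \(T < \infty \). As a short check, write \(D:= \varOmega _0 \cap \left\{ \vert x \vert < R- \delta \right\} \) (the letter D is introduced here only for brevity) and let \(f \in L^2(0,T; L^p(D))\). Applying Hölder’s inequality with the exponents \(\frac{2}{p}\) and \(\frac{2}{2-p}\) gives

$$\begin{aligned} \int _0^T \Vert f(t) \Vert _{L^p(D)}^p \, dt \le T^{1 - \frac{p}{2}} \Big ( \int _0^T \Vert f(t) \Vert _{L^p(D)}^2 \, dt \Big )^{\frac{p}{2}} . \end{aligned}$$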

Due to the properties of \({\tilde{u}}_{\varepsilon }\) established in Proposition 9, the Lebesgue space version of the Arzelà–Ascoli theorem due to Riesz–Fréchet–Kolmogorov (see, e.g., [8]) provides sufficient (pre-)compactness to pass to the limit in the products appearing in (2), i.e., we can extract a strongly convergent subsequence, which we still denote by \(\left\{ {\tilde{u}}_{\varepsilon } \right\} \).

In view of the uniqueness of the limit, which we will establish later on, passing to a subsequence does not give rise to any problem. On the other hand, recall from Lemma 8 that \(\sum _j \chi _{I_j}\) converges weakly in \(L^{p'}\).
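The passage to the limit in such a product rests on the standard fact that the product of a strongly convergent and a weakly convergent sequence converges when tested against bounded functions. As a sketch, let \(1< p < 2\), let \(f_{\varepsilon } \rightarrow f\) strongly in \(L^p\), let \(g_{\varepsilon } \rightharpoonup g\) weakly in \(L^{p'}\), and let \(\psi \) be bounded (the names \(f_{\varepsilon }\), \(g_{\varepsilon }\), \(\psi \) are generic placeholders chosen here; in the present setting they would presumably correspond to \({\tilde{u}}_{\varepsilon }\), \(\sum _j \chi _{I_j}\) and a derivative of \(\varphi \)). Then

$$\begin{aligned} \int f_{\varepsilon } g_{\varepsilon } \psi - \int f g \psi&= \int (f_{\varepsilon } - f) g_{\varepsilon } \psi + \int f (g_{\varepsilon } - g) \psi , \\ \Big \vert \int (f_{\varepsilon } - f) g_{\varepsilon } \psi \Big \vert&\le \Vert f_{\varepsilon } - f \Vert _{L^p} \, \Vert g_{\varepsilon } \Vert _{L^{p'}} \, \Vert \psi \Vert _{L^{\infty }} \rightarrow 0 . \end{aligned}$$

The first term tends to zero because weakly convergent sequences are bounded, and the second term tends to zero by the definition of weak convergence, since \(f \psi \in L^p\).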

Thus, we find that

Remark

By first proving an additional improved time-regularity assertion for \(u_{\varepsilon }\) and combining it with the extensions constructed above, one could alternatively apply the Aubin–Lions lemma (see, for instance, [20] or [25]) to deduce the existence of a strongly convergent subsequence in \(L^p\).

Limit of \(- \int _0^T \int _{\partial \varOmega _{\varepsilon } \cap \left\{ \vert x \vert < R - \delta \right\} } ( \nabla u_{\varepsilon } \cdot n ) \varphi \):

In a first step, we will rewrite this term as

The terms I and II vanish identically (for all \(\varepsilon \)) due to the boundary conditions we impose on the boundaries of the horizontal barriers.

As for the terms III and IV, note that for \(\varepsilon < \delta \) we have

and

Thus, the terms III and IV vanish in the limit as \(\varepsilon \rightarrow 0\).

Summarized we have

Limit of \(\int _0^T \int _{\varOmega _{\varepsilon } \cap \left\{ \vert x \vert < R - \delta \right\} } u_{\varepsilon ,x} \varphi _x \):

First of all, we integrate by parts once more. Exploiting the fact that we look at derivatives in x-direction and the properties of our test function \(\varphi \), this leads to

And again, we rewrite the term we have found using the extensions \({\tilde{u}}_{\varepsilon }\)

Following the same lines as in the calculation of the limit of \(- \int _0^T \int _{\varOmega _{\varepsilon } \cap \left\{ \vert x \vert < R - \delta \right\} } u_{\varepsilon }\varphi _t\) we finally find

Limit of \(\int _0^T \int _{\varOmega _{\varepsilon } \cap \left\{ \vert x \vert < R - \delta \right\} } u_{\varepsilon ,z} \varphi _z\):

Here too, we will slightly rewrite the term before passing to the limit. In the present case, we write

In order to calculate the limit, we have to carefully analyze the behavior of \(\sum _j \chi _{I_j} u_{\varepsilon ,z}\). In fact, we have

Lemma 12

It holds

Proof

First of all, recall that

where C does not depend on \(\varepsilon \).

Thus, there exists a subsequence, still denoted by \(\left\{ \sum _j \chi _{I_j} u_{\varepsilon ,z} \right\} _{\varepsilon }\), which converges weakly to a limit g.

Next, we will take into account the differential equation involving \(u_{\varepsilon ,z}\), (2), and look at two particular test functions. Doing so, we obtain two terms which are both equal to zero, and a linear combination of them leads to the conclusion that \(g=0\).

More precisely, in (2) we will look at \(\phi _1(x,z):= \varphi \int _0^z \sum _j \chi _{C_j}\) and \(\phi _2(x,z)= \varphi z\) where \(\varphi \) is an arbitrary test function.

First of all, we observe that

This follows from a straightforward calculation.

Using this information, we turn our attention to (2) and take into account also the results from the preceding steps.

For \(\phi _1\) we get

Observe here that the last term on the right-hand side vanishes identically.

For the other test function \(\phi _2\) we find

Finally, we look at the difference of these two expressions. This immediately leads to the conclusion—by the fundamental lemma of the calculus of variations—that

Thus, we have

\(\square \)

Summary:

For this interior region \((-R,R) \times [0,H]\) and for test functions with the properties described above we find

Note that so far \(\delta \) was fixed. But the arguments do not depend on the choice of \(\delta \). We can choose this parameter arbitrarily, and thus by an exhaustion of \(\varOmega _0\) by compact sets we can conclude.

4.3 The full limit

In this subsection we will look at another class of test functions, namely at test functions whose support contains \(\varOmega _0\), but which do not depend on x for \(\vert x \vert \ge R\) and which vanish only at \(t=T\).

In doing so we not only capture the limiting behavior in the interior of \(\varOmega _0\) (as we did in the previous subsections) but we take into account also the behavior in the outer shell, respectively, on the boundary \(\partial \varOmega _0\).

In addition, we will make use of the information we have already found before.

We start with the weak formulation of the \(\varepsilon \)-problem

Rearranging and splitting terms we get the equivalent form (recall also the definition of \(a_{\varepsilon }\))

Again, our goal is to study the limit as \(\varepsilon \rightarrow 0\).

For the terms in the first row, we already know their limit

In addition, due to the boundary conditions we impose the term in the second row vanishes identically.

So, it remains to calculate the limit as \(\varepsilon \rightarrow 0\) of

In what follows, we will look at \(S_{\varepsilon ,r}\). The calculation of the limit in \(S_{\varepsilon ,l}\) is completely analogous.

Note that the assumption we made about the test function \(\varphi \) implies that in \(S_{\varepsilon }\) we have

Now, we introduce the new function

Similarly, we define \(v_{\varepsilon ,l}\).

For these functions we have the following properties: Due to the energy estimates and the time-regularity established in Proposition 1, \(\left\{ v_{\varepsilon ,r} \right\} \) and \(\left\{ v_{\varepsilon ,l} \right\} \) are precompact in \(L^2(S_{r,T})\), respectively, in \(L^2(S_{l,T})\) (recall the compactness criterion of Riesz–Fréchet–Kolmogorov).

So there exist strongly convergent subsequences which we still denote by \(\left\{ v_{\varepsilon ,r} \right\} \) and \(\left\{ v_{\varepsilon ,l} \right\} \) since in view of the uniqueness passing to a subsequence is immaterial.

Note that the derivatives of \(v_{\varepsilon ,r}\), respectively, of \(v_{\varepsilon ,l}\) converge weakly in \(L^2\).

Now, we will calculate the limits as \(\varepsilon \rightarrow 0\) of the term in (3).

Limit of \(- \int _0^T \int _{S_{\varepsilon ,r}} \frac{\varepsilon _0}{\varepsilon } u_{\varepsilon } \varphi _t\):

Rewriting this term leads to

Limit of \(\int _0^T \int _{S_{\varepsilon ,r}} \frac{\varepsilon _0}{\varepsilon } u_{\varepsilon ,z} \varphi _z\):

Following the same lines as in the previous step we get

Note that due to the properties of our test function \(\varphi \) the term \(\int _0^T \int _{S_{\varepsilon ,r}} \frac{\varepsilon _0}{\varepsilon } u_{\varepsilon ,x} \varphi _x\) vanishes identically.

Limit of \(-\int _{S_{\varepsilon ,r}} \frac{\varepsilon _0}{\varepsilon }u \varphi (x,z,0)\):

For the remaining term \(-\int _{S_{\varepsilon ,r}} \frac{\varepsilon _0}{\varepsilon } u \varphi (x,z,0)\) we proceed as follows.

In a first step we observe that we have

since \(- \frac{(\sigma \varepsilon )^2}{\sigma \varepsilon } \sup \vert \nabla u(t=0) \vert \le \frac{1}{\sigma \varepsilon }\int _R^{R+ \sigma \varepsilon } \Big ( \int _0^{x-R} \frac{\partial }{\partial x} u(R+s,z,0) \, ds \Big ) \; dx \le \frac{(\sigma \varepsilon )^2}{\sigma \varepsilon } \sup \vert \nabla u(t=0) \vert . \)
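The two-sided bound can be checked directly, reading \(u(\cdot ,\cdot ,0)\) as the (sufficiently regular) initial datum. By the fundamental theorem of calculus the inner integral equals \(u(x,z,0) - u(R,z,0)\), and its absolute value is at most \((x-R) \sup \vert \nabla u(t=0) \vert \). Averaging over \(x \in (R, R+\sigma \varepsilon )\) then gives

$$\begin{aligned} \Big \vert \frac{1}{\sigma \varepsilon }\int _R^{R+ \sigma \varepsilon } \Big ( \int _0^{x-R} \frac{\partial }{\partial x} u(R+s,z,0) \, ds \Big ) \; dx \Big \vert&\le \frac{1}{\sigma \varepsilon }\int _R^{R+ \sigma \varepsilon } (x-R) \; dx \; \sup \vert \nabla u(t=0) \vert \\&= \frac{\sigma \varepsilon }{2} \sup \vert \nabla u(t=0) \vert \le \sigma \varepsilon \sup \vert \nabla u(t=0) \vert , \end{aligned}$$

which tends to zero as \(\varepsilon \rightarrow 0\).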

Thus,

Summary:

Altogether we have seen that the limit of (3) is

where \(S:= \left\{ x=-R \right\} \cup \left\{ x=R \right\} \) and

Finally, we have the full limit

which is exactly the weak formulation of the limit equation we claimed in our main theorem. \(\square \)

4.4 Derivation of the “interior boundary condition”

In this subsection we will show that

and

This property is an immediate consequence of the construction of the extensions \({\tilde{u}}_{\varepsilon }\).

Note that in the interior region we have continuity up to the boundary and the boundary conditions hold in the classical sense (see, e.g., [19]) and can be seen as special cases of constant Neumann boundary conditions on \(\left\{ x = -R \right\} \cap J_l\), respectively, on \(\left\{ x = R \right\} \cap J_r\).

Moreover, the functions \(\varPsi _+\) and \(\varPsi _-\) are continuous.

Altogether we see that the extensions we have constructed above have derivatives in x-direction which are continuous up to the boundary.

In particular, since the boundary conditions we imposed at level \(\varepsilon \) are homogeneous Neumann boundary data, the way in which the extensions \({\tilde{u}}_{\varepsilon }\) are constructed [see Lemma 9, in particular equation (2)] implies that the extensions inherit the same boundary conditions, i.e., that the extensions satisfy homogeneous Neumann boundary data on \(\left\{ x = -R \right\} \cap J_l\), respectively, on \(\left\{ x = R \right\} \cap J_r\). In a first step, the boundary data for the extensions \({\tilde{u}}_{\varepsilon }\) hold in the sense of variational boundary conditions. In a second step, the regularity we have for \(u_{\varepsilon }\) can in fact be used to give sense to this boundary condition in the classical sense. Note that in x-direction the extensions inherit the same regularity as the original functions \(u_{\varepsilon }\).

4.5 Proof of the “transition condition”

In a first step one has to show that u has a trace on \(\left\{ \vert x \vert =R \right\} \). But this can be achieved as in the article of Andreucci et al.

Once we know about the existence of a trace, we can show the coincidence with v on the part which is “morally free of vertical barriers.” The proof of the transition condition is then basically the same as the corresponding assertion in the above-cited article [3].

More precisely, in the complement of the support of \(J_r\), respectively, of \(J_l\), by passing if necessary to a further subsequence we can assume that there are no vertical barriers—recall also that we excluded closed compartments, i.e., situations such that \({\mathscr {B}}_{j,l}={\mathscr {B}}_{j,r}=1\).

This means that we have the same geometry as Andreucci et al. (see [3])—at least on one side.

Then it suffices to show that

But this follows immediately from the observation that we can rewrite

(and similarly for the right boundary). Here, the first addend can be rewritten using the (weak) fundamental theorem of calculus. Once this is done, the a priori estimates we have at hand in combination with Hölder’s inequality imply that this term vanishes in the limit. The second addend tends to zero due to the strong convergence of \(v_{\varepsilon ,l}\), which in particular implies almost everywhere convergence (upon possibly passing to a further subsequence).

5 Proofs of the additional properties of the solution to the homogenized problem

In this section, we will give the proofs of the additional properties of the solution of the homogenized problem.

First of all, we will show the “time-regularity.” More precisely we will prove Proposition 3.

Proof of Proposition 3

First observe that it remains only to control \(u_t\) and \(v_t\) in \(L^2\).

There are two possible approaches.

Either one shows first the corresponding assertion at level \(\varepsilon \), shows that the time derivatives are uniformly bounded in norm and deduces the desired assertion from the weak convergence and uniqueness of weak limits.

The second one is to look directly at the limit problem in weak formulation. Then u and v are replaced by their Steklov time averages \(u^h\) and \(v^h\) and multiplied by a suitable function of t. Next, the so constructed function can be used as test function and one observes that the same equation as before holds—up to an error of order O(h). This finally leads to the desired estimates of the time derivatives. This approach is explained, e.g., in [3]. \(\square \)
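Remark

For orientation, we recall the Steklov time average in its standard forward form; this is the textbook definition and is stated here as an assumption, since the precise variant used in [3] may differ (e.g., a backward average). For \(0< h < T\) and \(0< t < T-h\) one sets

$$\begin{aligned} u^h(x,z,t):= \frac{1}{h} \int _t^{t+h} u(x,z,s) \, ds , \qquad \partial _t u^h(x,z,t) = \frac{u(x,z,t+h) - u(x,z,t)}{h} . \end{aligned}$$

The point of this regularization is that \(u^h\) is differentiable in time even if u is not, while \(u^h \rightarrow u\) in \(L^2\) as \(h \rightarrow 0\) whenever \(u \in L^2\).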

Next, we will study the “space-regularity.”

Proof of Proposition 4 and the corresponding assertion for u

Recall that we can uniformly control the first derivative in x-direction of our extensions \({\tilde{u}}_{\varepsilon }\) on \(\varOmega _0\) (see Lemma 9)—if necessary by a further extension by reflection at the boundaries \(l^i_{j,l}\), respectively, \(l^i_{j,r}\).

From this we can pass to the limit and find the desired assertion for the x-derivative of u.

The control of \(v_z\) is proved analogously. \(\square \)

Next, we study the limit behavior of the second derivatives in x-direction. More precisely, we will prove Proposition 5.

Proof of Proposition 5

From the equation of the “interior limit” and the fact that \(u_t \in L^2\) we have

for any test function \(\varphi \in C^1_0(\varOmega _{0,T})\) vanishing for \(t=0\) and \(t=T\). Thus, T can be extended by density to a linear functional on \(L^2(\varOmega _{0,T})\). Finally, by the Riesz theorem we conclude that there exists a function \(l \in L^2(\varOmega _{0,T})\) such that

for all \(\varphi \in L^2(\varOmega _{0,T})\). But this implies that \(u_{xx} \in L^2(\varOmega _{0,T})\). \(\square \)

Remark

A similar argument to the above proof shows that

Our next point is to prove the uniqueness of the limit, i.e., we will give a proof of Proposition 6.

Proof of Proposition 6

By contradiction we assume that there are two solutions of the limit problem. Using the weak formulation of the limit and exploiting the properties of the limit u, v we find a contradiction.

More precisely, we assume that there are two solutions to the limit problem, \(\left\{ u_1,v_1 \right\} \) and \(\left\{ u_2, v_2 \right\} \) satisfying the same initial and boundary condition.

Then we use the weak formulation of our limit problem which leads to the following equalities,

for \(k=1,2\).

Next, look at the difference of these two equalities

Furthermore, we can take \((u_1 - u_2)\phi (z)\) as a test function where the cutoff function \(\phi \) depends only on z, is positive and satisfies \(\phi \equiv 0 \; \text {on } \overline{(J_l^c)}\).

This in particular leads to

Thus, rewriting the remaining terms, integrating by parts where suitable, taking into account the equation satisfied by v and the fact that \(u_x=0\) on \(J_l\) we are left with

respectively

Then by a careful inspection of the signs of all the terms in the above equation and by standard arguments the claimed uniqueness follows for the part \((-R,R) \times J_l\).

More precisely, the terms on the left-hand side are all positive, whereas the term on the right-hand side has no definite sign and could even be made negative (recall the freedom we have for \(\phi \)). Thus, both sides have to be equal to zero.

In a next step, similar arguments yield the uniqueness on \((-R,R) \times J_r\) and finally on the whole domain \((-R,R) \times (0,H)\). \(\square \)

Remark

Alternatively, the assertion of Proposition 6 could be derived by a Gronwall-type inequality.
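A sketch of how such an argument typically runs, under the assumption (not verified here) that the weak formulation yields a differential inequality for a suitable nonnegative energy y(t) of the difference \(\left\{ u_1 - u_2, v_1 - v_2 \right\} \), for instance a sum of squared \(L^2\)-norms: if y is absolutely continuous and \(y'(t) \le C y(t)\) for almost every \(t \in (0,T)\), then Gronwall’s inequality gives

$$\begin{aligned} 0 \le y(t) \le y(0) \, e^{Ct} \quad \text {for all } t \in [0,T] . \end{aligned}$$

Since both solutions satisfy the same initial condition, \(y(0)=0\) and hence \(y \equiv 0\), i.e., the two solutions coincide.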

Finally, we prove that as for the \(\varepsilon \)-problem, the solution of the limit problem is bounded and positive.

Proof of Proposition 7

The claimed property follows immediately from the property that for all \(\varepsilon \) the solution \(u_{\varepsilon }\) (respectively \(v_{\varepsilon }\)) is positive and bounded (by the fact that we have strong convergence in \(L^2\) and thus also pointwise convergence almost everywhere—if necessary by passing to a further subsequence). \(\square \)

Remark

If one combines the proof of Proposition 7 with the proof of Proposition 3 one can deduce that the positivity and boundedness hold everywhere.

6 Quantitative results

In the present section we will focus on the three models A, B and M (see Sect. 2.1 for their definition).

First of all, we look at stationary solutions (also called steady states), i.e., at solutions for which \(\partial _t u=0\) and \(\partial _t v=0\).

Doing so, one can easily observe that by the conservation of mass

- for model A this stationary solution \((u_{\infty }, v_{\infty })\) is given by

  $$\begin{aligned} \left\{ \begin{array}{ll} v_{l,\infty } (z) &{}\equiv \frac{1}{H}\int _{S_l} v_l(t=0) \quad \text {independently of } z \\ u_{\infty } (x,z) = v_{r,\infty }(z) &{}\equiv \frac{1}{\vert \varOmega _0 \vert } \int _{\varOmega _0} u_0 \quad \text {independently of } x \text { and } z \end{array}\right. \end{aligned}$$

- for models B and M, due to the transition condition the stationary solution \((u_{\infty }, v_{\infty })\) is given by

  $$\begin{aligned} u_{\infty } (x,z) = v_{l,\infty }(z)= v_{r,\infty }(z)\equiv \frac{1}{\vert \varOmega _0 \vert } \int _{\varOmega _0} u_0 \end{aligned}$$

This already gives a first indication of how the limit models differ.

A next attempt to investigate or quantify the differences of the various models would be to freeze the time derivative for one equation and keep the second equation unchanged.

The weak point in this idea is that the transition condition \(u=v\) on S would on one hand lead to a time-dependent function and on the other hand to a time-independent function.

A further indication that the different models indeed lead to different behaviors is given by the following observation.

The classical energy method for v leads to an estimate of the form

In this latter formula we can see the influence of the norm of \(\vert \vert u_x \vert \vert _{L^2(0,T;L^2(S))}\), in particular whether it vanishes identically or not.

But a more striking difference arises from the following observation.

- In the case of model A it holds

  $$\begin{aligned} v_{l,t} - v_{l,zz} = 0 \; \text {on }S_l \end{aligned}$$

  with homogeneous Neumann boundary condition. In this situation one can easily verify that the total amount of “mass” \(\int _{S_l} v_l\) is constant in time. On the right side, \(S_r\), the concentration varies with time since we have a nontrivial source term \(u_x\) (unless the initial condition is identically zero).

- In models B and M we have nontrivial source terms on both sides, \(S_l\) and \(S_r\). Thus, the concentrations on both sides vary with time.
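The conservation of mass on \(S_l\) in model A can be verified in one line. Identifying \(S_l\) with the interval \((0,H)\) in the z-variable and assuming enough regularity to differentiate under the integral sign, the equation and the homogeneous Neumann condition give

$$\begin{aligned} \frac{d}{dt} \int _0^H v_l(z,t) \, dz = \int _0^H v_{l,zz}(z,t) \, dz = v_{l,z}(H,t) - v_{l,z}(0,t) = 0 . \end{aligned}$$

When a source term is present, the same computation produces in addition the integral of that source term over the shell, which in general does not vanish.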

Summarized, we see that the additional “interior boundary condition”—in dependence on the precise configuration of the vertical barriers—may lead to the separation of one side from the interior region \(\varOmega _0\).
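The decoupled behavior of the left shell in model A can also be observed numerically. The following is a minimal sketch, not part of the analysis above: it integrates \(v_t = v_{zz}\) on \((0,H)\) with zero-flux boundary conditions by an explicit finite-volume scheme and reports the total mass and the distance to the mean value. The choices \(H=1\), the grid size, the time step and the initial profile \(1+\cos (\pi z/H)\) are assumptions made only for illustration, and all function names are hypothetical.

```python
import math


def neumann_heat_step(v, dz, dt):
    """Advance v_t = v_zz by one explicit step with zero flux at both ends."""
    n = len(v)
    # flux[i] approximates v_z at the interface between cells i-1 and i;
    # the two boundary interfaces keep flux 0 (homogeneous Neumann condition).
    flux = [0.0] * (n + 1)
    for i in range(1, n):
        flux[i] = (v[i] - v[i - 1]) / dz
    return [v[i] + dt * (flux[i + 1] - flux[i]) / dz for i in range(n)]


def total_mass(v, dz):
    """Midpoint-rule approximation of the integral of v over (0, H)."""
    return sum(v) * dz


def simulate(v0, H, t_end):
    """Run the explicit scheme up to (approximately) time t_end."""
    dz = H / len(v0)
    dt = 0.4 * dz * dz  # respects the stability bound dt <= dz^2 / 2
    v = list(v0)
    for _ in range(int(t_end / dt)):
        v = neumann_heat_step(v, dz, dt)
    return v


if __name__ == "__main__":
    H, n = 1.0, 50
    dz = H / n
    # Hypothetical initial profile on the left shell, sampled at cell centres.
    v0 = [1.0 + math.cos(math.pi * (i + 0.5) * dz / H) for i in range(n)]
    v = simulate(v0, H, t_end=1.0)
    print("mass at t=0:", total_mass(v0, dz))
    print("mass at t=1:", total_mass(v, dz))
    print("max deviation from mean:", max(abs(x - 1.0) for x in v))
```

Because the discrete fluxes telescope, the scheme conserves the discrete mass up to rounding, mirroring the continuous statement, and the profile relaxes toward the constant \(\frac{1}{H}\int _{S_l} v_l(t=0)\), in agreement with the stationary solution of model A.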

7 Outlook

The obvious question of extending our model to a three-dimensional one as well as more detailed numerical simulations and even further models is subject to ongoing research.

Of course, it would be possible also to think of other—nonlinear—relations between the horizontal distance of the obstacles to the outer cylinder \(\sigma \varepsilon \), the vertical distance between the “layers” of the horizontal obstacles \(\nu \varepsilon \) and \(\varepsilon \).

One restriction on the Ansatz is that the thickness of the outer shell \(\sigma \varepsilon \) should have the same scaling as the prefactor \(a_{\varepsilon }\). This follows from a simple and straightforward calculation.

In addition to the point mentioned above, the possible interpretation from a biological point of view is the subject of ongoing research.

References

Andreucci, D., Bisegna, P., DiBenedetto, E.: Homogenization and concentration of capacity in the rod outer segment with incisures. Appl. Anal. 85(1–3), 303–331 (2006)

Andreucci, D., Bisegna, P., DiBenedetto, E.: Homogenization and concentrated capacity in reticular almost disconnected structures. C. R. Math. Acad. Sci. Paris 335(4), 329–332 (2002)

Andreucci, D., Bisegna, P., DiBenedetto, E.: Homogenization and concentrated capacity for the heat equation with non-linear variational data in reticular almost disconnected structures and applications to visual transduction. Ann. Mat. Pura Appl. 182(4), 375–407 (2003)

Andreucci, D., Bisegna, P., DiBenedetto, E.: Some mathematical problems in visual transduction. In: Rodrigues, J.F., Seregin, G., Urbano, J.M. (eds.) Trends in Partial Differential Equations of Mathematical Physics. Progress in Nonlinear Differential Equations and Their Applications, vol 61, pp. 65–80. Birkhäuser, Basel (2005)

Andreucci, D., Bisegna, P., Caruso, G., Hamm, H.E., DiBenedetto, E.: Mathematical model of the spatio-temporal dynamics of second messengers in visual transduction. Biophys. J. 85, 1358–1376 (2003)

Bensoussan, A., Lions, J.-L., Papanicolaou, G.: Asymptotic Analysis for Periodic Structures, Studies in Mathematics and its Applications, 5. North-Holland Publishing Co., Amsterdam (1978)

Blanchard, D., Gaudiello, A.: Homogenization of highly oscillating boundaries and reduction of dimension for a monotone problem. ESAIM Control Optim. Calc. Var. 9, 449–460 (2003)

Brezis, H.: Analyse Fonctionnelle. Dunod, Paris (1999)

Brizzi, R., Chalot, J.-P.: Boundary homogenization and Neumann boundary value problem. Ricerche Mat. 46(2), 341–387 (1997)

Cioranescu, D., Donato, P.: An Introduction to Homogenization, Oxford Lecture Series in Mathematics and its Applications, 17. The Clarendon Press, Oxford University Press, New York (1999)

Dancer, E.N.: The effect of domain shape on the number of positive solutions of certain nonlinear equations. J. Differ. Equ. 74, 120–156 (1988)

Dancer, E.N.: The effect of domain shape on the number of positive solutions of certain nonlinear equations. II. J. Differ. Equ. 87, 316–339 (1990)

Dancer, E.N.: Addendum: the effect of domain shape on the number of positive solutions of certain nonlinear equations. II. J. Differ. Equ. 87, 316–339 (1990)

Daners, D.: Domain perturbation for linear and semi-linear boundary value problems. Handb. Differ. Equ.: Station. Part. Differ. Equ. VI, 1–81 (2008). Handb. Differ. Equ., Elsevier/North-Holland, Amsterdam

DiBenedetto, E.: Partial Differential Equations. Birkhäuser, Switzerland (1995)

DiBenedetto, E.: Real Analysis. Birkhäuser, Switzerland (2002)

Diestel, J., Uhl Jr., J.J.: Vector Measures. American Mathematical Society, Providence (1977)

Evans, L.C.: Partial Differential Equations. American Mathematical Society, Providence (1998)

Friedman, A.: Partial differential equations of parabolic type. Dover books on mathematics, (2008)

Folland, G.B.: Real analysis. Modern techniques and their applications. Pure and Applied Mathematics. Wiley, New York (1984)

Gilbarg, D., Trudinger, N.S.: Elliptic Partial Differential Equations of Second Order. Springer, Berlin (2001)

Hale, J.K., Vegas, J.: A nonlinear parabolic equation with varying domain. Arch. Rational Mech. Anal. 86(2), 99–123 (1984)

Jikov, V.V., Kozlov, S.M., Oleinik, O.A.: Homogenization of Differential Operators and Integral Functionals (Translated from the Russian by G. A. Yosifian). Springer, Berlin (1994)

Ladyženskaja, O.A., Solonnikov, V.A., Ural’ceva, N.N.: Linear and Quasilinear Equations of Parabolic Type. American Mathematical Society, Providence (1968)

Lions, J.-L., Magenes, E.: Problèmes aux limites non homogènes. Dunod, Paris (1968)

Neuss-Radu, M., Jäger, W.: Effective transmission conditions for reaction-diffusion processes in domains separated by an interface. SIAM J. Math. Anal. 39(3), 687–720 (2007)

Oberbichler, D.: Homogenization and concentration capacity for the heat equation for the case of a certain reticular almost disconnected structure, Diploma Thesis (2007)

Tartar, L.: The General Theory of Homogenization: A Personalized Introduction. Springer, Heidelberg (2009)

Temam, R.: Navier-Stokes Equations. North Holland, Amsterdam (1977)

Vegas, J.M.: Bifurcation caused by perturbing the domain in an elliptic equation. J. Differ. Equ. 48, 189–226 (1983)

Acknowledgments

The author would like to thank Professor A. Stevens at the University of Munster, Germany, for having motivated the present problem and for many interesting discussions about mathematical problems motivated from the life sciences. Moreover, the author would like to thank the University of Munster where the present work was started for the friendly and nice working atmosphere. In addition, the author would like to thank also the unknown referee for many indications of possible improvements in the manuscript in order to make the presentation more clear and reader-friendly.


Additional information

Research partially supported by Swiss National Science Foundation Grant PBEZP2_137396.


Cite this article

Keller, L.G.A. Homogenization and concentrated capacity for the heat equation with two kinds of microstructures: uniform cases. Annali di Matematica 196, 791–818 (2017). https://doi.org/10.1007/s10231-016-0596-1
