Abstract

Background

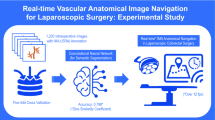

In laparoscopy, the digital camera offers surgeons the opportunity to receive support from image-guided surgery systems. Such systems require image understanding, that is, the ability of a computer to interpret what the laparoscope sees. Image understanding has recently progressed owing to the emergence of artificial intelligence, especially deep learning techniques. However, the state of the art of deep learning in gynaecology offers only image-based detection, which reports the presence or absence of an anatomical structure without finding its location. The localisation problem can be addressed by semantic segmentation, which provides both the detection and the pixel-level location of a structure in an image. State-of-the-art results in semantic segmentation are achieved by deep learning, which requires a massive amount of annotated data. We propose the first dataset dedicated to this task and the first evaluation of deep learning-based semantic segmentation in gynaecology.

Methods

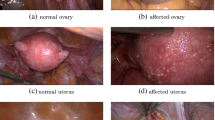

We used the deep learning method called Mask R-CNN. Our dataset consists of 461 laparoscopic images manually annotated with three classes: uterus, ovaries and surgical tools. We split the dataset into 361 images to train Mask R-CNN and 100 images to evaluate its performance.
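The 361/100 split can be illustrated with a short sketch. The abstract does not say how images were assigned to each subset, so the seeded random shuffle, the function name `split_dataset` and the file names below are all assumptions made for illustration only.

```python
import random


def split_dataset(image_ids, n_test=100, seed=0):
    """Shuffle image identifiers and hold out n_test of them for evaluation."""
    ids = list(image_ids)
    if n_test > len(ids):
        raise ValueError("n_test exceeds dataset size")
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(ids)
    return ids[n_test:], ids[:n_test]  # (train, test)


# Hypothetical file names standing in for the 461 annotated images.
image_ids = [f"img_{i:04d}.png" for i in range(461)]
train_ids, test_ids = split_dataset(image_ids, n_test=100)
print(len(train_ids), len(test_ids))  # 361 100
```

In practice, frames taken from the same surgical video would usually be kept in the same subset to avoid leakage between training and evaluation; whether this was done here is not stated.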

Results

Segmentation accuracy is reported as the percentage of overlap between the regions segmented by Mask R-CNN and the manually annotated ones. The accuracy is 84.5%, 29.6% and 54.5% for uterus, ovaries and surgical tools, respectively. Automatic detection of these structures was then inferred from the semantic segmentation results, which led to state-of-the-art detection performance, except for the ovaries. Specifically, the detection accuracy is 97%, 24% and 86% for uterus, ovaries and surgical tools, respectively.
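As a rough illustration of these two measures, the sketch below computes an overlap percentage between a predicted and an annotated binary mask, then derives an image-level detection from the predicted mask. The abstract does not define the overlap formula or the detection rule, so the intersection-over-union definition, the `min_pixels` threshold and both function names are assumptions rather than the authors' method.

```python
def overlap_percentage(pred, truth):
    """Intersection over union of two binary masks, as a percentage."""
    inter = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    if union == 0:
        return 100.0  # both masks empty: treated as full agreement (assumption)
    return 100.0 * inter / union


def is_detected(pred, min_pixels=1):
    """Report a structure as present when its predicted mask has enough pixels."""
    return sum(1 for row in pred for p in row if p) >= min_pixels


# Toy 3x4 masks: 1 marks a pixel labelled as the structure.
pred = [[0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 0, 0, 0]]
truth = [[0, 1, 1, 1],
         [0, 1, 1, 0],
         [0, 0, 0, 0]]

print(overlap_percentage(pred, truth))  # 80.0 (4 shared pixels out of 5 in the union)
print(is_detected(pred))                # True
```

A per-class score over a test set would then be an aggregate of such per-image values, and detection accuracy the fraction of images where the inferred presence matches the annotation.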

Conclusion

Our preliminary results are very promising, given the relatively small size of our initial dataset. The creation of an international surgical database seems essential.

Ethics declarations

Disclosures

S. Madad Zadeh, T. François, L. Calvet, P. Chauvet, M. Canis, A. Bartoli and N. Bourdel have no conflict of interest or financial ties to disclose.

About this article

Cite this article

Madad Zadeh, S., Francois, T., Calvet, L. et al. SurgAI: deep learning for computerized laparoscopic image understanding in gynaecology. Surg Endosc 34, 5377–5383 (2020). https://doi.org/10.1007/s00464-019-07330-8

DOI: https://doi.org/10.1007/s00464-019-07330-8