Abstract

All organisms must be able to adapt to changes in the environment. To this end, they have developed sophisticated regulatory mechanisms to ensure homeostasis. Control engineers, who must design similar regulatory systems, have developed a number of general principles that govern feedback regulation. These lead to constraints which impose trade-offs that arise when developing controllers to minimize the effect of external disturbances on systems. Here, we review some of these trade-offs, particularly Bode’s integral formula. We also highlight its connection to information theory, by showing that the constraints in sensitivity minimization can be cast as limitations on the information transmission through a system, and these have their root in causality. Finally, we look at how these constraints arise in two biological systems: glycolytic oscillations and the energy cost of perfect adaptation in a bacterial chemotactic pathway.


1 Introduction

Crucial to the survival of all organisms is the ability to sense external stimuli and respond. To ensure homeostasis, cells have developed sophisticated regulatory mechanisms whose operation often mirrors those designed by engineers. The connection between these biological and engineering dynamical systems was one of the motivations for Norbert Wiener’s development of cybernetics and has been one of the underpinnings of the systems biology revolution of the past two decades.

It is now widely appreciated that biological systems face the same constraints and trade-offs that are confronted by engineers designing control and communications systems. It has been known for nearly a century that a principal requisite of any system that achieves robust regulation is the ability to employ negative feedback (Black 1934; Cannon 1932). This requires that the level of any signal that is to be controlled be sensed. Crucial to the performance of this system is an accurate sensor. Feedback sensors only provide imperfect information about the signal that is to be controlled. The measured signal will be affected by stochastic noise, lack of responsiveness, as well as nonlinearities. As any control action is carried out based on this imperfect signal, a well-functioning regulator must take into account these limitations.

Almost as important, however, is the requirement that the sensed information be relayed with high fidelity to the controller and that the control system output be conveyed to the system that is to be controlled. Though the requirement that information be transmitted with high fidelity mirrors the problems that communication engineering has long dealt with, it is only recently that control engineers have begun to consider the role that information theory plays in control systems. Here, we review some of this literature, while emphasizing the connections with biological regulation.

2 Results

2.1 Disturbance rejection: the internal model principle

In biological regulatory problems, considerable attention has been placed on perfect adaptation and its connection with the internal model principle of control engineering (Francis and Wonham 1976; Yi et al. 2000; Sontag 2003; Andrews et al. 2008). Briefly, the idea is to have a system that detects a persistent, environmental disturbance and then sets in motion a cascade of reactions that, over time, eliminates the effect of this disturbance. The best studied such system is the chemotactic pathway of E. coli. In this context, the environmental disturbance represents changes in the chemoattractant concentration of the surrounding medium. Alon et al. (1999) showed that this system displayed perfect adaptation in spite of genetic mutations that greatly changed the expression levels of various components of the signaling pathway. Yi et al. (2000) analyzed the equations that govern adaptation in bacteria (Barkai and Leibler 1997) and demonstrated that this robustness could be attributed to the presence of an integral control feedback element in the loop. It is important to note that perfect adaptation characterizes a specific response to a specific disturbance. In this case, the stimulus changes from one constant value to another constant value, and the response is measured as the steady-state value. Whereas cells adapt to this step change in chemoattractant concentration, they do not necessarily adapt to other stimuli. For example, E. coli chemotactic activity does not adapt when stimulated by ramps or sinusoidally changing signals (Block et al. 1983; Shimizu et al. 2010). In general, perturbations can be imposed upon the cell from outside, as in the chemotactic signaling system of E. coli, but they may also be internal. For example, deviations away from a steady state caused by the stochastic nature of chemical reactions can be considered as unmeasurable perturbations on the system.

Sensitivity minimization through negative feedback. a Simple three-node network in which an external signal (U) cascades through two nodes (X and Y). Negative feedback is provided through node Z. Each node exists in two states, active and inactive, and is stimulated through Michaelis–Menten-like activation and deactivation terms. Equations for the system are provided in the text. b Simulations of a constant change in the signal U, in which the negative feedback strength is varied. The system is initially assumed to be at rest. Plotted is the normalized concentration of y(t) as a function of time for the open-loop case (\(k_{ZX}=0\); darkest) and five other feedback strengths. The parameters used are: \(k_{UX}=k_{X}=k_{XY}=k_{Y}=k_{YZ}=1\), \(k_{-X}=k_{X}^\prime =k_{-Y}=k_{Y}^\prime =0.2\), \(k_{-Z}=0.5\), \(k_{Z}=k_{Z}^\prime = 0.05\). c Plot of the steady-state value of y as a function of \(k_{ZX}\). The inset shows a greater range of feedback gains on a logarithmic scale

Going forward, it is useful to consider a specific signaling system, shown in Fig. 1a. As in Ma et al. (2009), we use a three-node network in which an external stimulus (U) gives rise to a response (Y). The nodes in the network represent signaling elements that toggle between active and inactive states, according to Michaelis–Menten kinetics. Whereas positive interactions increase the rate of the enzymatic reaction activating the product, negative interactions increase the rate of inactivation. The network includes a negative feedback loop achieved through node Z, whose strength can be changed by varying the parameter \(k_{ZX}\). The following set of differential equations describes the system, where x, y and z denote suitably normalized concentrations of the active states of the X, Y and Z components, u denotes the level of the stimulus, and the fractions of inactive states are therefore \(1-x\), \(1-y\) and \(1-z\), respectively:

All coefficients in these equations are assumed to be positive, but their specific values will not be needed.
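The qualitative behavior described for Fig. 1b, c can be explored with a minimal simulation sketch. The rate laws below are an assumption: they follow the generic Michaelis–Menten form used for three-node enzymatic networks, with the feedback from Z entering as an extra inactivation term on X, and they are not necessarily the exact equations of this paper. The parameter values are those listed in the Fig. 1 caption; the function name `simulate` and the forward-Euler scheme are illustrative choices.

```python
def simulate(k_zx, u=1.0, t_end=100.0, dt=0.01):
    """Forward-Euler integration of an ASSUMED three-node model.

    Returns the trajectory of y(t) for a step in u applied at t = 0,
    starting from rest (x = y = z = 0).
    """
    # Parameter values from the Fig. 1 caption.
    k_ux = k_x = k_xy = k_y = k_yz = 1.0
    k_mx = k_xp = k_my = k_yp = 0.2      # k_{-X}, k_X', k_{-Y}, k_Y'
    k_mz, k_z, k_zp = 0.5, 0.05, 0.05    # k_{-Z}, k_Z, k_Z'

    x = y = z = 0.0
    ys = []
    for _ in range(int(t_end / dt)):
        # Assumed rate laws: activation acts on the inactive fraction,
        # inactivation (including feedback from Z) on the active fraction.
        dx = (k_ux * u * (1 - x) / ((1 - x) + k_x)
              - k_mx * x / (x + k_xp)
              - k_zx * z * x / (x + k_xp))
        dy = k_xy * x * (1 - y) / ((1 - y) + k_y) - k_my * y / (y + k_yp)
        dz = k_yz * y * (1 - z) / ((1 - z) + k_z) - k_mz * z / (z + k_zp)
        x, y, z = x + dt * dx, y + dt * dy, z + dt * dz
        ys.append(y)
    return ys


if __name__ == "__main__":
    for gain in (0.0, 1.0, 5.0):
        print(f"k_ZX = {gain}: y at end of run = {simulate(gain)[-1]:.3f}")
```

Under these assumed rate laws, the extra inactivation term can only lower x, and hence y, relative to the open-loop case, which is the trend that Fig. 1c reports.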

We can define the sensitivity of the system as the fractional change in the system output relative to the fractional change in a parameter of interest. For example, if we wish to determine how sensitive the system is to a change in the constant level of the stimulus u(t) from \(\bar{u}\) to \(\bar{u}+\Delta u\) with a resultant change in the output from \(\bar{y}\) to \(\bar{y} + \Delta y\), we define:

In the case where the changes are infinitesimal, this is equivalent to

This steady-state sensitivity has been used by a number of researchers in the analysis of biological systems. In the context of adaptation to constant signals, Ma et al. (2009) called this steady-state sensitivity precision. Shibata and Fujimoto (2005) used it to analyze ultrasensitive signal transduction pathways and referred to it as the system gain. Finally, Hornung and Barkai (2008) called it susceptibility and used it to study the effect of feedback attenuation in noise in biological circuits.
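As a small numerical illustration of the two forms of the definition, the sketch below evaluates the finite-change ratio of fractional changes and its infinitesimal counterpart, the logarithmic derivative \(\mathrm{d}\ln y/\mathrm{d}\ln u\). The input-output map used here, a Michaelis–Menten curve \(y=u/(K+u)\), is a hypothetical stand-in and not the model of Fig. 1; for this curve the logarithmic derivative is \(K/(K+u)\).

```python
import math


def relative_sensitivity(f, u_bar, delta_u):
    """Ratio of fractional output change to fractional input change."""
    y_bar = f(u_bar)
    delta_y = f(u_bar + delta_u) - y_bar
    return (delta_y / y_bar) / (delta_u / u_bar)


def log_sensitivity(f, u_bar, h=1e-4):
    """Central-difference estimate of d ln(y) / d ln(u) at u_bar."""
    up = math.log(f(u_bar * math.exp(h)))
    down = math.log(f(u_bar * math.exp(-h)))
    return (up - down) / (2.0 * h)


if __name__ == "__main__":
    K = 1.0
    michaelis_menten = lambda u: u / (K + u)   # hypothetical steady-state map
    print(relative_sensitivity(michaelis_menten, 1.0, 0.5))
    print(log_sensitivity(michaelis_menten, 1.0))
```

For small \(\Delta u\) the two quantities agree, whereas for finite steps the first depends on the step size.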

It has been customary to assume that the system is at rest, \(u(t)=\bar{u}=0\) leading to \(\bar{x}=\bar{y}=\bar{z}=0\). In this case, the definition of Eq. 1 is not suitable. Instead, we consider \(\Delta {y}/\Delta {u}\). In either case, if the system returns to its prestimulus level, it is completely insensitive to the external stimulus and is said to achieve perfect adaptation. When the recovery is incomplete, we have partial adaptation. In Fig. 1b, c, we see that the sensitivity of the system can be lowered by increasing the strength of the negative feedback path; with stronger feedback, the steady-state value of node y decreases. This figure shows that, for sufficiently high negative feedback, the system starts displaying damped oscillations.

It is clear that lowering the steady-state gain further requires a further increase in the feedback strength. In fact, perfect adaptation entails an infinite magnitude feedback loop. However, while the steady-state level decreases with extremely high feedback gains, this also leads to a decrease in the initial response (Fig. 1b). This could be desirable in some cases, for example, if the cell is to completely ignore the external disturbance. However, in many cases it is important to detect the external disturbance, act accordingly and then adapt so as to be able to detect further signals. This requires the cell to be initially highly sensitive to the disturbance but become insensitive over longer time periods. The key to understanding the trade-off lies in noting that two different feedback strengths are needed to achieve the diverse levels of sensitivity. For short time scales following the initial detection of the signal, a small gain is desirable. However, for longer time scales, the gain needs to be larger. In fact, for perfect adaptation, which is a steady-state (\(t\rightarrow \infty \)) consideration, it should approach infinity. Of course, this is precisely the nature of an integral control feedback. Specifically, we replace the feedback input \(-k_{ZX}z(t)\) that appears at the end of the equation for \(\frac{\mathrm{d}x}{\mathrm{d}t}\) with an integrator

that can be described in the frequency domain (see, for example, Iglesias and Ingalls (2010)) by

This expression shows the time-scale decomposition of the gain. For short time scales (equivalent to \(\omega =\infty \) in Eq. 2), the gain is infinitesimally small. On the other hand, for longer time scales (equivalent to \(\omega =0\)), the gain is infinite. To achieve integral feedback within this model structure, we first assume that the forward reaction for Z is at saturation: \(1-z(t) \gg k_Z=\epsilon \), with \(\epsilon \ll 1\), and the reverse reaction is in the linear range: \(z(t)\ll k_Z^\prime \). In this case, the equation for Z,

describes a first-order linear system, with transfer function

The magnitude of this function shows the time-scale dependency of the gain:

Now, if \(k_Z^\prime =\epsilon \), with \(\epsilon \ll 1\) the feedback gain \(|H_{ZY}(i\omega )|\) approaches infinity at \(\omega =0\), which represents the steady-state case. On the other hand, at high frequencies, which correspond to the initial times after the stimulus, the feedback gain is zero. We see from this how integral control achieves the control gains that we seek.

That a control system must have integral control to reject constant disturbances robustly with zero steady-state error is well known and is a special case of the much broader internal model principle (Francis and Wonham 1976). It is important to note that integral control only ensures that constant signals are rejected. Other persistent signals, such as sinusoidal signals, require other components in the control loop.
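The time-scale dependence of the feedback gain can be made concrete with a short sketch. It assumes a generic first-order element \(H(s)=k/(s+a)\), whose gain is \(|H(i\omega )|=k/\sqrt{\omega ^2+a^2}\); the symbols k and a are illustrative and are not the paper's coefficients. As \(a\rightarrow 0\) the element approaches a pure integrator \(k/s\), with unbounded gain at \(\omega =0\) and vanishing gain at high frequency.

```python
def feedback_gain(omega, k=1.0, a=0.01):
    """Magnitude of the assumed first-order element H(s) = k / (s + a) at s = i*omega."""
    return abs(k / (1j * omega + a))


if __name__ == "__main__":
    for a in (1.0, 1e-2, 1e-4):
        low = feedback_gain(1e-6, a=a)    # long time scales
        high = feedback_gain(1e3, a=a)    # short time scales
        print(f"a = {a:g}: low-frequency gain = {low:.3g}, high-frequency gain = {high:.3g}")
```

The low-frequency gain grows as \(k/a\) while the high-frequency gain stays close to \(k/\omega \), which is the separation of gains that integral control exploits.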

2.1.1 Motifs achieving perfect adaptation

In the previous section, we have considered a specific means of achieving perfect adaptation, based on an explicit negative feedback mechanism that implements integral control. This is not the only means of achieving this perfect regulation. Koshland (1977) suggested a means for explaining the chemoattractant-mediated regulation of bacterial tumbling frequencies based on the complementary activation of a response regulator. In the scheme, positive and delayed negative receptor-mediated signals act on a common signal. This scheme, which can also achieve perfect adaptation, is now typically referred to as an incoherent feedforward regulator (IFF) (Milo et al. 2002) and has been used to describe the adaptation properties of various systems (Levchenko and Iglesias 2002; Mangan et al. 2006; Silva-Rocha and de Lorenzo 2011; Takeda et al. 2012; Chen et al. 2013). Though not explicitly expressed as a negative feedback controller, the IFF motif can also be represented as such (Krishnan and Iglesias 2003; Shoval et al. 2010). Ma et al. (2009), by using an extensive simulation of all possible three-node enzymatic networks, showed that the only two motifs achieving perfect adaptation to step changes in the stimulus are the negative feedback (NFB) loop of Fig. 1 and the IFF. However, though the two schemes show adaptive behavior to step changes in stimulus, their response to other signals can vary (Iglesias and Shi 2014). For example, whereas the IFF scheme also adapts perfectly to other signals such as ramps, the NFB scheme does not (Iglesias and Shi 2014). Moreover, when considering stochastic signals, neither adapts perfectly when looking at the variance, rather than the mean (Bostani et al. 2012; Iglesias and Shi 2014; Shankar et al. 2015; Buzi and Khammash 2016). Recently, Briat et al. (2016) proposed the antithetic integral feedback mechanism that reduces the sensitivity of the variance to changes in the stimulus.

2.2 Limits on sensitivity minimization

Under the assumptions made at the end of the previous section, the feedback loop is a linear system, which allows us to use a frequency domain analysis based on the Fourier and Laplace transforms. We can also use these transforms to describe the rest of the system if we linearize it so that we consider only small deviations from the steady state. The system can be separated in the traditional plant controller form as shown in Fig. 2a, where the open-loop system (ignoring the role of the feedback Z) is

and the feedback transfer function is

where

and

Sensitivity function. a The linearized closed-loop system can be described by these transfer functions. The three systems are equivalent and show how the sensitivity function acts to modify the open-loop system. b Bode plot of the sensitivity function for the various feedback strengths considered in Fig. 1. Note how increasing the feedback strength leads to greater low frequency attenuation, but an increased peak at the higher frequencies. c This waterbed effect is characterized by Bode’s integral, which states that the area of sensitivity attenuation (gain below 0 dB; in light gray) must be matched by areas of increased sensitivity (gain above 0 dB; in dark gray)

Note that the subscripts in the coefficients of Eq. 5 are used to denote their respective location in the Jacobian matrix, and that the values \(\bar{x}\), \(\bar{y}\), \(\bar{z}\) and \(\bar{u}\) are the steady-state concentrations at which the linearization takes place (Iglesias and Ingalls 2010). After closing the feedback loop, the closed-loop system, from disturbance u(t) to output y(t), is

We write the closed-loop transfer function as the product of two components: \(\widetilde{H}_{YU}(i\omega )=bH_{YU}(i\omega )S(i\omega )\) where

The function \(S(i\omega )\) is known as the sensitivity function; it describes how feedback affects the transfer function of the closed-loop system. In the frequency domain, this transfer function exhibits a biphasic response (Fig. 2b). The (infinitely) small gains at low frequency arise because of the presence of integral control. However, at mid-frequencies, the gain increases, signifying that there are periodic stimuli whose response is actually enhanced. In the time domain, this is manifested as the oscillatory response shown in Fig. 1b. If the disturbance represents perturbations with a constant frequency spectrum, as would be expected in the case of stochastic fluctuations seen in chemical reactions, particularly at low copy numbers, the output will amplify the mid-range of these frequencies; thus, negative feedback can shift the observed frequencies of the disturbances (Simpson et al. 2003; Cox et al. 2006; Hansen et al. 2018). Negative feedback is chosen to lessen the sensitivity of the closed-loop system relative to the open-loop one, which corresponds to a gain less than one: \(|S(i\omega )|<1\). However, this cannot be achieved at all frequencies. More precisely, for any feedback system, if the sensitivity is reduced at certain frequency values \(\omega \), then there will be other regions in frequency space in which the sensitivity will actually increase. The precise relationship was first given by Bode (1945):

and is now known as Bode’s Integral Formula (Fig. 2). More familiarly, it has come to be known as the “conservation of dirt,” following a celebrated Bode lecture by Gunter Stein (2003), or the waterbed effect. Bode derived this formula for a specific type of system: linear, time-invariant systems that were open-loop stable and had a relative degree (the degree of the open-loop denominator polynomial minus that of the numerator polynomial) of two or greater.

In the 75 years since Bode’s result was published, there have been numerous extensions. Freudenberg and Looze (1985) published one of the most significant extensions, by including the effect of open-loop unstable poles; in particular, they showed that

where \({\text {Re}}(p_k)\) are the real parts of any unstable poles of the open-loop system and L(s) is the open-loop transfer function of the system. This indicates that, when the open-loop system has unstable poles, the area of sensitivity reduction is strictly smaller than the area of sensitivity amplification. The effect of time delays was considered in Freudenberg and Looze (1987). For systems described by difference equations, there is a corresponding discrete-time sensitivity integral, which mirrors that of Bode (Sung and Hara 1988).
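These integral constraints can be checked numerically. In its standard textbook form, for a loop transfer function L(s) of relative degree two or more and a stable closed loop, the constraint reads \(\int _0^\infty \ln |S(i\omega )|\,\mathrm{d}\omega =\pi \sum _k {\text {Re}}(p_k)\), with \(S=1/(1+L)\) and the sum taken over the unstable open-loop poles. The sketch below evaluates the integral for two hypothetical loops chosen purely for illustration, \(L(s)=10/((s+1)(s+2))\) and \(L(s)=10/((s-1)(s+2))\); the substitution \(\omega =\tan \theta \) maps the infinite frequency axis to a finite interval.

```python
import math


def log_abs_sensitivity(loop_value):
    """ln|S| = -ln|1 + L|, written with log1p for accuracy when |L| is tiny."""
    return -0.5 * math.log1p(2.0 * loop_value.real + abs(loop_value) ** 2)


def bode_integral(loop, n=100_000):
    """Midpoint-rule estimate of the integral of ln|S(i w)| over w in [0, inf)."""
    h = (math.pi / 2.0) / n
    total = 0.0
    for i in range(n):
        theta = (i + 0.5) * h
        omega = math.tan(theta)                  # w = tan(theta)
        jacobian = 1.0 / math.cos(theta) ** 2    # dw = sec^2(theta) d(theta)
        total += log_abs_sensitivity(loop(1j * omega)) * jacobian
    return total * h


if __name__ == "__main__":
    stable_loop = lambda s: 10.0 / ((s + 1.0) * (s + 2.0))
    unstable_loop = lambda s: 10.0 / ((s - 1.0) * (s + 2.0))   # open-loop pole at s = 1
    print("open-loop stable:  ", bode_integral(stable_loop))
    print("open-loop unstable:", bode_integral(unstable_loop), "vs pi =", math.pi)
```

Both example loops yield stable closed-loop systems (the characteristic polynomials are \(s^2+3s+12\) and \(s^2+s+8\)), which the formula requires.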

The results given above deal only with the systems that can be described by transfer functions; namely, those that are linear and time-invariant. However, there was much interest in studying whether these restrictions applied to other more general classes of systems. A series of papers considered some of the relevant concepts, including the notion of zeros and poles of linear time-varying systems (O’Brien and Iglesias 2001; Padoan and Astolfi 2020) and how these could be used to generalize Bode’s integral (Iglesias 2001b, 2002), as well as other related concepts including Jensen’s formula (Jensen 1899; Iglesias 2001a) and Szegö’s first limit theorem (Szegö 1915; Iglesias 2002).

2.3 Connections with information theory

The logarithm that appears in Bode’s integral is related to one that is found in information theory. To express this, we recall a few definitions from information theory (Cover and Thomas 2006). For a random variable with probability density function f(x), the differential entropy is given by the function

If \(x=\{x_n\}_{n=1}^\infty \) is a sequence, with discrete-time index n, then the entropy rate is given by

For a system with asymptotically stationary input \(u=\{u_n\}_{n=1}^\infty \) and output \(y=\{y_n\}_{n=1}^\infty \) that are related through the discrete-time transfer function \(F(e^{i\omega })\), the entropy rates of the input and output satisfy (Kolmogorov 1956):

Moreover, if F(z) has no zeros outside the unit circle, and \(\lim _{z\rightarrow \infty } F(z)=1\), then \(h_\infty (y)=h_\infty (u)\). If we apply this result to the sensitivity function, this integral is the same as in the discrete-time version of Bode’s integral (Sung and Hara 1988). In particular, the difference in entropy rates between the error, which is measured by the sensitivity function, and the input is bounded.
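The role of the zero locations can be illustrated numerically. In the standard form of this relation, the entropy rates of output and input differ by the mean log-gain \(\frac{1}{2\pi }\int _{-\pi }^{\pi }\ln |F(e^{i\omega })|\,\mathrm{d}\omega \). The sketch below evaluates this quantity for a hypothetical first-order filter \(F(z)=1-a/z\), which satisfies \(\lim _{z\rightarrow \infty }F(z)=1\) and has a single zero at \(z=a\). By Jensen's formula the result is zero when \(|a|<1\) and \(\ln |a|\) when \(|a|>1\).

```python
import cmath
import math


def mean_log_gain(transfer, n=4096):
    """Midpoint-rule estimate of (1/2pi) * integral over [-pi, pi] of ln|F(e^{iw})|."""
    total = 0.0
    for i in range(n):
        omega = -math.pi + (i + 0.5) * (2.0 * math.pi / n)
        total += math.log(abs(transfer(cmath.exp(1j * omega))))
    return total / n


if __name__ == "__main__":
    inside = lambda z: 1.0 - 0.5 / z    # zero at z = 0.5, inside the unit circle
    outside = lambda z: 1.0 - 2.0 / z   # zero at z = 2, outside the unit circle
    print("zero inside: ", mean_log_gain(inside))
    print("zero outside:", mean_log_gain(outside), "vs ln 2 =", math.log(2.0))
```

A zero outside the unit circle therefore contributes a strictly positive term to the difference in entropy rates, in the same way that unstable open-loop poles contribute to the sensitivity integral.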

Based on this interpretation of the integral, Iglesias and Zang (2001) showed that the trade-offs in sensitivity minimization amount to limits in the ability to process information in the feedback loop. The advantage of this interpretation is that, as it involves only time-domain terms, it is well defined even for systems that do not admit a transfer function, and this includes nonlinear systems such as those that arise in biological systems. Such an interpretation was first used by Iglesias and Zang (2001) to show that if the open-loop nonlinear system has a globally exponentially stable equilibrium, then the difference in entropy rates is zero, thus matching the original result of Bode. This was followed up by showing that if there are unstable dynamics, the difference in entropy rates is strictly positive (Zang and Iglesias 2003).

This information-theoretic approach to Bode’s integral constraint has received considerable support and led to new Bode-like generalizations. Martins et al. (2007) extended the sensitivity integral results to consider a case where the information available to the controller is provided through a finite capacity communication channel. Under asymptotic stationarity assumptions, they show that these new fundamental limitations amount to an additive constant term that quantifies the information rate through this communication system. Ishii et al. (2011) considered feedback control systems in which the feedback system includes nonlinear elements. Extensions to continuous-time systems have also been made where, instead of using the entropy rate, the results are based on the difference in the mutual information rates (Li and Hovakimyan 2013; Wan et al. 2020).

A further connection between sensitivity and information theory can be made through the Fisher information matrix (FIM) (Cover and Thomas 2006)

The FIM is used to quantify the information that an observable random variable y carries about an unobservable parameter x, assuming that f(x, y) is the likelihood function of x. In analyzing biological signaling, Jetka et al. (2018) viewed x as the unobservable input of a system with observable output y and supposed that \(\hat{x}(y)\) is an estimator for x. As the number of measurements N increases, the variance \({\varSigma }(\hat{x}_N)\) of the estimate is given by the FIM according to

This approach was used to discriminate between signaling of type I and type III interferons (Jetka et al. 2018) and NF-\(\kappa \)B signaling responses to TNF-\(\alpha \) (Jetka et al. 2019). Recently, Anders et al. (2020) used the FIM to demonstrate how the MAPK signaling cascade balances energetic costs against the accuracy of information transmission in budding yeast. Relations between the FIM and Bode’s integral are presented in Liu and Elia (2014).
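The link between Fisher information and estimator variance can be illustrated with a textbook case that is not drawn from the cited studies. For N independent Gaussian measurements with unknown mean and known standard deviation \(\sigma \), the Fisher information per measurement is \(1/\sigma ^2\), and the variance of the sample mean equals the inverse of N times that information, \(\sigma ^2/N\). The sketch below compares a Monte Carlo estimate of the variance with this value; the function names, the seed and the trial counts are arbitrary choices.

```python
import random
import statistics


def sample_mean_variance(sigma, n, trials=2000, seed=0):
    """Monte Carlo variance of the sample-mean estimator over repeated experiments."""
    rng = random.Random(seed)
    means = []
    for _ in range(trials):
        samples = [rng.gauss(0.0, sigma) for _ in range(n)]
        means.append(sum(samples) / n)
    return statistics.pvariance(means)


def inverse_fisher_information(sigma, n):
    """1 / (N * I) for a Gaussian mean with known sigma, where I = 1 / sigma^2."""
    fisher_per_sample = 1.0 / sigma ** 2
    return 1.0 / (n * fisher_per_sample)


if __name__ == "__main__":
    sigma, n = 2.0, 50
    print("simulated variance:", sample_mean_variance(sigma, n))
    print("1 / (N * FIM):     ", inverse_fisher_information(sigma, n))
```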

2.4 Causality constraints

It is interesting to consider why the limitations described by Bode’s integral arise. Through the derivations of the information-theoretic description, it can be appreciated that the limitations have their root in causality (Martins and Dahleh 2008; Li and Hovakimyan 2013). The explanation for this, however, sometimes gets lost in the intricate mathematical derivations. Here, we provide a heuristic explanation which, though less rigorous, may be easier to understand. We restrict ourselves to the discrete-time case, as it is somewhat easier.

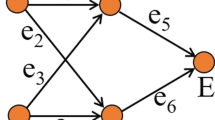

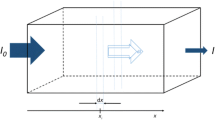

By looking at the closed-loop system of Fig. 3, we see that rejecting a disturbance u(t) amounts to providing a negative feedback input which precisely cancels that disturbance. How could one achieve this if the actual disturbance u is not available, but one has a perfect measurement of the output y? Naïvely, one way would be to place the inverse of the system in the feedback path. This inverse system would take the output \(y_n\) and generate the signal \(u_n\) that led to it. This does not work exactly as planned because the feedback loop alters the signal going into the system and hence changes \(y_n\). However, if we apply this approach, we find that the sensitivity function becomes:

While this does not eliminate the signal, we see that it is independent of frequency, and hence, the sensitivity is reduced for all frequencies, circumventing the limitations described by Bode’s integral. The inclusion of an additional gain \(k>0\) in the feedback path makes \(S(i\omega ) = 1/(1+k)\). Sufficiently high k guarantees adaptation to any desired level. So, what prevents us from doing this? The premise is based on being able to obtain the inverse of the open-loop transfer function which, for realistic systems, leads to causality being violated. As a simple example, suppose that the open-loop system represents a simple exponential decay, given by

The transfer function of this system is

If we set the feedback controller to be the inverse:

we end up with an acausal system, in which the control input \(z_n\) is

That is, the control input at time n depends on system outputs at time \(n+1\).
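The acausality can be made concrete with a few lines of code. The sketch assumes that the exponential decay has the form \(y_{n+1}=a\,y_n+u_n\), so that the inverse system computes \(y_{n+1}-a\,y_n\); this form and the function names are illustrative assumptions. Recovering the input at time n is exact when the whole output record is available, but fails when only the samples up to time n have been observed.

```python
def simulate_decay(u, a, y0=0.0):
    """Output of the assumed system y[n+1] = a*y[n] + u[n]; returns len(u) + 1 samples."""
    y = [y0]
    for u_n in u:
        y.append(a * y[-1] + u_n)
    return y


def inverse_input(y, n, a):
    """Input at time n reconstructed by the inverse system; requires the FUTURE sample y[n+1]."""
    return y[n + 1] - a * y[n]


if __name__ == "__main__":
    a = 0.5
    u = [1.0, 0.0, 2.0, -1.0]
    y = simulate_decay(u, a)

    # With the full record, the disturbance is recovered exactly.
    print([inverse_input(y, n, a) for n in range(len(u))])

    # A causal controller at time n = 2 has only y[0], y[1], y[2].
    observed = y[:3]
    try:
        inverse_input(observed, 2, a)
    except IndexError:
        print("y[3] has not been measured yet: the inverse is not causal")
```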

Clearly, this is not physically realizable. How then can the system reduce sensitivity? The internal model principle provides one answer. By having a model of the disturbance in the loop, it is able to generate a signal that cancels out the disturbance. However, this only happens for specific disturbances that have models inside the loop; it is not possible for arbitrary unknown disturbances. What then in this case? The best that can be done, under a causality constraint on the feedback, is to apply a control signal \(z_n\) at time n that depends only on past observations of the signal y, which cannot cancel arbitrary signals. It is interesting to note that, for open-loop stable systems, the inverse of the sensitivity function is stable, and hence, by measuring \(y_n\), the input \(u_n\) could be estimated. If the open-loop system is unstable, the inverse of the sensitivity function is no longer stable: unstable open-loop poles of the plant appear as unstable (non-minimum-phase) zeros of the sensitivity function, and in computing the inverse these become unstable poles of the inverse of the sensitivity function. This induces even greater error in any estimate of \(u_n\) and exacerbates the information loss between input and output.

Reducing sensitivity by an inverse system. The scheme poses the question of whether the effect of the disturbance can be reduced, or even eliminated by using the information of the disturbance u(t) in the measured signal y(t). If the feedback system is set at \(H_{YU}^{-1}\), then the sensitivity function is constant across frequencies and reduced to 1/2. This, however, violates causality constraints

2.5 Biological examples

Here, we will illustrate how the concepts discussed so far can be used to understand various trade-offs in specific biological systems and how they affect system dynamics. Our first example comes from a minimal two-state model of glycolysis (Fig. 4a) from Chandra et al. (2011):

where the system state x(t) represents the concentration of intermediate metabolites, and the output y(t) is the concentration of ATP. The input to the system, u(t), represents changes in the demanded ATP. The system parameters are the autocatalysis stoichiometry (q), forward reaction rate (k), ATP feedback constants for Phosphofructokinase (PFK) (g) and Pyruvate kinase (PK) (h) and the Hill coefficient for ATP-PFK binding (a). PFK and PK are the regulatory enzymes in glycolysis. Nonlinear systems are often studied using linearized models. Linearization around the steady-state (1/k, 1) leads us to the following model:

Theoretical study of glycolysis. a Minimal model. b Transfer function model of the linearized system and simplified representation using sensitivity function. c Plots of log sensitivity function versus feedback strength, h, for varying numbers of positive and negative feedbacks. Values with \(q>0\) and \(g>0\) represent the presence of a positive feedback and the double negative feedbacks, respectively. The other parameter values are: \(a = 1\), \(k = 3\). Increased h results in lower steady-state error (\(\text {ln} |S_h(0)|\)) but increases the peak amplitude, inducing ringing. The feedback gain, \(g>0\), suppresses the ringing effect. In the third panel, \(h = 4\) corresponds to the critical value \(h_c = a+(k+g(1+q))/q\) resulting in the high peak, which is substantially suppressed with \(g=1\) in the fourth panel. For \(q = 0\), the Bode integral value is independent of h and equal to the real part of the unstable pole of \(H_{YU,g}\). d Respective temporal profiles of output, y(t). The colors match those of c. Note that in the third plot (\(q=1\), \(g=0\)), the system is unstable for \(h=5\), and hence, the plot was omitted

Stability requires that the eigenvalues of \((A+F_1+F_2)\) have negative real parts. On the other hand, robustness requires that the steady-state ratio, \({\Delta y_{ss}}/{u_{ss}}\rightarrow 0\). Together, these requirements result in contradictory constraints:

This indicates the existence of a trade-off: improved robust performance requires energy-inefficient, highly complex enzymatic reaction dynamics, whereas preserving stability requires that this complexity stay below an upper bound \((h_c = a+(k+g(1+q))/q)\), at which the system output, y, oscillates. (Eigenvalues of \((A+F_1+F_2)\) become purely imaginary.) This also indicates that the oscillations can be avoided at the expense of extra energy consumption. The criticality of this trade-off can be further explained through the transfer function model. The closed-loop transfer function, \(\widetilde{H}_{YU}(i\omega )\), of the system can be written as:

where

is the transfer function of the open-loop plant, and

and

are two parallel feedback elements (Fig. 4b). As the steady-state error does not depend directly on g, we can consider \(G(i\omega )\) to be the part of the internal feedback loop and \(H(i\omega )\) as a tunable external feedback element. This enables us to write the closed-loop transfer function as the product of the modified plant model, \(H_{YU,g}(i\omega )\) and the sensitivity function, \(S_h(i\omega )\). Now, \(H_{YU,g}(i\omega )\) has an unstable zero, \(z = k/q\) and an unstable pole, p. Using Eq. 7, the Bode integral can be written as:

Thus, in the presence of the positive feedback (\(q>0\)), the peak response of log sensitivity increases resulting in oscillatory responses which can be subdued by the choice of \(g>0\) (Fig. 4c).
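The critical feedback strength quoted in the text and in the caption of Fig. 4, \(h_c = a+(k+g(1+q))/q\), is easily evaluated for the parameter values used there. The short sketch below does so; the function name is illustrative, and returning infinity for \(q=0\) is a convention adopted here to represent the limit of the expression as \(q\rightarrow 0^+\).

```python
import math


def critical_feedback_strength(a, k, g, q):
    """Evaluate h_c = a + (k + g*(1 + q)) / q, the stability bound on h."""
    if q == 0:
        # The expression diverges as q -> 0+, so no finite bound is reported.
        return math.inf
    return a + (k + g * (1 + q)) / q


if __name__ == "__main__":
    # Caption values a = 1, k = 3, with autocatalysis q = 1.
    print(critical_feedback_strength(a=1, k=3, g=0, q=1))   # third panel: g = 0
    print(critical_feedback_strength(a=1, k=3, g=1, q=1))   # fourth panel: g = 1
```

With \(g=0\) this reproduces the value \(h_c=4\) stated in the caption, and setting \(g=1\) raises the bound, consistent with the suppression of the peak in the fourth panel.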

2.5.1 Energy consumption and perfect adaptation

In the previous example, we saw how the energy expenditure plays a critical role in the trade-off. A similar scenario is observed during adaptation of bacterial chemotaxis in E. coli. Lan et al. (2012) developed the following stochastic description of the system, involving external input, u(t), controller state x(t) (slow) and output state y(t) (fast):

where \(\alpha \in [0,1]\) and \(\beta \in \mathbb {R}_+\) are constants and \(\bar{y}\) is the desired steady-state value of the output. The signals \(w_x\) and \(w_y\) represent white noise processes affecting the respective states. The function \(F(u,x) = (1+u/e^{2x})^{-1}\) is the mean activity and has opposite dependency on input and the controller, i.e., \(\frac{\partial F(u,x)}{\partial x}>0, \frac{\partial F(u,x)}{\partial u}<0\). Finally, \(\varepsilon _x\) and \(\varepsilon _y\) are the time scales associated with the x and y dynamics, respectively. In this system, \(\varepsilon _x\) dictates the adaptation speed and satisfies \(\varepsilon _x\ll \varepsilon _y\).
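The opposite dependencies of the mean activity on input and controller state can be verified directly from the stated function \(F(u,x) = (1+u/e^{2x})^{-1}\). The sketch below evaluates F and estimates its partial derivatives by central differences; the evaluation point and step size are arbitrary choices.

```python
import math


def mean_activity(u, x):
    """F(u, x) = 1 / (1 + u / exp(2x))."""
    return 1.0 / (1.0 + u * math.exp(-2.0 * x))


def partial_derivatives(u, x, h=1e-6):
    """Central-difference estimates of (dF/du, dF/dx) at the point (u, x)."""
    dF_du = (mean_activity(u + h, x) - mean_activity(u - h, x)) / (2.0 * h)
    dF_dx = (mean_activity(u, x + h) - mean_activity(u, x - h)) / (2.0 * h)
    return dF_du, dF_dx


if __name__ == "__main__":
    dF_du, dF_dx = partial_derivatives(u=1.0, x=0.0)
    print("dF/du =", dF_du, "(negative: the input lowers activity)")
    print("dF/dx =", dF_dx, "(positive: the controller state raises activity)")
```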

Through linear stability analysis, Lan et al. (2012) showed that the steady state is only stable for sufficiently high values of \(\beta \). Furthermore, they developed a relationship between the rate of the energy dissipation (W) associated with adaptation and the relative adaptation error, \(\Delta y = |(y-\bar{y})/\bar{y}|\):

This indicates that adaptation is an energy expensive process; the cost increases with adaptation speed and accuracy. If the accuracy is to remain unaltered, then for limited supply of energy, the adaptation speed needs to decrease. In fact, experiments in which E. coli cells are starved (in nutrient-free tethering buffer) show that the adaptation time increases while keeping the accuracy unaltered (Lan et al. 2012).

This extra energy requirement has some connection with the trade-offs seen in Bode’s integral. As we are rejecting a constant external disturbance, integral control is required, and this forces the magnitude of the sensitivity function toward zero (\(-\,\infty \), when expressed in dB) as the frequency approaches zero. However, different controllers can achieve perfect adaptation at different speeds. Faster adaptation times are achieved when the graph of the magnitude is lower, essentially achieving a greater bandwidth (Fig. 2b). According to Bode’s integral, this comes at a higher price, in terms of sensitivity magnification at higher frequencies. In terms of energy consumption, Lan et al. (2012) show that there is also a penalty.
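The trade-off described above can be illustrated numerically. The sketch below does not use the chemotaxis model: it assumes a hypothetical loop transfer function \(L(s) = k/(s(s+a))\), chosen only because it contains an integrator, has relative degree two and gives a stable closed loop for \(k, a > 0\). For such a loop, Bode's integral of \(\ln |S(j\omega )|\) over all frequencies is zero. Raising the gain \(k\) lowers the sensitivity at low frequencies but must raise it elsewhere.

```python
import math


def sensitivity(omega, k, a=1.0):
    """S(jw) = 1 / (1 + L(jw)) for the assumed loop L(s) = k / (s (s + a))."""
    s = 1j * omega
    loop = k / (s * (s + a))
    return 1.0 / (1.0 + loop)


def bode_integral(k, a=1.0, n=100_000):
    """Midpoint-rule estimate of the integral of ln|S(jw)| over w in (0, inf).

    The substitution w = tan(theta) maps the half-line onto (0, pi/2),
    so dw = d(theta) / cos(theta)^2.
    """
    h = (math.pi / 2.0) / n
    total = 0.0
    for i in range(n):
        theta = (i + 0.5) * h
        omega = math.tan(theta)
        total += math.log(abs(sensitivity(omega, k, a))) / math.cos(theta) ** 2
    return total * h


def peak_sensitivity(k, a=1.0, n=20_000, omega_max=100.0):
    """Largest |S(jw)| on a uniform frequency grid (a coarse estimate)."""
    return max(abs(sensitivity(omega_max * (i + 1) / n, k, a)) for i in range(n))


if __name__ == "__main__":
    for gain in (1.0, 10.0):
        print(
            "k =", gain,
            "| Bode integral ~", bode_integral(gain),
            "| |S(j0.1)| =", abs(sensitivity(0.1, gain)),
            "| peak |S| ~", peak_sensitivity(gain),
        )
```

In this sketch, the larger gain reduces \(|S|\) at low frequency, which corresponds to faster rejection of slow disturbances. The estimated integral nevertheless stays near zero, so the peak of \(|S|\) at intermediate frequencies grows.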

3 Discussion

The well-known Indian parable of the blind men and the elephant teaches us that when we come across an object that is new to us, we tend to rely on what is familiar to us to describe and understand it. While the concepts of feedback control, information theory and thermodynamics arose separately to describe problems in widely varying fields, it is perhaps not surprising that they are so intimately connected.

During the last twenty years, there has been great interest in using theories from control systems to understand biological processes. This was likely prompted by the work of S. Leibler and coworkers describing the robustness of biological regulation (Barkai and Leibler 1997; Alon et al. 1999). The interpretation of the origin of this robustness, which could be based on well-known results of control theory, led to increased interaction between biologists and control theorists. This continues to date, and is likely having its greatest impact in the field of synthetic biology, which aims to design de novo regulatory systems that can achieve this desired regulation (Del Vecchio et al. 2016; Olsman et al. 2019).

More recently, an information-theoretic view of biological signaling constraints has attracted considerable attention (Rhee et al. 2014; Iglesias 2016). In particular, these studies have led to an appreciation that information (acquiring it, transmitting it, and possibly storing it) is an important part of an organism’s physiology. Of course, information transmission, like regulation, requires energy. The seminal work of Edwin Jaynes established a firm connection between information theory and thermodynamics (Jaynes 1957).

It should be noted that many of the analyses used here are based on linearizations of the system dynamics. The advantage of this is that it opens up a wealth of engineering tools, many of which rely on the assumptions of linearity and time invariance. These work well, but clearly have their limitations, as there are interesting behaviors that cannot be represented by such systems. While we have suggested that there are extensions to nonlinear systems, this is still very much an open field.

This paper was to be presented at the workshop “Mathematical Models in Biology: from Information Theory to Thermodynamics”. Our goal has been to demonstrate how control theory, together with information theory and statistical thermodynamics, forms the legs of a sturdy tripod on which a mathematical understanding of biology can rest.

References

Alon U, Surette MG, Barkai N, Leibler S (1999) Robustness in bacterial chemotaxis. Nature 397(6715):168–71. https://doi.org/10.1038/16483

Anders A, Ghosh B, Glatter T, Sourjik V (2020) Design of a MAPK signalling cascade balances energetic cost versus accuracy of information transmission. Nat Commun 11(1):3494. https://doi.org/10.1038/s41467-020-17276-4

Andrews BW, Sontag ED, Iglesias PA (2008) An approximate internal model principle: applications to nonlinear models of biological systems. IFAC Proc Vol 41(2):15873–15878. https://doi.org/10.3182/20080706-5-KR-1001.02683

Barkai N, Leibler S (1997) Robustness in simple biochemical networks. Nature 387(6636):913–7. https://doi.org/10.1038/43199

Black HS (1934) Stabilized feedback amplifiers. Bell Syst Tech J 13(1):1–18

Block SM, Segall JE, Berg HC (1983) Adaptation kinetics in bacterial chemotaxis. J Bacteriol 154(1):312–323. https://doi.org/10.1128/jb.154.1.312-323.1983

Bode HW (1945) Network analysis and feedback amplifier design. D. Van Nostrand, New York

Bostani N, Kessler DA, Shnerb NM, Rappel WJ, Levine H (2012) Noise effects in nonlinear biochemical signaling. Phys Rev E Stat Nonlinear Soft Matter Phys 85(1 Pt 1):011901. https://doi.org/10.1103/PhysRevE.85.011901

Briat C, Gupta A, Khammash M (2016) Antithetic integral feedback ensures robust perfect adaptation in noisy biomolecular networks. Cell Syst 2(2):133. https://doi.org/10.1016/j.cels.2016.02.010

Buzi G, Khammash M (2016) Implementation considerations, not topological differences, are the main determinants of noise suppression properties in feedback and incoherent feedforward circuits. PLoS Comput Biol 12(6):e1004958. https://doi.org/10.1371/journal.pcbi.1004958

Cannon WB (1932) The wisdom of the body. W.W. Norton & Company, New York

Chandra FA, Buzi G, Doyle JC (2011) Glycolytic oscillations and limits on robust efficiency. Science 333(6039):187–92. https://doi.org/10.1126/science.1200705

Chen SH, Masuno K, Cooper SB, Yamamoto KR (2013) Incoherent feed-forward regulatory logic underpinning glucocorticoid receptor action. Proc Natl Acad Sci USA 110(5):1964–9. https://doi.org/10.1073/pnas.1216108110

Cover TM, Thomas JA (2006) Elements of information theory. Wiley, New York

Cox CD, McCollum JM, Austin DW, Allen MS, Dar RD, Simpson ML (2006) Frequency domain analysis of noise in simple gene circuits. Chaos 16(2):026102. https://doi.org/10.1063/1.2204354

Del Vecchio D, Dy AJ, Qian Y (2016) Control theory meets synthetic biology. J R Soc Interface. https://doi.org/10.1098/rsif.2016.0380

Francis B, Wonham W (1976) The internal model principle of control theory. Automatica 12(5):457–465. https://doi.org/10.1016/0005-1098(76)90006-6

Freudenberg J, Looze D (1985) Right half plane poles and zeros and design tradeoffs in feedback systems. IEEE Trans Autom Control 30(6):555–565. https://doi.org/10.1109/TAC.1985.1104004

Freudenberg J, Looze D (1987) A sensitivity tradeoff for plants with time delay. IEEE Trans Autom Control 32(2):99–104. https://doi.org/10.1109/TAC.1987.1104547

Hansen MMK, Wen WY, Ingerman E, Razooky BS, Thompson CE, Dar RD, Chin CW, Simpson ML, Weinberger LS (2018) A post-transcriptional feedback mechanism for noise suppression and fate stabilization. Cell 173(7):1609–1621.e15. https://doi.org/10.1016/j.cell.2018.04.005

Hornung G, Barkai N (2008) Noise propagation and signaling sensitivity in biological networks: a role for positive feedback. PLoS Comput Biol 4(1):e8. https://doi.org/10.1371/journal.pcbi.0040008

Iglesias PA (2001a) A time-varying analogue of Jensen’s formula. Integr Equ Oper Theory 40(1):34–51. https://doi.org/10.1007/BF01202953

Iglesias PA (2001b) Tradeoffs in linear time-varying systems: an analogue of Bode’s sensitivity integral. Automatica 37(10):1541–1550. https://doi.org/10.1016/S0005-1098(01)00103-0

Iglesias PA (2002) Logarithmic integrals and system dynamics: an analogue of Bode’s sensitivity integral for continuous-time, time-varying systems. Linear Algebra Appl 343:451–471. https://doi.org/10.1016/S0024-3795(01)00360-3

Iglesias PA (2016) The use of rate distortion theory to evaluate biological signaling pathways. IEEE Trans Mol Biol Multiscale Commun 2(1):31–39. https://doi.org/10.1109/TMBMC.2016.2623600

Iglesias PA, Ingalls BP (2010) Control theory and systems biology. MIT Press, Cambridge

Iglesias PA, Shi C (2014) Comparison of adaptation motifs: temporal, stochastic and spatial responses. IET Syst Biol 8(6):268–281. https://doi.org/10.1049/iet-syb.2014.0026

Iglesias PA, Zang G (2001) Szegö’s first limit theorem in terms of a realization of a continuous-time time-varying system. Int J Appl Mat Comput Pol 11:1261–1276

Ishii H, Okano K, Hara S (2011) Achievable sensitivity bounds for MIMO control systems via an information theoretic approach. Syst Control Lett 60(2):111–118. https://doi.org/10.1016/j.sysconle.2010.10.014

Jaynes E (1957) Information theory and statistical mechanics. Phys Rev 106(4):620–630. https://doi.org/10.1103/PhysRev.106.620

Jensen JLWV (1899) Sur un nouvel et important théorème de la théorie des fonctions. Acta Math 22:359–364. https://doi.org/10.1007/BF02417878

Jetka T, Nienałtowski K, Filippi S, Stumpf MPH, Komorowski M (2018) An information-theoretic framework for deciphering pleiotropic and noisy biochemical signaling. Nat Commun 9(1):4591. https://doi.org/10.1038/s41467-018-07085-1

Jetka T, Nienałtowski K, Winarski T, Błoński S, Komorowski M (2019) Information-theoretic analysis of multivariate single-cell signaling responses. PLoS Comput Biol 15(7):e1007132. https://doi.org/10.1371/journal.pcbi.1007132

Kolmogorov A (1956) On the Shannon theory of information transmission in the case of continuous signals. IRE Trans Inf Theory 2(4):102–108. https://doi.org/10.1109/TIT.1956.1056823

Koshland DE Jr (1977) A response regulator model in a simple sensory system. Science 196(4294):1055–63. https://doi.org/10.1126/science.870969

Krishnan J, Iglesias PA (2003) Analysis of the signal transduction properties of a module of spatial sensing in eukaryotic chemotaxis. Bull Math Biol 65(1):95–128. https://doi.org/10.1006/bulm.2002.0323

Lan G, Sartori P, Neumann S, Sourjik V, Tu Y (2012) The energy-speed-accuracy trade-off in sensory adaptation. Nat Phys 8(5):422–428. https://doi.org/10.1038/nphys2276

Levchenko A, Iglesias PA (2002) Models of eukaryotic gradient sensing: application to chemotaxis of amoebae and neutrophils. Biophys J 82(1 Pt 1):50–63. https://doi.org/10.1016/S0006-3495(02)75373-3

Li D, Hovakimyan N (2013) Bode-like integral for continuous-time closed-loop systems in the presence of limited information. IEEE Trans Autom Control 58(6):1457–1469. https://doi.org/10.1109/TAC.2012.2235721

Liu J, Elia N (2014) Convergence of fundamental limitations in feedback communication, estimation, and feedback control over Gaussian channels. Commun Inf Syst 14(3):161–211. https://doi.org/10.4310/CIS.2014.v14.n3.a2

Ma W, Trusina A, El-Samad H, Lim WA, Tang C (2009) Defining network topologies that can achieve biochemical adaptation. Cell 138(4):760–73. https://doi.org/10.1016/j.cell.2009.06.013

Mangan S, Itzkovitz S, Zaslaver A, Alon U (2006) The incoherent feed-forward loop accelerates the response-time of the gal system of Escherichia coli. J Mol Biol 356(5):1073–81. https://doi.org/10.1016/j.jmb.2005.12.003

Martins NC, Dahleh MA (2008) Feedback control in the presence of noisy channels: “Bode-like” fundamental limitations of performance. IEEE Trans Autom Control 53(7):1604–1615. https://doi.org/10.1109/TAC.2008.929361

Martins NC, Dahleh MA, Doyle JC (2007) Fundamental limitations of disturbance attenuation in the presence of side information. IEEE Trans Automat Control 52(1):56–66. https://doi.org/10.1109/TAC.2006.887898

Milo R, Shen-Orr S, Itzkovitz S, Kashtan N, Chklovskii D, Alon U (2002) Network motifs: simple building blocks of complex networks. Science 298(5594):824–7. https://doi.org/10.1126/science.298.5594.824

O’Brien R, Iglesias PA (2001) On the poles and zeros of linear, time-varying systems. IEEE Trans Circuits I 48(5):565–577. https://doi.org/10.1109/81.922459

Olsman N, Baetica AA, Xiao F, Leong YP, Murray RM, Doyle JC (2019) Hard limits and performance tradeoffs in a class of antithetic integral feedback networks. Cell Syst 9(1):49–63.e16. https://doi.org/10.1016/j.cels.2019.06.001

Padoan A, Astolfi A (2020) Singularities and moments of nonlinear systems. IEEE Trans Autom Control 65(8):3647–3654. https://doi.org/10.1109/TAC.2019.2951297

Rhee A, Cheong R, Levchenko A (2014) Noise decomposition of intracellular biochemical signaling networks using nonequivalent reporters. Proc Natl Acad Sci USA 111(48):17330–5. https://doi.org/10.1073/pnas.1411932111

Shankar P, Nishikawa M, Shibata T (2015) Adaptive responses limited by intrinsic noise. PLoS ONE 10(8):e0136095. https://doi.org/10.1371/journal.pone.0136095

Shibata T, Fujimoto K (2005) Noisy signal amplification in ultrasensitive signal transduction. Proc Natl Acad Sci USA 102(2):331–6. https://doi.org/10.1073/pnas.0403350102

Shimizu TS, Tu Y, Berg HC (2010) A modular gradient-sensing network for chemotaxis in Escherichia coli revealed by responses to time-varying stimuli. Mol Syst Biol 6(1):382. https://doi.org/10.1038/msb.2010.37

Shoval O, Goentoro L, Hart Y, Mayo A, Sontag E, Alon U (2010) Fold-change detection and scalar symmetry of sensory input fields. Proc Natl Acad Sci USA 107(36):15995–6000. https://doi.org/10.1073/pnas.1002352107

Silva-Rocha R, de Lorenzo V (2011) A composite feed-forward loop I4-FFL involving IHF and Crc stabilizes expression of the XylR regulator of Pseudomonas putida mt-2 from growth phase perturbations. Mol BioSyst 7(11):2982–90. https://doi.org/10.1039/c1mb05264k

Simpson ML, Cox CD, Sayler GS (2003) Frequency domain analysis of noise in autoregulated gene circuits. Proc Natl Acad Sci USA 100(8):4551–6. https://doi.org/10.1073/pnas.0736140100

Sontag ED (2003) Adaptation and regulation with signal detection implies internal model. Syst Control Lett 50(2):119–126. https://doi.org/10.1016/S0167-6911(03)00136-1

Stein G (2003) Respect the unstable. IEEE Control Syst Mag 23(4):12–25. https://doi.org/10.1109/MCS.2003.1213600

Sung HK, Hara S (1988) Properties of sensitivity and complementary sensitivity functions in single-input single-output digital control systems. Int J Control 48(6):2429–2439. https://doi.org/10.1080/00207178808906338

Szegö G (1915) Ein Grenzwertsatz über die Toeplitzschen Determinanten einer reellen positiven Funktion. Math Ann 76(4):490–503. https://doi.org/10.1007/BF01458220

Takeda K, Shao D, Adler M, Charest PG, Loomis WF, Levine H, Groisman A, Rappel WJ, Firtel RA (2012) Incoherent feedforward control governs adaptation of activated Ras in a eukaryotic chemotaxis pathway. Sci Signal 5(205):ra2. https://doi.org/10.1126/scisignal.2002413

Wan N, Li D, Hovakimyan N (2020) A simplified approach to analyze complementary sensitivity tradeoffs in continuous-time and discrete-time systems. IEEE Trans Autom Control 65(4):1697–1703. https://doi.org/10.1109/TAC.2019.2934010

Yi TM, Huang Y, Simon MI, Doyle J (2000) Robust perfect adaptation in bacterial chemotaxis through integral feedback control. Proc Natl Acad Sci USA 97(9):4649–53. https://doi.org/10.1073/pnas.97.9.4649

Zang G, Iglesias PA (2003) Nonlinear extension of Bode’s integral based on an information-theoretic interpretation. Syst Control Lett 50(1):11–19. https://doi.org/10.1016/S0167-6911(03)00119-1

Acknowledgements

This review arose as a consequence of a planned workshop on “Mathematical Models in Biology: from Information Theory to Thermodynamics” that was to be held at the Banff International Research Station (BIRS) during July 2020. Alas, COVID-19 prevented us from meeting in person, and the conference was only virtual. Nevertheless, we thank BIRS and the organizers for their willingness to host us.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by Brian Ingalls.

This work was supported by DARPA under Contract No. HR0011-16-C-0139.

About this article

Cite this article

Biswas, D., Iglesias, P.A. Sensitivity minimization, biological homeostasis and information theory. Biol Cybern 115, 103–113 (2021). https://doi.org/10.1007/s00422-021-00860-2

DOI: https://doi.org/10.1007/s00422-021-00860-2