Abstract

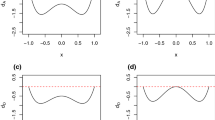

We study approximate K-optimal designs for various regression models by minimizing the condition number of the information matrix. This minimizes the error sensitivity in the computation of the least squares estimator of the regression parameters and also avoids multicollinearity in regression. Using matrix and optimization theory, we derive several theoretical results for K-optimal designs, including the convexity of the K-optimality criterion, lower bounds on the condition number, and symmetry properties of K-optimal designs. A general numerical method is developed to find K-optimal designs for any regression model on a discrete design space. In addition, specific results are obtained for polynomial, trigonometric, and second-order response models.
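As a minimal illustration of the K-optimality criterion (not the paper's numerical method), the sketch below takes the simple linear model with regressor vector \(f(x)=(1,x)^\top\) on the hypothetical three-point design space \(\{-1,0,1\}\). It computes the condition number \(\lambda_1/\lambda_q\) of \(\mathbf{A}(\mathbf{w})=\sum_i w_i f(x_i)f^\top(x_i)\) in closed form and minimizes it by brute-force grid search over the weights. The model, the design space, and the grid search are our assumptions, chosen only to keep the example dependency-free.

```python
import math

# Illustrative assumptions: model y = theta0 + theta1 * x, i.e. f(x) = (1, x),
# on the hypothetical design space {-1, 0, 1}.


def info_matrix(weights, points):
    """Entries (a, b, c) of A(w) = [[a, b], [b, c]] = sum_i w_i f(x_i) f(x_i)^T."""
    a = sum(weights)
    b = sum(w * x for w, x in zip(weights, points))
    c = sum(w * x * x for w, x in zip(weights, points))
    return a, b, c


def condition_number(weights, points):
    """lambda_1 / lambda_q of A(w), using the closed-form 2x2 eigenvalues."""
    a, b, c = info_matrix(weights, points)
    mid = (a + c) / 2
    rad = math.hypot((a - c) / 2, b)
    lam_1, lam_q = mid + rad, mid - rad
    # A singular information matrix has an infinite condition number.
    return math.inf if lam_q <= 1e-12 else lam_1 / lam_q


def grid_search_k_optimal(points, steps=100):
    """Brute-force search over weight vectors on a 3-point space, grid step 1/steps."""
    best_kappa, best_w = math.inf, None
    for i in range(steps + 1):
        for j in range(steps + 1 - i):
            w = (i / steps, j / steps, (steps - i - j) / steps)
            kappa = condition_number(w, points)
            if kappa < best_kappa:
                best_kappa, best_w = kappa, w
    return best_kappa, best_w


kappa, w = grid_search_k_optimal([-1.0, 0.0, 1.0])
print(kappa, w)  # 1.0 (0.5, 0.0, 0.5)
```

On this design space the search returns equal weights on \(\pm 1\), where \(\mathbf{A}(\mathbf{w})=\mathbf{I}_2\) and the condition number attains its smallest possible value, 1. The number of grid points grows combinatorially with the number of support points, which is why a convex-programming formulation is preferable for realistic design spaces.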

References

Berger MPF, Wong WK (2009) An introduction to optimal designs for social and biomedical research. Wiley, New York

Boyd S, Vandenberghe L (2004) Convex optimization. Cambridge University Press, New York

Bretz F, Dette H, Pinheiro JC (2010) Practical considerations for optimal designs in clinical dose finding studies. Stat Med 29:731–742

Charnes A, Cooper WW (1962) Programming with linear fractional functionals. Naval Res Logist Q 9:181–186

Dean A, Morris M, Stufken J, Bingham D (2015) Handbook of design and analysis of experiments. CRC Press, Boca Raton

Dette H, Melas VB, Shpilev P (2007) Optimal designs for estimating the coefficients of the lower frequencies in trigonometric regression models. Ann Inst Stat Math 59:655–673

Fedorov VV (1972) Theory of optimal experiments. Academic Press, New York

Frenk H, Schaible S (2009) Fractional programming. In: Floudas CA, Pardalos PM (eds) Encyclopedia of optimization, 2nd edn. Springer, Boston, pp 1080–1091

Gao LL, Zhou J (2020) Minimax D-optimal designs for multivariate regression models with multi-factors. J Stat Plan Inference 209:160–173

Grant MC, Boyd SP (2013) The CVX Users Guide, Release 2.0 (beta). CVX Research, Inc., Stanford University, Stanford

Horn RA, Johnson CR (2013) Matrix analysis, 2nd edn. Cambridge University Press, New York

Kiefer J (1959) Optimum experimental designs. J R Stat Soc B 21:272–319

Kiefer J (1974) General equivalence theory for optimum designs (approximate theory). Ann Stat 2:849–887

Lu Z, Pong TK (2011) Minimizing condition number via convex programming. SIAM J Matrix Anal Appl 32:1193–1211

Mangasarian OL (1994) Nonlinear programming. Society for Industrial and Applied Mathematics, Philadelphia

Pukelsheim F (2006) Optimal design of experiments. Society for Industrial and Applied Mathematics, Philadelphia

Rempel MF, Zhou J (2014) On exact K-optimal designs minimizing the condition number. Commun Stat 43:1114–1131

Wong WK, Zhou J (2019) CVX based algorithms for constructing various optimal regression designs. Can J Stat 47:374–391

Ye JJ, Zhou J (2013) Minimizing the condition number to construct design points for polynomial regression models. SIAM J Optim 23:666–686

Acknowledgements

The authors thank the Editors and referees for their helpful comments and suggestions to improve the presentation of this article. The authors also thank Professor Jane J. Ye for discussion on the existence of a solution in Sect. 2.1. This research work was supported by Discovery Grants from the Natural Sciences and Engineering Research Council of Canada.

Appendix: Proofs

Proof of Theorem 1:

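For any two weight vectors \(\mathbf{w}_1\) and \(\mathbf{w}_2\), and \(\delta \in [0, 1]\), the linearity of \(\mathbf{A}(\mathbf{w})\) in \(\mathbf{w}\) gives

\[
\lambda _1\big (\delta \mathbf{w}_1+(1-\delta )\mathbf{w}_2\big )=\lambda _1\big (\delta \mathbf{A}(\mathbf{w}_1)+(1-\delta )\mathbf{A}(\mathbf{w}_2)\big )\le \delta \lambda _1(\mathbf{w}_1)+(1-\delta )\lambda _1(\mathbf{w}_2),
\]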

where the inequality is from Weyl’s Theorem, see, e.g., Horn and Johnson (2013, p. 239). Thus, using the result of Boyd and Vandenberghe (2004, p. 67), \(\lambda _1( \mathbf{w})\) is a convex function of \(\mathbf{w}\). Similarly, it can be proved that \(\lambda _q( \mathbf{w})\) is a concave function of \(\mathbf{w}\). \(\square \)
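Theorem 1 can be spot-checked numerically. The sketch below assumes the illustrative two-parameter model \(f(x)=(1,x)^\top\) on an arbitrary set of design points (not a model from the paper), draws random pairs of weight vectors and a random \(\delta\), and counts how often \(\lambda_1\) violates convexity or \(\lambda_q\) violates concavity along the segment. A finite check of this kind only illustrates the theorem and is no substitute for the proof.

```python
import math
import random


def extreme_eigenvalues(weights, points):
    """(lambda_1, lambda_q) of A(w) = sum_i w_i f(x_i) f(x_i)^T with f(x) = (1, x)."""
    a = sum(weights)
    b = sum(w * x for w, x in zip(weights, points))
    c = sum(w * x * x for w, x in zip(weights, points))
    mid, rad = (a + c) / 2, math.hypot((a - c) / 2, b)
    return mid + rad, mid - rad


def random_weights(n, rng):
    """A random point of the probability simplex in R^n."""
    raw = [rng.random() for _ in range(n)]
    total = sum(raw)
    return [r / total for r in raw]


def count_violations(points, trials=1000, seed=0, tol=1e-12):
    """Count sampled segments where lambda_1 is not convex or lambda_q is not concave."""
    rng = random.Random(seed)
    violations = 0
    for _ in range(trials):
        w1 = random_weights(len(points), rng)
        w2 = random_weights(len(points), rng)
        d = rng.random()
        w_d = [d * u + (1 - d) * v for u, v in zip(w1, w2)]
        max1, min1 = extreme_eigenvalues(w1, points)
        max2, min2 = extreme_eigenvalues(w2, points)
        max_d, min_d = extreme_eigenvalues(w_d, points)
        if max_d > d * max1 + (1 - d) * max2 + tol:  # convexity of lambda_1
            violations += 1
        if min_d < d * min1 + (1 - d) * min2 - tol:  # concavity of lambda_q
            violations += 1
    return violations


print(count_violations([-1.0, -0.3, 0.2, 0.8, 1.0]))  # 0
```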

Proof of Theorem 2:

Firstly, we show by contradiction that \(\lambda _q(\mathbf{v}^*)=1\) if \(\mathbf{v}^*\) is a solution to (8). Since the constraints in (8) require \(\lambda _q(\mathbf{v}^*) \ge 1\), assume that \(\mathbf{v}^*\) is a solution to (8) and \(\lambda _q(\mathbf{v}^*)=a_0 >1\). Let \(\tilde{\mathbf{v}}^*=\frac{1}{a_0} \mathbf{v}^*\). Then, \(\tilde{\mathbf{v}}^* \ge 0\), \(\lambda _q(\tilde{\mathbf{v}}^*)=\lambda _q\left( \frac{1}{a_0} \mathbf{v}^*\right) =\frac{1}{a_0} \lambda _q(\mathbf{v}^*) =1\), and \(\lambda _1(\tilde{\mathbf{v}}^*) = \lambda _1\left( \frac{1}{a_0} \mathbf{v}^*\right) =\frac{1}{a_0} \lambda _1(\mathbf{v}^*) < \lambda _1(\mathbf{v}^*)\), as \(a_0>1\) and \(\lambda _1(\mathbf{v}^*) \ge \lambda _q(\mathbf{v}^*) >0\). Hence \(\tilde{\mathbf{v}}^*\) satisfies the constraints in (8) and has a smaller objective value than \(\mathbf{v}^*\), so \(\mathbf{v}^*\) is not a solution to (8), a contradiction.
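The contradiction rests on the fact that \(\mathbf{A}(c\mathbf{v})=c\,\mathbf{A}(\mathbf{v})\), so every eigenvalue scales by \(c>0\) while the ratio \(\lambda_1/\lambda_q\) is unchanged. A small sketch, again assuming the illustrative model \(f(x)=(1,x)^\top\) and a hand-picked vector \(\mathbf{v}\) (neither is from the paper), applies the rescaling \(\tilde{\mathbf{v}}^*=\mathbf{v}^*/a_0\) and confirms that the smallest eigenvalue drops to 1 while the largest one shrinks.

```python
import math


def extreme_eigenvalues(v, points):
    """(lambda_1, lambda_q) of A(v) = sum_i v_i f(x_i) f(x_i)^T with f(x) = (1, x)."""
    a = sum(v)
    b = sum(vi * x for vi, x in zip(v, points))
    c = sum(vi * x * x for vi, x in zip(v, points))
    mid, rad = (a + c) / 2, math.hypot((a - c) / 2, b)
    return mid + rad, mid - rad


points = [-1.0, 0.0, 1.0]
v = [3.0, 1.0, 2.0]  # hypothetical v >= 0 with lambda_q(v) > 1
lam_1, a0 = extreme_eigenvalues(v, points)
assert a0 > 1  # satisfies the constraints used in the proof, with slack

v_tilde = [vi / a0 for vi in v]  # the rescaling from the proof
lam_1_t, lam_q_t = extreme_eigenvalues(v_tilde, points)
print(round(lam_q_t, 12), lam_1_t < lam_1, math.isclose(lam_1_t / lam_q_t, lam_1 / a0))
# 1.0 True True
```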

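Secondly, we show that if \(\mathbf{v}^*\) is a solution to (8) then \(\mathbf{w}^*=\frac{1}{\gamma } \mathbf{v}^*\) is a solution to (7), where \(\gamma =\sum _{i=1}^N v_i^*\). Clearly, \(\mathbf{w}^*\) satisfies the constraints in (7). Also, since \(\lambda _q(\mathbf{v}^*)=1\) by the first step,

\[
\frac{\lambda _1(\mathbf{w}^*)}{\lambda _q(\mathbf{w}^*)}=\frac{\lambda _1(\mathbf{v}^*)/\gamma }{\lambda _q(\mathbf{v}^*)/\gamma }=\lambda _1(\mathbf{v}^*).
\]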

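For any weight vector \(\mathbf{w}\) with \(\lambda _q(\mathbf{w}) \ne 0\),

\[
\frac{\lambda _1(\mathbf{w})}{\lambda _q(\mathbf{w})}=\lambda _1\left( \frac{1}{\lambda _q(\mathbf{w})}\mathbf{w}\right) \ge \lambda _1(\mathbf{v}^*)=\frac{\lambda _1(\mathbf{w}^*)}{\lambda _q(\mathbf{w}^*)},
\]

where the inequality follows from the fact that \(\frac{1}{\lambda _q(\mathbf{w}) } \mathbf{w}\) satisfies the constraints in (8) and \(\mathbf{v}^*\) is a solution to (8). Thus, \(\mathbf{w}^*\) is a solution to (7).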

Thirdly, we show that if \(\mathbf{w}^*\) is a solution to (7) then \(\mathbf{v}^*=\frac{1}{\lambda _q(\mathbf{w}^*) } \mathbf{w}^*\) is a solution to (8). It is easy to verify that \(\mathbf{v}^*\) satisfies the constraints in (8) with \(\lambda _q(\mathbf{v}^*)=1\). For any vector \(\mathbf{v}\) satisfying the constraints in (8), define \(\mathbf{w}=\frac{1}{\sum _{i=1}^N v_i} \mathbf{v}\). Notice that \(\mathbf{w}\) satisfies the constraints in (7), and from the facts that \(\lambda _q(\mathbf{v}) \ge 1\) and \(\mathbf{w}^*\) is a solution to (7), it follows that

\[
\lambda _1(\mathbf{v}) \ge \frac{\lambda _1(\mathbf{v})}{\lambda _q(\mathbf{v})}=\frac{\lambda _1(\mathbf{w})}{\lambda _q(\mathbf{w})} \ge \frac{\lambda _1(\mathbf{w}^*)}{\lambda _q(\mathbf{w}^*)}=\lambda _1(\mathbf{v}^*).
\]

Thus, \(\mathbf{v}^*\) is a solution to (8). \(\square \)
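The two maps used in the second and third steps, \(\mathbf{v}=\mathbf{w}/\lambda_q(\mathbf{w})\) and \(\mathbf{w}=\mathbf{v}/\sum_i v_i\), can be exercised directly. The sketch below uses the same illustrative model \(f(x)=(1,x)^\top\) with made-up design points and weights. It checks that mapping a design into (8) gives \(\lambda_q=1\) and an objective value equal to the condition number of the design, and that normalizing maps it back. The construction resembles the Charnes and Cooper (1962) transformation for fractional programs listed in the references.

```python
import math


def extreme_eigenvalues(v, points):
    """(lambda_1, lambda_q) of A(v) = sum_i v_i f(x_i) f(x_i)^T with f(x) = (1, x)."""
    a = sum(v)
    b = sum(vi * x for vi, x in zip(v, points))
    c = sum(vi * x * x for vi, x in zip(v, points))
    mid, rad = (a + c) / 2, math.hypot((a - c) / 2, b)
    return mid + rad, mid - rad


def design_to_v(w, points):
    """Map a design w (w >= 0, sum = 1, lambda_q(w) > 0) to v = w / lambda_q(w)."""
    _, lam_q = extreme_eigenvalues(w, points)
    return [wi / lam_q for wi in w]


def v_to_design(v):
    """Map v (v >= 0, lambda_q(v) >= 1) back to the design w = v / sum(v)."""
    gamma = sum(v)
    return [vi / gamma for vi in v]


points = [-1.0, -0.5, 0.0, 0.5, 1.0]
w = [0.1, 0.3, 0.2, 0.15, 0.25]  # hypothetical design weights
lam_1_w, lam_q_w = extreme_eigenvalues(w, points)
v = design_to_v(w, points)
lam_1_v, lam_q_v = extreme_eigenvalues(v, points)
w_back = v_to_design(v)

print(math.isclose(lam_q_v, 1.0),                # v meets lambda_q(v) >= 1 with equality
      math.isclose(lam_1_v, lam_1_w / lam_q_w),  # objective of (8) = condition number
      all(math.isclose(x, y) for x, y in zip(w, w_back)))  # round trip recovers w
```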

Proof of Theorem 3:

For any \(\delta \in [0,1]\),

\(\square \)

Proof of Theorem 4:

For model (11), the diagonal entries of the information matrix satisfy

For any \(a_{2r,2r} \in [0,1]\), it is obvious that

From (15), the condition number of the information matrix satisfies, for \(k \ge 1\),

When \(\mathbf{A}(\mathbf{w})=1 \oplus 0.5 \mathbf{I}_{2k}\), its eigenvalues are \(1, 0.5, \ldots , 0.5\). Thus, its condition number is 2 and the lower bound is achieved. \(\square \)
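One way to see that the bound is attainable: for the order-\(k\) trigonometric model, uniform weights on \(N \ge 2k+1\) equally spaced points in \([0, 2\pi)\) give exactly \(\mathbf{A}(\mathbf{w})=1 \oplus 0.5\,\mathbf{I}_{2k}\), because the discrete averages of \(\cos(mx)\) and \(\sin(mx)\) over such points vanish for \(1 \le m \le 2k\). The sketch below assumes that model (11) has regressor vector \((1,\cos x,\sin x,\ldots,\cos kx,\sin kx)^\top\) in this order and that these points belong to the design space; both are assumptions for illustration. It builds \(\mathbf{A}(\mathbf{w})\), confirms that the off-diagonal entries are zero up to rounding, and reads the condition number off the diagonal.

```python
import math


def trig_regressors(x, k):
    """Assumed regressor vector (1, cos x, sin x, ..., cos kx, sin kx)."""
    f = [1.0]
    for r in range(1, k + 1):
        f += [math.cos(r * x), math.sin(r * x)]
    return f


def information_matrix(points, weights, k):
    """A(w) = sum_i w_i f(x_i) f(x_i)^T as a nested list."""
    p = 2 * k + 1
    A = [[0.0] * p for _ in range(p)]
    for x, w in zip(points, weights):
        f = trig_regressors(x, k)
        for i in range(p):
            for j in range(p):
                A[i][j] += w * f[i] * f[j]
    return A


k, N = 3, 8  # the uniform design needs N >= 2k + 1 points
points = [2 * math.pi * j / N for j in range(N)]
A = information_matrix(points, [1.0 / N] * N, k)

p = 2 * k + 1
off_diag = max(abs(A[i][j]) for i in range(p) for j in range(p) if i != j)
diag = [A[i][i] for i in range(p)]
# A is diagonal up to rounding, so its eigenvalues are its diagonal entries.
print(off_diag < 1e-12, round(max(diag) / min(diag), 12))  # True 2.0
```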

Proof of Theorem 5:

Since \(S_N\) is symmetric, for each \(\mathbf{u}_i\) we have \(T\mathbf{u}_i=\mathbf{u}_j\) for some \(1 \le j \le N\), and \(T\mathbf{u}_j=\mathbf{u}_i\) because \(T(T\mathbf{u}_i)=\mathbf{u}_i\). From the definition of \(\xi ^T(\mathbf{w})\) in (19) and (20), it follows that \(w_{i,T}=w_j\) and \(w_{j,T}=w_i\) for every such pair \((i, j)\). In \(\mathbf{w}_{0.5}=0.5 \mathbf{w} + 0.5 \mathbf{w}_T\), the ith entry is \(0.5w_i+0.5w_{i,T}=0.5w_i+0.5w_j\) and the jth entry is \(0.5w_j+0.5w_{j,T}=0.5w_j+0.5w_i\). Thus, the weights in \(\mathbf{w}_{0.5}\) for \(\mathbf{u}_i\) and \(\mathbf{u}_j\) are equal, which implies that \(\xi (\mathbf{w}_{0.5})\) is symmetric with respect to the transformation T.

For transformation \(T=T_1\) in (18), the information matrix for \(\xi ^T(\mathbf{w})\) is \(\mathbf{A}(\mathbf{w}_T) =\sum _{i=1}^N w_i \mathbf{f}(T\mathbf{u}_i) \mathbf{f}^\top (T\mathbf{u}_i) =\sum _{i=1}^N w_i \mathbf{Q} \mathbf{f}(\mathbf{u}_i) \mathbf{f}^\top (\mathbf{u}_i)\mathbf{Q}^\top = \mathbf{Q} \mathbf{A}(\mathbf{w}) \mathbf{Q}^\top \), where \(\mathbf{Q}\) is the diagonal matrix with diagonal entries \(1, 1, 1, -1, 1, -1\) for model (16) with \(k=2\). Since \(\mathbf{Q}^{-1}= \mathbf{Q}^\top \), the matrices \(\mathbf{A}(\mathbf{w}_T)\) and \(\mathbf{A}(\mathbf{w})\) are similar, so they have the same eigenvalues and hence the same condition number. Using the result in Theorem 3 then gives \(\phi (\mathbf{w}_{0.5}) \le \phi (\mathbf{w})\). The results for \(T_2\) and \(T_3\) in (18) can be proved similarly. \(\square \)
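The symmetrization argument can be illustrated in the simplest setting where it applies. The sketch below assumes the two-parameter model \(f(x)=(1,x)^\top\) on the symmetric design space \(\{-1,-0.5,0,0.5,1\}\) with the reflection \(T(u)=-u\), for which \(f(Tu)=\mathbf{Q}f(u)\) with \(\mathbf{Q}=\mathrm{diag}(1,-1)\). This is a stand-in chosen for illustration, not a reproduction of the transformations \(T_1, T_2, T_3\) in (18). It forms \(\mathbf{w}_T\) and \(\mathbf{w}_{0.5}\), then checks that \(\mathbf{w}_T\) has the same condition number as \(\mathbf{w}\) and that symmetrizing does not increase it.

```python
import math


def condition_number(weights, points):
    """lambda_1 / lambda_q of A(w) for f(x) = (1, x), via closed-form 2x2 eigenvalues."""
    a = sum(weights)
    b = sum(w * x for w, x in zip(weights, points))
    c = sum(w * x * x for w, x in zip(weights, points))
    mid, rad = (a + c) / 2, math.hypot((a - c) / 2, b)
    return (mid + rad) / (mid - rad)


def reflect_weights(weights, points):
    """w_T: point u_i receives the weight w_j of the point u_j = T(u_i) = -u_i."""
    index = {x: i for i, x in enumerate(points)}
    return [weights[index[-x]] for x in points]


points = [-1.0, -0.5, 0.0, 0.5, 1.0]  # symmetric under T(u) = -u
w = [0.05, 0.1, 0.2, 0.25, 0.4]       # hypothetical, deliberately asymmetric design
w_T = reflect_weights(w, points)
w_half = [0.5 * a + 0.5 * b for a, b in zip(w, w_T)]

print(math.isclose(condition_number(w_T, points), condition_number(w, points)),
      condition_number(w_half, points) <= condition_number(w, points),
      w_half == w_half[::-1])  # True True True
```

Here \(\mathbf{A}(\mathbf{w}_T)=\mathbf{Q}\mathbf{A}(\mathbf{w})\mathbf{Q}^\top\) only flips the sign of the off-diagonal entry, and averaging the two designs cancels it.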

About this article

Cite this article

Yue, Z., Zhang, X., Driessche, P.v.d. et al. Constructing K-optimal designs for regression models. Stat Papers 64, 205–226 (2023). https://doi.org/10.1007/s00362-022-01317-9

DOI: https://doi.org/10.1007/s00362-022-01317-9

Keywords

- Optimal regression design

- Fourier regression

- Condition number

- Convex optimization

- Matrix norm

- Second-order response model