Abstract

Multi-fidelity approaches combine models built on a scarce but accurate dataset (the high-fidelity dataset) and a large but approximate one (the low-fidelity dataset) in order to improve prediction accuracy. Gaussian processes (GPs) are a popular way to capture the correlations between these fidelity levels. Deep Gaussian processes (DGPs), which are functional compositions of GPs, have also been adapted to the multi-fidelity setting through the multi-fidelity deep Gaussian process (MF-DGP) model. Compared to GPs, this model is more expressive because it accounts for non-linear correlations between fidelities within a Bayesian framework. However, these multi-fidelity methods only handle the case where the inputs of the different fidelity models are defined over the same domain (e.g., same variables, same dimensions). In practice, because the low-fidelity model is a simplification, some variables may be omitted or a different parametrization may be used compared to the high-fidelity model. In this paper, MF-DGP is extended to the case where a different parametrization is used for each fidelity. The performance of the proposed multi-fidelity modeling technique is assessed on analytical test cases and on real structural and aerodynamic physical problems.

References

Amari S-I, Douglas SC (1998) Why natural gradient? In: Proceedings of the 1998 IEEE international conference on acoustics, speech and signal processing, ICASSP’98 (Cat. No. 98CH36181), vol 2. IEEE, pp 1213–1216

Armstrong JS, Collopy F (1992) Error measures for generalizing about forecasting methods: empirical comparisons. Int J Forecast 8(1):69–80

Bandler JW, Cheng QS, Dakroury SA, Mohamed AS, Bakr MH, Madsen K, Sondergaard J (2004) Space mapping: the state of the art. IEEE Transactions on Microwave theory and techniques 52(1):337–361

Bandler JW, Koziel S, Madsen K (2006) Space mapping for engineering optimization. SIAG/Optimization Views-and-News Special Issue on Surrogate/Derivative-free Optimization 17(1):19–26

Bauchau OA, Craig JI (2009) Euler-Bernoulli beam theory. In: Structural analysis. Springer, pp 173–221

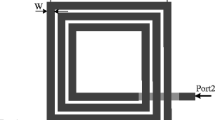

Bekasiewicz A, Koziel S (2015) Efficient multi-fidelity design optimization of microwave filters using adjoint sensitivity. International Journal of RF and Microwave Computer-Aided Engineering 25(2):178–183

Berci M, Toropov VV, Hewson RW, Gaskell PH (2014) Multidisciplinary multifidelity optimisation of a flexible wing aerofoil with reference to a small uav. Struct Multidiscip Optim 50(4):683–699

Bjornstad JF (1990) Predictive likelihood: a review. Stat Sci 5(2):242–254

Brevault L, Balesdent M, Hebbal A (2020) Multi-objective multidisciplinary design optimization approach for partially reusable launch vehicle design. J Spacecr Rocket:1–17

Brooks CJ, Forrester AIJ, Keane AJ, Shahpar S (2011) Multi-fidelity design optimisation of a transonic compressor rotor

Cutajar K, Pullin M, Damianou A, Lawrence N, González J (2019) Deep gaussian processes for multi-fidelity modeling. arXiv:1903.07320

Damianou A, Lawrence N (2013) Deep gaussian processes. In: Artificial intelligence and statistics, pp 207–215

Matthews AGG, van der Wilk M, Nickson T, Fujii K, Boukouvalas A, León-Villagrá P, Ghahramani Z, Hensman J (2017) GPflow: a Gaussian process library using TensorFlow. J Mach Learn Res 18(40):1–6

Dhondt G (2017) Calculix crunchix user’s manual version 2.12. http://www.dhondt.de/ccx, 2

Dong H, Song B, Wang P, Huang S (2015) Multi-fidelity information fusion based on prediction of kriging. Struct Multidiscip Optim 51(6):1267–1280

Forrester A, Sobester A, Keane A (2008) Engineering design via surrogate modelling: a practical guide. Wiley, New York

Giselle Fernández-Godino M, Park C, Kim N-H, Haftka RT (2016) Review of multi-fidelity models. arXiv:1609.07196

Gloudemans J, Davis P, Gelhausen P (1996) A rapid geometry modeler for conceptual aircraft. In: 34th aerospace sciences meeting and exhibit, p 52

Gratiet LL, Garnier J (2014) Recursive co-kriging model for design of computer experiments with multiple levels of fidelity. International Journal for Uncertainty Quantification 4(5)

Hao P, Feng S, Li Y, Bo W, Chen H (2020) Adaptive infill sampling criterion for multi-fidelity gradient-enhanced kriging model. Struct Multidiscip Optim 62:1–21

Hebbal A, Brevault L, Balesdent M, Talbi E-G, Melab N (2019) Multi-fidelity modeling using dgps: improvements and a generalization to varying input space dimensions

Iyappan P, Ganguli R (2020) Multi-fidelity analysis and uncertainty quantification of beam vibration using correction response surfaces. International Journal for Computational Methods in Engineering Science and Mechanics 21(1):26–42

Jensen JLWV, et al. (1906) Sur les fonctions convexes et les inégalités entre les valeurs moyennes. Acta Mathematica 30:175–193

Jin R, Chen W, Simpson TW (2001) Comparative studies of metamodelling techniques under multiple modelling criteria. Struct Multidiscip Optim 23(1):1–13

Jones DR, Schonlau M, Welch WJ (1998) Efficient global optimization of expensive black-box functions. J Glob Optim 13(4):455–492

Jonsson IM, Leifsson L, Koziel S, Tesfahunegn YA, Bekasiewicz A (2015) Shape optimization of trawl-doors using variable-fidelity models and space mapping. In: ICCS, pp 905–913

Kennedy MC, O’Hagan A (2001) Bayesian calibration of computer models. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 63(3):425–464

Kim HS, Koc M, Ni J (2007) A hybrid multi-fidelity approach to the optimal design of warm forming processes using a knowledge-based artificial neural network. Int J Mach Tools Manuf 47(2):211–222

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv:1412.6980

Koziel S (2010) Computationally efficient multi-fidelity multi-grid design optimization of microwave structures. ACES Journal-Applied Computational Electromagnetics Society 25(7):578

Kullback S, Leibler RA (1951) On information and sufficiency. The Annals of Mathematical Statistics 22(1):79–86

Kuya Y, Takeda K, Zhang X, Forrester AIJ (2011) Multifidelity surrogate modeling of experimental and computational aerodynamic data sets. AIAA J 49(2):289–298

Li W, Chen S, Jiang Z, Apley DW, Lu Z, Chen W (2016) Integrating bayesian calibration, bias correction, and machine learning for the 2014 sandia verification and validation challenge problem. Journal of Verification, Validation and Uncertainty Quantification 1(1)

Liu Z, Xu H, Zhu P (2020) An adaptive multi-fidelity approach for design optimization of mesostructure-structure systems. Struct Multidiscip Optim 62:1–12

Minisci E, Vasile M (2013) Robust design of a reentry unmanned space vehicle by multifidelity evolution control. AIAA J 51(6):1284–1295

Paleyes A, Pullin M, Mahsereci M, Lawrence N, González J (2019) Emulation of physical processes with emukit. In: Second workshop on machine learning and the physical sciences, NeurIPS

Park C, Haftka RT, Kim NH (2017) Remarks on multi-fidelity surrogates. Struct Multidiscip Optim 55(3):1029–1050

Peherstorfer B, Willcox K, Gunzburger M (2018) Survey of multifidelity methods in uncertainty propagation, inference, and optimization. SIAM Rev 60(3):550–591

Perdikaris P, Raissi M, Damianou A, Lawrence ND, Karniadakis GE (2017) Nonlinear information fusion algorithms for data-efficient multi-fidelity modelling. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences 473(2198):20160751

Raissi M, Karniadakis G (2016) Deep multi-fidelity gaussian processes. arXiv:1604.07484

Rasmussen CE, Williams CKI (2006) Gaussian processes for machine learning, vol 1. MIT Press, Cambridge

Rayas-Sanchez J (2016) Power in simplicity with asm: tracing the aggressive space mapping algorithm over two decades of development and engineering applications. IEEE Microw Mag 17(4):64–76

Rayas-Sánchez JE (2004) Em-based optimization of microwave circuits using artificial neural networks: the state-of-the-art. IEEE Transactions on Microwave Theory and Techniques 52(1):420–435

Reddy JN (2019) Introduction to the Finite Element Method, Fourth Edition. McGraw-Hill Education. New York

Reeve ST, Strachan A (2017) Error correction in multi-fidelity molecular dynamics simulations using functional uncertainty quantification. J Comput Phys 334:207–220

Robinson TD, Eldred MS, Willcox KE, Haimes R (2008) Surrogate-based optimization using multifidelity models with variable parameterization and corrected space mapping. AIAA J 46(11):2814–2822

Salimbeni H, Deisenroth M (2017) Doubly stochastic variational inference for deep gaussian processes. In: Advances in neural information processing systems, pp 4588–4599

Shah H, Hosder S, Koziel S, Tesfahunegn YA, Leifsson L (2015) Multi-fidelity robust aerodynamic design optimization under mixed uncertainty. Aerosp Sci Technol 45:17–29

Shen X, Hu W, Dong S (2019) Multidisciplinary and multifidelity optimization for twin-web turbine disc with asymmetric temperature distribution. Struct Multidiscip Optim 60(2):803–816

Shi M, Lv L, Sun W, Song X (2020) A multi-fidelity surrogate model based on support vector regression. Struct Multidiscip Optim 61:1–13

Song X, Lv L, Sun W, Zhang J (2019) A radial basis function-based multi-fidelity surrogate model: exploring correlation between high-fidelity and low-fidelity models. Struct Multidiscip Optim 60(3):965–981

Stander JN, Venter G, Kamper MJ (2016) High fidelity multidisciplinary design optimisation of an electromagnetic device. Struct Multidiscip Optim 53(5):1113–1127

Tao S, Apley DW, Chen W, Garbo A, Pate DJ, German BJ (2019) Input mapping for model calibration with application to wing aerodynamics. AIAA J 57:2734–2745

Vitali R, Haftka RT, Sankar BV (2002) Multi-fidelity design of stiffened composite panel with a crack. Struct Multidiscip Optim 23(5):347–356

Wang G, Shan S (2007) Review of metamodeling techniques in support of engineering design optimization. J Mech Des 129(4):370–380

Wang F, Xiong F, Chen S, Song J (2019) Multi-fidelity uncertainty propagation using polynomial chaos and gaussian process modeling. Struct Multidiscip Optim 60(4):1583–1604

Xiong S, Qian PZG, Wu CFJ (2013) Sequential design and analysis of high-accuracy and low-accuracy computer codes. Technometrics 55(1):37–46

Yi J, Wu F, Qi Z, Cheng Y, Ling H, Liu J (2020) An active-learning method based on multi-fidelity kriging model for structural reliability analysis. Struct Multidiscip Optim 63:1–23

Acknowledgments

The experiments presented in this paper were carried out using the Grid’5000 testbed, supported by a scientific interest group hosted by Inria and including CNRS, RENATER, and several universities as well as other organizations (see https://www.grid5000.fr).

Funding

The work of Ali Hebbal is funded by ONERA - The French Aerospace Lab and the University of Lille through a PhD thesis. This work is also part of two projects (HERACLES and MUFIN) funded by ONERA.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Replication of results

The details of the numerical setup, including the settings used for the different parameters, are presented in Appendix 3. The definitions of the analytic multi-fidelity problems used in the experiments are presented in the Experimental section. For the structural problem, the analytic equation of the low-fidelity model is given in the presentation of the problem, and the high-fidelity model is a finite element (FE) analysis performed with the Calculix solver. For the aerodynamic problem, the finite element model and the data are restricted and cannot be shared. The implementation of MF-DGP-EM is available on request from the corresponding author. A GitHub repository featuring MF-DGP-EM and the analytic benchmark will be available after the publication of the paper.

Additional information

Responsible Editor: Felipe A. C. Viana

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Derivation of the evidence lower bound of MF-DGP-EM

By marginalizing the latent variable, the log evidence of the model \(\log p\left (\{y^{t}\}_{t=1}^{s},\{X^{t}_{t-1}\}_{t=2}^{s}|\{X^{t}\}_{t=1}^{s} \right )\) is given by:

Then, the variational approximation \(q(\mathcal {F},\mathcal {U},{\mathscr{H}},\mathcal {V})\) used in (13) is introduced as follows:

A lower bound on the log evidence of the model is obtained using Jensen's inequality, which relates a concave function of an integral (the logarithm in this case) to the integral of the concave function (Jensen et al. 1906):
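For reference, Jensen's inequality for the logarithm can be stated generically as below. The symbols \(z\), \(q\), and \(g\) are generic placeholders (any probability density \(q\) and any positive function \(g\)), not the notation of the model:

```latex
\[
\log \int q(z)\, g(z)\, \mathrm{d}z \;\ge\; \int q(z)\, \log g(z)\, \mathrm{d}z .
\]
```

Taking \(g\) to be the ratio between the joint density of the observations and latent variables and the variational distribution gives a lower bound on the log evidence.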

Then, by replacing the variational distribution by its expression in (13) and canceling out equivalent terms in the numerator and denominator, the following expression is obtained (the dependence on \(\{X^{t}\}_{t=1}^{s}\) is omitted for notational simplicity):

Next, the \(\log \) expression is separated into a sum of four terms:

The first term does not depend on the variables \({\mathscr{H}}, \mathcal {U}\), and \(\mathcal {V}\); thus, it reduces to:

For the second term of the sum, the log expression does not depend on the variables \(\mathcal {F}, \mathcal {U}\), and \(\mathcal {V}\); thus, the second term reduces to:

For the third term, the log expression does not depend on the variables \(\mathcal {F}, {\mathscr{H}}\), and \(\mathcal {V}\); thus, the third term reduces to:

For the fourth term, the log expression does not depend on the variables \(\mathcal {F}, {\mathscr{H}}\), and \(\mathcal {U}\); thus, the fourth term reduces to:

Substituting these terms into (34), identifying the expectation and KL divergence terms, and then factorizing over the training dataset gives the final expression:

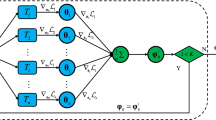
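To make the structure of such a bound concrete (an expected log-likelihood minus a KL divergence), the following minimal sketch evaluates it on a toy conjugate model that is not part of the paper: a scalar latent variable z ~ N(0, 1), a single observation y | z ~ N(z, 1), and a Gaussian variational distribution q(z) = N(m, s2). All function names are hypothetical. In this toy case the log evidence is available in closed form, so the gap between the two quantities can be checked directly.

```python
import math


def log_evidence(y):
    """Exact log p(y) for the toy model: marginally, y ~ N(0, 2)."""
    return -0.5 * math.log(2.0 * math.pi * 2.0) - y ** 2 / 4.0


def kl_gauss_to_std_normal(m, s2):
    """KL( N(m, s2) || N(0, 1) ) in closed form."""
    return 0.5 * (s2 + m ** 2 - 1.0 - math.log(s2))


def elbo(y, m, s2):
    """ELBO = E_q[log p(y | z)] - KL(q(z) || p(z)) with q(z) = N(m, s2)."""
    expected_loglik = -0.5 * math.log(2.0 * math.pi) - 0.5 * ((y - m) ** 2 + s2)
    return expected_loglik - kl_gauss_to_std_normal(m, s2)


y_obs = 1.2
# Gap for an arbitrary variational distribution (here the prior itself).
gap_arbitrary_q = log_evidence(y_obs) - elbo(y_obs, 0.0, 1.0)
# Gap when q is the exact posterior N(y / 2, 1 / 2) of the toy model.
gap_exact_posterior = log_evidence(y_obs) - elbo(y_obs, y_obs / 2.0, 0.5)
```

The gap is strictly positive for the arbitrary choice and vanishes when q equals the exact posterior, which is why maximizing the bound over the variational parameters is a sensible training objective.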

Appendix 2: Training of MF-DGP-EM

The training of MF-DGP-EM amounts to maximizing the ELBO. This maximization is performed with respect to the hyperparameters of the fidelity GPs \(\{\theta \}_{l=1}^{s}\), the hyperparameters of the input mapping multi-output GPs \(\{\theta _{\text {map}}\}_{l=1}^{s-1}\), the inducing inputs \(\{Z_{l}\}_{l=1}^{s}, \{W_{l}\}_{l=1}^{s-1}\), and the parameters of the variational distributions \(\{q(\mathbf {u}_{l})\}_{l=1}^{s},\{q(V_{l})\}_{l=1}^{s-1} \).

1.1 The variational variables

Including the variational distribution parameters makes the parameter space non-Euclidean; hence, the ordinary gradient is not a suitable direction to follow (Amari and Douglas 1998). The space of variational distribution parameters has a Riemannian structure defined by the Fisher information. In this case, the natural gradient, which is the ordinary gradient rescaled by the inverse Fisher information matrix, is the steepest descent direction. This approach is used for MF-DGP-EM. Specifically, the optimization procedure loops between an optimization step using a stochastic ordinary gradient (Adam optimizer (Kingma and Ba 2014)) with respect to the Euclidean-space parameters and an optimization step using the natural gradient with respect to all the parameters of the variational distributions.
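As an illustration of rescaling the gradient by the inverse Fisher information, here is a minimal sketch on a toy problem that is not the MF-DGP-EM model: a latent z ~ N(0, 1), one observation y | z ~ N(z, 1), and a variational distribution q(z) = N(m, s^2) parametrized by (m, s). For this parametrization the Fisher information matrix is diag(1/s^2, 2/s^2). The function names and the step size of 0.5 are assumptions chosen for the toy example only.

```python
import numpy as np


def elbo_gradient(y, m, s):
    """Ordinary gradient of the toy ELBO with respect to (m, s)."""
    return np.array([y - 2.0 * m, 1.0 / s - 2.0 * s])


def fisher_information(s):
    """Fisher information matrix of N(m, s^2) in the (m, s) parametrization."""
    return np.diag([1.0 / s ** 2, 2.0 / s ** 2])


def natural_gradient_step(y, m, s, step_size):
    """One ascent step along the gradient rescaled by the inverse Fisher matrix."""
    grad = elbo_gradient(y, m, s)
    nat_grad = np.linalg.solve(fisher_information(s), grad)
    return m + step_size * nat_grad[0], s + step_size * nat_grad[1]


y_obs = 1.2
m, s = 0.0, 1.0  # start from the prior
for _ in range(100):
    m, s = natural_gradient_step(y_obs, m, s, step_size=0.5)
```

In this toy case the iterates approach the exact posterior N(y/2, 1/2). In the procedure described above, a step of this kind on the variational parameters would alternate with an Adam step on the remaining parameters.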

1.2 The inducing inputs

One of the major difficulties in MF-DGP is the optimization of the inducing inputs \(\{Z_{l}\}_{l=1}^{s}\). In Cutajar et al. (2019), the inducing inputs were arbitrarily fixed and not optimized. Except for the first layer, the inducing inputs in MF-DGP do not play the same role as in classic DGPs, where they are defined in the original input space. Specifically, the input space of the inner layers of MF-DGP is augmented with the output of the previous layer, which induces a non-linear dependence between the first d components and the (d + 1)-th component of each element of this augmented input space. Because of this dependence, freely optimizing \(Z_{l}\) (with \(2 \le l \le s\)) as vectors with independent components is no longer suitable.

To overcome this issue in MF-DGP-EM, \(\{Z_{l}\}_{l=2}^{s}\) are constrained as follows:

where \(f^{*}_{l-1}(\cdot )\) corresponds to the posterior mean prediction at the previous layer and \(H^{*}_{l-1}(\cdot )\) to the mean mapped value into the lower fidelity. This constraint keeps a dependency between \(Z_{l,d+1}\) and \(Z_{l,1:d}\), which allows \(Z_{l,d+1}\) to be removed from the expression of the ELBO. Hence, the optimization is done with respect to \(Z_{l,1:d}\) instead of \(Z_{l}\).
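One way to read this constraint is that only the first d components of each inducing input are free, and the last component is recomputed from them by composing the two posterior means. The sketch below illustrates that reading with NumPy. The function `constrained_inducing_inputs` and the two toy mean functions are hypothetical stand-ins, since in the actual model these means come from the trained input mapping GP and the previous fidelity layer.

```python
import numpy as np


def constrained_inducing_inputs(z_free, mapping_mean, previous_layer_mean):
    """Append f*_{l-1}(H*_{l-1}(Z_{l,1:d})) as the (d+1)-th column of Z_l."""
    z_mapped = mapping_mean(z_free)           # inputs mapped into the lower fidelity
    z_last = previous_layer_mean(z_mapped)    # mean prediction of the previous layer
    return np.hstack([z_free, z_last])


# Hypothetical stand-ins for the two posterior means.
def toy_mapping_mean(x):
    # Drops the second variable: 2-D input -> 1-D lower-fidelity input.
    return x[:, :1]


def toy_previous_layer_mean(x):
    return np.sin(x)


# Only these d = 2 columns would be treated as optimization variables.
z_free = np.array([[0.0, 1.0], [0.5, 2.0], [1.0, 3.0]])
z_full = constrained_inducing_inputs(z_free, toy_mapping_mean, toy_previous_layer_mean)
```

With this construction, an optimizer would update `z_free` alone, and the augmented component would follow automatically.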

Appendix 3: Numerical setup

- All experiments were executed on Grid’5000 using a Tesla P100 GPU. The code is based on GPflow (Matthews et al. 2017), Doubly-Stochastic-DGP (Salimbeni and Deisenroth 2017), and emukit (Paleyes et al. 2019).
- For all GPs, Automatic Relevance Determination (ARD) Squared Exponential (SE) kernels are used, with the length scale and variance initialized to 1. The data are scaled so that the HF data have zero mean and unit variance.
- The Adam optimizer is set with \(\beta_{1} = 0.9\), \(\beta_{2} = 0.99\), and a step size \(\gamma_{\text{adam}} = 0.003\).
- The natural gradient step size is initialized for all layers at \(\gamma_{\text{nat}} = 0.01\).
- The number of training iterations for MF-DGP-EM is fixed to 28,000 (one iteration = one Adam step + one natural gradient step).
- The mean of the variational distribution of the inducing variables is initialized at \(y^{t}\) for the fidelity GP at layer t, and at \(X^{t+1}_{t}\) for the input mapping GP at layer t.
- The inducing inputs are initialized at \(X^{t}\) for the fidelity GP at layer t, and at \(X^{t+1}\) for the input mapping GP at layer t.
- A GitHub repository featuring MF-DGP-EM will be available after the publication of the paper.
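The reported settings can be gathered in a small configuration object, as in the sketch below. It is not taken from the authors' code: the class, its field names, and the scaling helper are hypothetical, and the helper assumes that the low-fidelity outputs are shifted and scaled with the same high-fidelity statistics, which the text does not state explicitly.

```python
from dataclasses import dataclass

import numpy as np


@dataclass(frozen=True)
class TrainingConfig:
    """Settings reported in the numerical setup (field names are hypothetical)."""
    adam_beta1: float = 0.9
    adam_beta2: float = 0.99
    adam_step_size: float = 0.003
    natural_gradient_step_size: float = 0.01
    n_iterations: int = 28_000
    kernel_lengthscale_init: float = 1.0
    kernel_variance_init: float = 1.0


def scale_with_hf_statistics(y_hf, y_lf):
    """Standardize outputs so the HF data have zero mean and unit variance.

    Assumption: the LF outputs reuse the HF mean and standard deviation.
    """
    mean, std = y_hf.mean(), y_hf.std()
    return (y_hf - mean) / std, (y_lf - mean) / std


config = TrainingConfig()
y_hf = np.array([1.0, 2.0, 4.0, 7.0])            # toy high-fidelity outputs
y_lf = np.array([0.5, 1.5, 2.5, 3.5, 5.0, 6.5])  # toy low-fidelity outputs
y_hf_scaled, y_lf_scaled = scale_with_hf_statistics(y_hf, y_lf)
```

Freezing the dataclass keeps the settings immutable during a run, which makes it harder to change a step size by accident between experiments.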

Appendix 4: Numerical results

Cite this article

Hebbal, A., Brevault, L., Balesdent, M. et al. Multi-fidelity modeling with different input domain definitions using deep Gaussian processes. Struct Multidisc Optim 63, 2267–2288 (2021). https://doi.org/10.1007/s00158-020-02802-1
