Abstract

In this work a second order approach for reliability-based design optimization (RBDO) with mixtures of uncorrelated non-Gaussian variables is derived by applying second order reliability methods (SORM) and sequential quadratic programming (SQP). The derivation is performed by introducing intermediate variables defined by the incremental iso-probabilistic transformation at the most probable point (MPP). By using these variables in the Taylor expansions of the constraints, a corresponding general first order reliability method (FORM) based quadratic programming (QP) problem is formulated and solved in the standard normal space. The MPP is found in the physical space in the metric of Hasofer-Lind by using a Newton algorithm, where the efficiency of the Newton method is obtained by introducing an inexact Jacobian and a line-search of Armijo type. The FORM-based SQP approach is then corrected by applying four SORM approaches: Breitung, Hohenbichler, Tvedt and a recently suggested formula. The proposed SORM-based SQP approach for RBDO is accurate, efficient and robust. This is demonstrated by solving several established benchmarks, with reliability targets that are considerably higher than what is commonly used, for mixtures of five different distributions (normal, lognormal, Gumbel, gamma and Weibull). Established benchmarks are also generalized in order to study problems with a large number of variables and several constraints. For instance, it is shown that the proposed approach efficiently solves a problem with 300 variables and 240 constraints in less than 20 CPU minutes on a laptop. Finally, a well-known deterministic benchmark of a welded beam is treated as an RBDO problem using the proposed SORM-based SQP approach.

1 Introduction

The first order reliability method by Hasofer and Lind (1974) is based on two key ingredients in the case of uncorrelated variables: the iso-probabilistic transformation (IsoT) and the most probable point. The invariance of the approach is obtained by the IsoT, such that the reliability index is established for normally distributed variables \(\boldsymbol {Y}\) with zero means and unit standard deviations. Let \({\Phi }(Y_{i})\) be the cumulative distribution function for the standard normal distribution and \(F(X_{i};\boldsymbol {\theta })\) be the cumulative distribution function for the original variable \(X_{i}\) with some distribution parameters collected in \(\boldsymbol {\theta }\). Then the IsoT is defined by

\[ {\Phi }(Y_{i}) = F(X_{i};\boldsymbol {\theta }). \]

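The Hasofer-Lind reliability index \(\beta _{\text {HL}}\) is then defined by the distance from the origin to the closest point, denoted the MPP, on the failure surface \(h(\boldsymbol {Y})=0\). This is established by solving

\[ \beta _{\text {HL}} = \min _{\boldsymbol {Y}} \sqrt {\boldsymbol {Y}^{T}\boldsymbol {Y}} \quad \text {s.t.} \quad h(\boldsymbol {Y})=0. \]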

In FORM the probability of failure \(P_{f}\) is obtained by evaluating the standard normal cumulative distribution function at the negative Hasofer-Lind reliability index:

\[ P_{f} \approx {\Phi }(-\beta _{\text {HL}}). \]

This is a direct result of including only the linear terms in the Taylor expansion of the failure constraint at the MPP.
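As a minimal sketch of the two ingredients above, the following Python snippet maps a lognormal variable to the standard normal space through the IsoT and evaluates the FORM estimate for a given reliability index. Only the standard library is used. The function names and the lognormal parameters `m` and `s` are illustrative assumptions and are not taken from the paper.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # zero mean, unit standard deviation


def lognormal_cdf(x, m, s):
    """CDF of a lognormal variable whose logarithm is N(m, s^2)."""
    return STD_NORMAL.cdf((math.log(x) - m) / s)


def iso_transform(cdf_value):
    """Solve Phi(y) = F(x) for y, given the value F(x) in (0, 1)."""
    return STD_NORMAL.inv_cdf(cdf_value)


def form_failure_probability(beta_hl):
    """FORM estimate of the failure probability, Phi(-beta_HL)."""
    return STD_NORMAL.cdf(-beta_hl)


# Illustrative values (assumptions, not from the paper)
m, s = 0.5, 0.2
x = 2.0
y = iso_transform(lognormal_cdf(x, m, s))  # equals (ln x - m) / s for a lognormal
p_f = form_failure_probability(3.0)        # FORM estimate for beta_HL = 3
```

For the lognormal distribution the IsoT has the closed form \((\ln x - m)/s\), which makes it a convenient check of the generic inverse-CDF route.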

Second order reliability methods are obtained by also including second order terms in the Taylor expansion of the failure surface. Based on these higher order terms the first order approximation of the reliability is corrected. The most established SORM correction is probably Breitung’s formula (Breitung 1984), which utilizes the curvature of the constraint function at the MPP. In this manner the relationship above is corrected according to

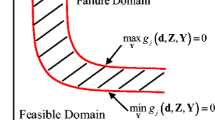

where \(\chi _{\text {SORM}}\) is a correction factor depending on the curvature of the corresponding hyperbolic approximation of the limit state function at the MPP. Other established SORM corrections are proposed by Hohenbichler et al. (1987) and Tvedt (1983). These corrections are also implemented and studied in the paper. A detailed presentation of different SORM approaches can be found in the excellent textbook by Lemaire (2009). Examples of other useful textbooks are Halder and Mahadevan (2000) and Choi et al. (2010). In addition, a recent second order formula presented by Mansour and Olsson (2014) is also implemented and investigated. Fig. 1 illustrates the concepts of MPP, FORM and SORM.

Fig. 1 Illustration of MPP, FORM and SORM. The established rotation of the normal space axes shown here is discussed in detail in Section 3

A review of different RBDO approaches can be found in Valdebenito and Schuëller (2010). Most RBDO approaches are based on FORM, and RBDO including SORM appears less frequently. However, RBDO using SORM was already discussed in the paper by Shetty et al. (1998), where fire safety of offshore structures was optimized. Another early paper on RBDO using SORM is by Choi et al. (2001). More recently, Lim et al. (2014) proposed a SORM-based RBDO approach using approximations of the Hessian. Yoo et al. (2014) performed sensitivity analysis of SORM at the MPP for RBDO. Gu et al. (2015) presented a practical approach for RBDO using SORM for vehicle occupant systems, which was further developed in Gu et al. (2016). RBDO using Breitung’s formula and non-Gaussian variables was demonstrated in Lim et al. (2016). Mansour and Olsson (2016) demonstrated the importance of using SORM-based methods for non-linear problems by solving a problem with a quadratic objective and quadratic constraints taken from Lee et al. (2015). The same conclusion is also drawn in this work by solving the same problem with our proposed SORM-based SQP method.

An early paper on RBDO using SQP is presented by Ahn and Kwon (2006). Karadeniz et al. (2009) optimized offshore towers using SQP and FORM. A filter-based SQP algorithm to solve RBDO problems with highly nonlinear constraints was proposed by Hsu and Chan (2010). Sampling-based RBDO of ship hull structures by SQP was performed by Choi et al. (2015). Recently, RBDO under mixed probability and interval uncertainties was done by SQP in Zhou et al. (2016).

The SORM-based SQP approach for RBDO presented in this paper is shown to be efficient for solving large problem sizes with mixtures of non-Gaussian variables and high targets of reliability. For instance, this is demonstrated for a benchmark studied by Cho and Lee (2011) with 10 variables and 8 constraints, where the overall CPU-time for the SORM-based SQP approach is less than 12 seconds. This should be compared to the CPU-time for the corresponding crude Monte Carlo simulations, which is 1767 seconds. Furthermore, this benchmark is also generalized and solved for 300 variables and 240 constraints. It is shown that our proposed approach treats this problem size efficiently for five different distributions (normal, lognormal, Gumbel, gamma and Weibull). The density functions, cumulative distribution functions and the gradients of the density functions are depicted in Fig. 2. Another well-known benchmark for RBDO with two variables and three constraints is expanded to 10 variables with mixtures of the five distributions and 15 constraints with high targets of reliability. This problem is also solved for 50 variables and 75 constraints.

Fig. 2 The density, cumulative and gradient of the density distribution functions for the normal, lognormal, Gumbel, gamma and Weibull distributions; see further details in Appendix A

The outline of the paper is as follows: in Section 2 the FORM-based SQP is derived by using the MPP and the IsoT; in Section 3 we introduce the four SORM-based corrections; in Section 4 the suggested second order approach for RBDO is evaluated for several benchmarks; and, finally, in Section 5 we present some concluding remarks.

2 FORM-based SQP

Most recently, in Strömberg (2016), a FORM-based sequential linear programming (SLP) approach for RBDO was obtained by introducing Taylor expansions of the limit state function at the MPP by using intermediate variables defined by the IsoT. In this work, those ideas are further developed for deriving a second order approach for RBDO by using SQP and SORM. First, in this section, a FORM-based SQP approach for RBDO is derived by adopting the IsoT and the MPP. At a current iterate, an approximate QP-problem of our RBDO problem is derived by performing Taylor expansions at the MPP and by using intermediate variables defined by the IsoT. In this manner, a QP-problem including a target reliability index is obtained in the standard normal space. The idea of intermediate variables is used frequently in optimization, see e.g. the early works by Fleury (1979) and Fleury and Braibant (1986). The reciprocal approach of Fleury and Braibant was e.g. utilized by Cho and Lee (2011) for solving RBDO problems. Secondly, in the next section, the FORM-based target reliability index is adjusted by four SORM formulas. In this manner a SORM-based SQP methodology for RBDO, as illustrated by the flowchart in Fig. 3, is obtained.

Let us consider an RBDO problem for one objective \(f=f(\boldsymbol {X})\) and a constraint \(g=g(\boldsymbol {X})\), where \(\boldsymbol {X}\) is considered to be a vector of \(N_{\text {VAR}}\) uncorrelated random variables with mean values \(\mu _{i}\) which are collected in \(\boldsymbol {\mu }\). The distribution of each variable is defined by a probability density function \(\rho _{i}=\rho _{i}(x;\boldsymbol {\theta }_{i})\), where \(\boldsymbol {\theta }_{i}=\boldsymbol {\theta }_{i}(\mu _{i})\) represents distribution parameters that depend on the mean value. The corresponding cumulative distribution function is defined by

\[ F_{i}(x;\boldsymbol {\theta }_{i}) = \int _{-\infty }^{x} \rho _{i}(t;\boldsymbol {\theta }_{i})\,\text {d}t. \tag{1} \]

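For simplicity and clarity we only consider one constraint. However, it is straightforward to extend the formulation such that several constraints are treated simultaneously. This is done in the numerical implementation. Our RBDO problem reads

\[ \left\{ \begin{array}{l} \min _{\boldsymbol {\mu }} \; f(\boldsymbol {\mu }) \\ \text {s.t.} \;\; \text {Pr}[g(\boldsymbol {X})\leq 0] \geq P_{s}, \end{array} \right. \tag{2} \]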

where \(\text {Pr}[\cdot ]\) is the probability of the constraint \(g\leq 0\) being true. \(P_{s}\) is the target of reliability that must be satisfied. Note that we formulate the problem by using \(f(\boldsymbol {\mu })\) as a representative of our objective and not the expected value of f, i.e. \(\mathrm {E}[f(\boldsymbol {X})]\) where \(\mathrm {E}[\cdot ]\) designates the expected value of the function f. In the FORM-based SLP approach presented in Strömberg (2016), we use \(\mathrm {E}[f(\boldsymbol {X})]\) as objective function. In that latter work, we also let f and g be given as surrogate models using a new approach for radial basis function networks recently suggested and evaluated in Amouzgar and Strömberg (2016). In this work, we focus on the derivation and performance of the proposed second order RBDO approach and therefore only consider explicit analytical expressions for f and g. Surrogate model based RBDO can e.g. be found in (Gomes et al. 2011; Duborg et al. 2011; Qu et al. 2003; Youn and Choi 2004a; Song and Lee 2011; Kang et al. 2010; Zhu and Du 1403; Ju and Lee 2008).
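The difference between \(f(\boldsymbol {\mu })\) and \(\mathrm {E}[f(\boldsymbol {X})]\) can be illustrated with a small numerical sketch. The quadratic objective, the means and the standard deviations below are illustrative assumptions and are not one of the benchmarks in this paper. For this particular objective the expected value equals \(f(\boldsymbol {\mu })\) plus the sum of the variances, which the crude Monte Carlo estimate reproduces.

```python
import random


def f(x1, x2):
    """Illustrative quadratic objective (an assumption, not a paper benchmark)."""
    return x1 ** 2 + x2 ** 2


def expected_value_mc(mu, sigma, n_samples=100_000, seed=0):
    """Crude Monte Carlo estimate of E[f(X)] for independent normal X_i."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        total += f(rng.gauss(mu[0], sigma[0]), rng.gauss(mu[1], sigma[1]))
    return total / n_samples


mu, sigma = (1.0, 2.0), (0.3, 0.3)
f_at_mean = f(*mu)                                           # objective evaluated at the means
expected_exact = f_at_mean + sigma[0] ** 2 + sigma[1] ** 2   # E[X^2] = mu^2 + sigma^2 per variable
expected_mc = expected_value_mc(mu, sigma)                   # sampled estimate of E[f(X)]
```

The gap between the two quantities grows with the variances and with the curvature of the objective, so the choice of representative matters most for strongly non-linear objectives.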

The QP-problem is solved in the standard normal space instead of the physical space. This is obtained by mapping the problem in (2) from the physical space to the normal space by using the iso-probabilistic transformation and the Taylor expansions. At an iterate k with mean values collected in \(\boldsymbol {\mu }^{k}\), the incremental iso-probabilistic transformation (see the first entry under Notes) reads

\[ Y_{i} = {\Phi }^{-1}\left (F_{i}(X_{i};\boldsymbol {\theta }_{i}({\mu _{i}^{k}}))\right ), \tag{3} \]

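where \({\Phi }(x)\) is the cumulative distribution function of the standard normal distribution with zero mean and a unit standard deviation. We also recognize, by taking the derivative, that (3) implies

\[ \phi (Y_{i})\,\text {d} Y_{i} = \rho _{i}(X_{i};\boldsymbol {\theta }_{i}({\mu _{i}^{k}}))\,\text {d} X_{i}. \tag{4} \]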

In this relationship, we have also introduced the probability density function \(\phi =\phi (x)\) of the standard normal distribution. Equation (3) is not defined numerically for \({\Phi }^{-1}(0)=-\infty \) and \({\Phi }^{-1}(1)=\infty \). In the numerical implementation, when this happens, we replace (3) by

\[ Y_{i} = \frac {X_{i}-{\mu _{i}^{k}}}{\sigma _{i}}, \tag{5} \]

where \(\sigma _{i}\) is the standard deviation. Equation (5) is of course the explicit expression of the IsoT when the random variables are Gaussian. Furthermore, by taking the mean of (4), we arrive at

The Taylor expansions of f and g, at an iterate k, are performed for increments \(\text {d} Y_{i}=Y_{i}-{Y_{i}^{k}}\) and \(\text {d} X_{i}=X_{i}-{X_{i}^{k}}\). By taking the means of these increments, we get

where \(\eta _{i}=\mathrm {E}[Y_{i}]\), \(\mathrm {E}[{Y_{i}^{k}}]=0\) by the definition of the iso-probabilistic transformation in (3), \(\mu _{i}=\mathrm {E}[X_{i}]\) and \({\mu _{i}^{k}}=\mathrm {E}[{X_{i}^{k}}]\). By inserting (7) in (6), one obtains that

is valid in a neighborhood of \({\mu _{i}^{k}}\). Equation (6) holds for infinitesimal increments, but (8) is written for finite increments and therefore becomes an approximation. Equation (8) is crucial for the mapping back from the normal space to the physical space at the end of the sequential step since (3) is no longer valid at this stage, see (20) and Fig. 4. Furthermore, (8) is also used to transform the objective function to the standard normal space, see (9) and (11). In (8), the notation \(\boldsymbol {\theta }_{i}^{k}=\boldsymbol {\theta }_{i}({\mu _{i}^{k}})\) was also introduced, which will be used in the following.
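The incremental IsoT in (3) and its numerical safeguard in (5) can be sketched as follows for a Gumbel variable. This is a minimal illustration under stated assumptions: the maximum-type Gumbel distribution parameterized by its mean and standard deviation, the helper names, and the underflow guard are choices made here and are not taken from the paper's implementation.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()
EULER_GAMMA = 0.5772156649015329


def gumbel_parameters(mean, std):
    """Location a and scale b of a maximum-type Gumbel variable with given mean and std."""
    b = std * math.sqrt(6.0) / math.pi
    a = mean - EULER_GAMMA * b
    return a, b


def gumbel_cdf(x, a, b):
    """F(x) = exp(-exp(-(x - a) / b)), guarded against overflow in the far left tail."""
    z = (x - a) / b
    if z < -700.0:
        return 0.0
    return math.exp(-math.exp(-z))


def incremental_isot(x, mean_k, std):
    """Y = Phi^{-1}(F(x; theta(mean_k))), falling back to (x - mean_k) / std
    when F(x) rounds to 0 or 1 and the inverse normal CDF is undefined."""
    a, b = gumbel_parameters(mean_k, std)
    p = gumbel_cdf(x, a, b)
    if 0.0 < p < 1.0:
        return STD_NORMAL.inv_cdf(p)
    return (x - mean_k) / std


a, b = gumbel_parameters(1.0, 0.3)
median = a - b * math.log(math.log(2.0))            # F(median) = 0.5, so Y should be 0
y_median = incremental_isot(median, 1.0, 0.3)
y_far_right = incremental_isot(1.0 + 50 * 0.3, 1.0, 0.3)  # F rounds to 1, fallback is used
y_far_left = incremental_isot(1.0 - 10 * 0.3, 1.0, 0.3)   # F underflows to 0, fallback is used
```

The fallback only triggers when the cumulative value is indistinguishable from 0 or 1 in floating point, so it does not alter the transformation in the region where (3) can be evaluated.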

A second-order Taylor expansion of f is performed at \({\mu _{i}^{k}}\), when f is considered to be a function of \(\mu _{i}\) as in (2). This can be written as

where

By utilizing (8), (9) can also be written as a function of \({\boldsymbol {\eta }}\), i.e.

where

The constraint is expanded at the most probable point \(\boldsymbol {X}^{k}=\boldsymbol {X}^{\text {MPP}}\) by using a first order Taylor expansion in the intermediate variables defined by the IsoT in (3). The MPP is obtained by solving

in the physical space, where \(\boldsymbol {Y}\) contains \(Y_{i}=Y_{i}(X_{i})\) defined by the iso-probabilistic transformation in (3). This means, of course, finding the closest point to the limit state function in the metric of Hasofer-Lind. The main motivation for finding the MPP in the physical space is that the IsoT then only appears in the objective function. This has been found to be most beneficial for problems with many constraints and non-Gaussian variables.

Numerically, the problem in (13) is solved by applying Newton’s method to the necessary optimality conditions, which are

By neglecting the gradients of the probability density functions, the following inexact Jacobian is used in the Newton algorithm:

The use of this Jacobian has proven to be superior to the exact one in our implementation of the algorithm. By adding

to \({\boldsymbol {J}}(i,i)\) for \(i=1,\ldots ,N_{\text {VAR}}\), the exact Jacobian is recovered. Note that the derivatives of the density functions are also needed in order to establish this term, see Appendix A. In addition, we are using a line-search of Armijo type, which we have adopted several times for solving non-smooth and non-linear problems in mechanics. For instance, in Strömberg (2011), it was utilized to simulate frictional heat bands in a brake disc. In Klarbring and Strömberg (2013), the state problem of hyperelastic bodies was established with this approach in order to perform topology optimization of non-linear elastic structures.
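The structure of the MPP search, a Newton iteration on the necessary optimality conditions combined with an Armijo-type backtracking line-search, can be sketched on a small example. The sketch below is a simplification and not the algorithm of this paper: it works directly in the standard normal space with Gaussian variables and the exact Jacobian, so the density-gradient term that is neglected in the inexact Jacobian does not appear. The limit state function, the starting point and all names are illustrative assumptions.

```python
import math


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def h(y):
    """Illustrative limit state in the standard normal space (an assumption)."""
    return 3.0 - y[0] + 0.1 * y[1] ** 2


def grad_h(y):
    return [-1.0, 0.2 * y[1]]


HESS_H = [[0.0, 0.0], [0.0, 0.2]]  # constant Hessian of h


def residual(v):
    """KKT residual of min 0.5*||y||^2 s.t. h(y) = 0, with v = (y1, y2, lam)."""
    y, lam = v[:2], v[2]
    g = grad_h(y)
    return [y[0] + lam * g[0], y[1] + lam * g[1], h(y)]


def jacobian(v):
    y, lam = v[:2], v[2]
    g = grad_h(y)
    return [[1.0 + lam * HESS_H[0][0], lam * HESS_H[0][1], g[0]],
            [lam * HESS_H[1][0], 1.0 + lam * HESS_H[1][1], g[1]],
            [g[0], g[1], 0.0]]


def merit(v):
    return 0.5 * sum(r * r for r in residual(v))


def find_mpp(v0, tol=1e-10, max_iter=50, c1=1e-4):
    v = list(v0)
    for _ in range(max_iter):
        r = residual(v)
        if math.sqrt(sum(ri * ri for ri in r)) < tol:
            break
        d = solve_linear(jacobian(v), [-ri for ri in r])  # Newton direction
        t, m0 = 1.0, merit(v)
        # Armijo backtracking on the merit function 0.5*||residual||^2
        while t > 1e-8:
            trial = [vi + t * di for vi, di in zip(v, d)]
            if merit(trial) <= (1.0 - 2.0 * c1 * t) * m0:
                break
            t *= 0.5
        v = [vi + t * di for vi, di in zip(v, d)]
    return v


y1, y2, lam = find_mpp([1.0, 1.0, 0.0])
beta_hl = math.hypot(y1, y2)  # distance from the origin to the MPP
```

For this limit state the MPP is known analytically to be \((3, 0)\), which gives a simple check of the iteration. The additional function evaluations spent in the line-search buy robustness of the Newton steps.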

The linear approximation of g obtained by the Taylor expansion in the intermediate variables reads

where \(y_{i}^{\text {MPP}}=Y_{i}(x_{i}^{\text {MPP}})\) is the optimal solution to (13). Notice that \(g({\boldsymbol {x}}^{\text {MPP}})=0\) was utilized in the expansion.

Thus, we let \(\tilde {g}=\tilde {g}(\boldsymbol {Y})\) represent the hyperplane in (16). By inserting \(\tilde {g}\) into the reliability constraint in (2), the probability operator \(\text {Pr}[\cdot ]\) is straightforward to evaluate (see the second entry under Notes). We have that

where the mean value \(\mu _{\tilde {g}}\) and the standard deviation \(\sigma _{\tilde {g}}\) of \(\tilde {g}\), respectively, can be expressed as

Here, \({\tau _{i}^{2}}=\text {VAR}[Y_{i}]\) is the variance of \(Y_{i}\). In the following it is assumed that the move limits represented by \(\epsilon \), see (19), are set such that \(\eta _{i}\) will be close to zero, implying that \(\tau _{i} \approx 1\) can be considered to be a proper approximation. As discussed previously in this section, one might think that \(\text {VAR}[Y_{i}]\) always equals one, but this is not the case since the mean \(\mathrm {E}[Y_{i}]\) typically goes from zero to a non-zero value during the solution of (19). Thus, during an increment the mean is changed and the iso-probabilistic transformation at iteration k is no longer valid at iteration \(k+1\). This is also explained by Fig. 4.
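Because a linear function of independent normal variables is itself normal, the probability of a hyperplane constraint follows directly from its mean and standard deviation. The snippet below sketches this evaluation for a generic hyperplane \(c+\sum _{i} a_{i}Y_{i}\). The coefficient names `c` and `a` and the numerical values are illustrative assumptions and do not reproduce the coefficients of (16).

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def linear_constraint_probability(c, a, eta, tau):
    """Pr[c + sum_i a_i * Y_i <= 0] for independent Y_i ~ N(eta_i, tau_i^2)."""
    mean_g = c + sum(ai * ei for ai, ei in zip(a, eta))
    std_g = math.sqrt(sum((ai * ti) ** 2 for ai, ti in zip(a, tau)))
    return STD_NORMAL.cdf(-mean_g / std_g)


# Illustrative hyperplane: mean -15 and standard deviation 5, i.e. Phi(3)
prob = linear_constraint_probability(-15.0, [3.0, 4.0], [0.0, 0.0], [1.0, 1.0])
```

With \(\tau _{i}\approx 1\) the standard deviation reduces to the Euclidean norm of the coefficient vector, which is what makes the resulting reliability constraint deterministic in the means \(\eta _{i}\).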

In conclusion, by inserting (11) and (17) into (2), the deterministic QP-problem to be solved in the standard normal space reads

Here, the target reliability index \(\beta _{t}={\Phi }^{-1}\left (P_{s}\right )\) has also been introduced. In the next section, it is discussed how this reliability index is corrected by using SORM.
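As a small worked example of \(\beta _{t}={\Phi }^{-1}(P_{s})\), the following sketch evaluates the target reliability index for the range of targets considered later in the numerical examples. The function name is an illustrative assumption.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def target_reliability_index(p_s):
    """beta_t = Phi^{-1}(P_s) for a target reliability P_s in (0, 1)."""
    return STD_NORMAL.inv_cdf(p_s)


targets = [0.99, 0.999, 0.9999, 0.99999]
indices = [target_reliability_index(p) for p in targets]
# approximately 2.33, 3.09, 3.72 and 4.26

# Targets stated as Phi(3) and Phi(4) correspond to beta_t = 3 and beta_t = 4
beta_from_phi3 = target_reliability_index(STD_NORMAL.cdf(3.0))
```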

The optimal solution to (19) is obtained by using the optimization toolbox of Matlab (quadprog.m) and it is denoted \(\eta _{i}^{*}\). The corresponding mean value \(\boldsymbol {\mu }^{k+1}\) is then updated by using (8). By using this relationship, we map back \(\eta _{i}^{*}\) from the standard normal space to the means in the physical space according to

Then, a new QP-problem is generated around \(\boldsymbol {\mu }^{k+1}\) following the procedure presented above. This procedure, see Fig. 4, continues in a sequence until convergence is reached, see also the flowchart presented in Fig. 3.

3 SORM-based corrections

At the origin of the standard normal space, (19) is equivalent to

where

is the Hasofer-Lind reliability index. The perhaps confusing minus sign here is of course a consequence of the definition of g in (2). This is explained in detail in Appendix B. When \(\beta _{\text {HL}}=\beta _{t}\), the probability of failure \(P_{f}\) is estimated using FORM as

\[ P_{f} = {\Phi }(-\beta _{t}). \tag{23} \]

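Furthermore, by using SORM, this probability of failure \(P_{f}\) is adjusted to

\[ P_{f} = {\Phi }(-\beta _{t})\,\chi _{\text {SORM}}, \tag{24} \]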

where \(\chi _{\text {SORM}}\) is a correction factor established by any SORM approach. Four such approaches are presented in detail below. Equation (24) now suggests how to correct our target of reliability \(\beta _{t}\) in the following manner:

This is our SORM-based target reliability index to be used in (19) instead of \(\beta _{t}\). In such manner, we modify our FORM-based SQP to a SORM-based SQP approach.
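One way to read the correction is to require that the SORM-adjusted failure probability, i.e. the FORM estimate multiplied by \(\chi _{\text {SORM}}\), equals the admissible failure probability \(1-P_{s}\), and then solve for the reliability index. The sketch below implements this reading. It is an assumption about the form of the corrected index and not a transcription of (25), and the function name and numerical values are illustrative.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def sorm_target_index(p_s, chi_sorm):
    """Index beta solving Phi(-beta) * chi_sorm = 1 - P_s (assumed reading of the correction)."""
    return -STD_NORMAL.inv_cdf((1.0 - p_s) / chi_sorm)


p_s = 0.999
beta_form = sorm_target_index(p_s, 1.0)   # chi = 1 recovers the FORM target
beta_sorm = sorm_target_index(p_s, 0.8)   # chi < 1 relaxes the FORM target
recovered = STD_NORMAL.cdf(-beta_sorm) * 0.8  # should reproduce 1 - P_s
```

A correction factor below one means that FORM overestimates the failure probability, so a smaller index in the QP-problem is sufficient to meet the same target.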

Before applying SORM, let us transform our variables \(\boldsymbol {Y}\) with base vectors \({\boldsymbol {e}}_{i}\) to a new orthonormal coordinate system where the first base vector \(\hat {\boldsymbol {e}}_{1}\) is defined by the MPP using

see also Fig. 1. The other base vectors \(\hat {\boldsymbol {e}}_{i}\) are then generated by the Gram-Schmidt procedure applied to the following set of vectors \(\{\hat {\boldsymbol {e}}_{1}, {\boldsymbol {e}}_{2},\ldots ,{\boldsymbol {e}}_{N_{\text {VAR}}}\}\). The columns of the corresponding rotation matrix \({\boldsymbol {R}}\) are then defined by \(\hat {\boldsymbol {e}}_{i}\) in the following manner:

and we have that our new variables are given by

Now, let us perform a second order Taylor expansion of \(h=h(\boldsymbol {Y})=-g(\boldsymbol {X}(\boldsymbol {Y}))\) in \({\boldsymbol {Z}}\) at the MPP. This yields

Since \({\boldsymbol {R}}^{T}\nabla h\) and \({\boldsymbol {Z}}^{\text {MPP}}\) are collinear and opposite (this follows from the necessary conditions to (13)) and

we can write (29) as

Furthermore, if we normalize this limit function with the scalar \(||{\boldsymbol {R}}^{T} \nabla h||\), then we obtain

where

In the derivations above, we also utilize

when \(\nabla ^{2} h\) is calculated. Gradients of the five distributions considered in this paper are presented in Appendix A.
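The rotation to the MPP-aligned coordinate system introduced at the beginning of this section can be sketched with a plain Gram-Schmidt procedure. The sketch assumes that the first base vector is the normalized MPP vector (the sign convention of the paper may differ) and that the first component of the MPP is non-zero, so that the set \(\{\hat {\boldsymbol {e}}_{1}, {\boldsymbol {e}}_{2},\ldots \}\) is linearly independent. Function names and the example point are illustrative.

```python
import math


def rotation_from_mpp(y_mpp):
    """Return R whose columns are an orthonormal basis with first column y_mpp / ||y_mpp||."""
    n = len(y_mpp)
    norm = math.sqrt(sum(v * v for v in y_mpp))
    candidates = [[v / norm for v in y_mpp]]
    for j in range(1, n):                      # append e_2, ..., e_n
        e = [0.0] * n
        e[j] = 1.0
        candidates.append(e)
    basis = []
    for v in candidates:
        w = list(v)
        for b in basis:                        # remove components along earlier vectors
            proj = sum(wi * bi for wi, bi in zip(w, b))
            w = [wi - proj * bi for wi, bi in zip(w, b)]
        length = math.sqrt(sum(wi * wi for wi in w))
        if length < 1e-12:
            raise ValueError("candidate vectors are linearly dependent")
        basis.append([wi / length for wi in w])
    return [[basis[j][i] for j in range(n)] for i in range(n)]


def rotate(R, y):
    """New coordinates z = R^T y."""
    n = len(y)
    return [sum(R[i][j] * y[i] for i in range(n)) for j in range(n)]


y_mpp = [2.0, 1.0, 2.0]          # illustrative MPP with ||y_mpp|| = 3
R = rotation_from_mpp(y_mpp)
z_mpp = rotate(R, y_mpp)         # expected to be (3, 0, 0) up to round-off
```

In the rotated system the MPP lies on the first axis at distance \(\beta _{\text {HL}}\) from the origin, which is what allows the curvature information to be isolated in the remaining \(N_{\text {VAR}}-1\) directions.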

A parabolic approximation of (32) reads

where \(\lambda _{i}\) are the principal curvatures of \({\boldsymbol {A}}\)(1:\(N_{\text {VAR}}-1\),1:\(N_{\text {VAR}}-1)\) and \(\zeta _{i}\) are some variables given in a coordinate system defined by the corresponding eigenvectors. The parabolic approximation in (35) is illustrated in Fig. 5.

By starting from the representation of the limit function in (35), one can derive Breitung’s formula (Breitung 1984), which suggests that

for large values of \(\beta _{\text {HL}}\). The latter product is called Breitung’s correction and denoted here by \(\chi _{\text {SORM}}=\chi _{\text {SORM}}(\beta _{\text {HL}},{\boldsymbol {\lambda }})\), where \({\boldsymbol {\lambda }}\) contains the principal curvatures \(\lambda _{i}\).

Instead of using (36), one can apply the correction factor suggested by Hohenbichler et al. (1987), which reads

This is suggested to be an improvement of Breitung’s correction.
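The two correction factors discussed so far can be sketched in a few lines. The sketch uses the commonly quoted textbook forms, Breitung's product \(\prod _{i}(1+\beta \kappa _{i})^{-1/2}\) and the Hohenbichler variant in which \(\beta \) is replaced by \(\phi (\beta )/{\Phi }(-\beta )\). The sign convention of the curvatures \(\kappa _{i}\) is an assumption here and may differ from the one used for \(\lambda _{i}\) in (35) to (37). Function names and values are illustrative.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def breitung_factor(beta, curvatures):
    """Product of (1 + beta*kappa_i)^(-1/2); requires 1 + beta*kappa_i > 0."""
    return math.prod(1.0 / math.sqrt(1.0 + beta * k) for k in curvatures)


def hohenbichler_factor(beta, curvatures):
    """Same product with beta replaced by psi = phi(beta) / Phi(-beta)."""
    psi = STD_NORMAL.pdf(beta) / STD_NORMAL.cdf(-beta)
    return math.prod(1.0 / math.sqrt(1.0 + psi * k) for k in curvatures)


beta = 3.0
kappa = [0.1]                                   # illustrative principal curvature
chi_b = breitung_factor(beta, kappa)
chi_h = hohenbichler_factor(beta, kappa)
p_f_sorm = STD_NORMAL.cdf(-beta) * chi_b        # SORM-adjusted failure probability
```

Both factors reduce to one for a flat limit surface, and they approach each other for large reliability indices since \(\phi (\beta )/{\Phi }(-\beta )\) tends to \(\beta \).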

Another improvement of Breitung’s formula is suggested by Tvedt (1983):

When using this formula, our correction factor becomes

In this work, we also consider a less known SORM approach suggested recently by Mansour and Olsson (2014). They propose

where

are three of the probabilists’ Hermite polynomials. Furthermore,

Here, \(\beta _{\text {C}}\), \(\gamma _{1}\) and \(\gamma _{2}\) represent the Cornell reliability index, the skewness and kurtosis, respectively, of the limit state function in (35). The corresponding correction factor \(\chi _{\text {SORM}}\) is again established by (39).
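The probabilists' Hermite polynomials mentioned above can be generated for any order with the standard three-term recurrence \(He_{n+1}(x)=x\,He_{n}(x)-n\,He_{n-1}(x)\). The sketch below is generic and makes no assumption about which three orders enter the formula of Mansour and Olsson (2014). The function name is illustrative.

```python
def hermite_he(n, x):
    """Probabilists' Hermite polynomial He_n(x) via He_{k+1} = x*He_k - k*He_{k-1}."""
    if n == 0:
        return 1.0
    prev, curr = 1.0, x          # He_0 and He_1
    for k in range(1, n):
        prev, curr = curr, x * curr - k * prev
    return curr


# He_2(x) = x^2 - 1, He_3(x) = x^3 - 3x, He_5(x) = x^5 - 10x^3 + 15x
values_at_two = [hermite_he(n, 2.0) for n in (2, 3, 4, 5)]
```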

4 Numerical examples

The performance of the proposed SORM-based SQP methodology for RBDO is demonstrated in this section for three established benchmarks. However, the first two benchmarks are generalized to a considerably higher number of variables and constraints than in the original formulations, and the final one is typically used to test deterministic design optimization algorithms but is formulated in this work as an RBDO problem. The density functions and the corresponding gradients of the five distributions (normal, lognormal, Gumbel, gamma and Weibull) are presented in Appendix A, see also Fig. 2. The distributions are generated by using the statistics and machine learning toolbox of Matlab and the QP-problem is solved using quadprog.m of Matlab. In all examples, the starting point is taken to be the deterministic optimal solution obtained by a genetic algorithm (GA) and SLP, and the sample size is set to 1E6 using quasi-Monte Carlo simulations with our in-house code with Halton and Hammersley samplings, see Appendix C. All SORM approaches have been tested and, so far, their performances have been very similar. The solutions are obtained on a laptop workstation with Intel i7 2.80 GHz and 16 GB RAM.
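The idea behind the quasi-Monte Carlo verification can be sketched with a two-dimensional Halton sequence. This is not the in-house code referred to above: the limit function, the sample size and the function names are illustrative assumptions, and the example is chosen so that the exact probability is known.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def halton(index, base):
    """Radical inverse of a positive integer index in the given base."""
    result, fraction = 0.0, 1.0 / base
    while index > 0:
        result += (index % base) * fraction
        index //= base
        fraction /= base
    return result


def qmc_probability(limit, n_samples=20_000):
    """Estimate Pr[Y1 + Y2 <= limit] for independent standard normal Y1, Y2."""
    hits = 0
    for i in range(1, n_samples + 1):   # start at 1 so no coordinate is exactly 0
        y1 = STD_NORMAL.inv_cdf(halton(i, 2))
        y2 = STD_NORMAL.inv_cdf(halton(i, 3))
        if y1 + y2 <= limit:
            hits += 1
    return hits / n_samples


estimate = qmc_probability(3.0)
exact = STD_NORMAL.cdf(3.0 / 2.0 ** 0.5)   # Y1 + Y2 is normal with variance 2
```

Mapping the low-discrepancy points through the inverse cumulative distribution functions gives samples of the random variables, so the same construction carries over to non-Gaussian variables by swapping the inverse CDF.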

The first example in (43) is a numerical benchmark that was considered by Cho and Lee (2011), which is an RBDO formulation of the Hock and Schittkowski problem no. 113. The RBDO problem in Cho and Lee (2011) consists of 10 normally distributed variables with \(\text {VAR}[X_{i}]=0.02^{2}\) and 8 constraints. This problem is recovered by setting \(N=0\) in (43). The solution obtained by the SORM-based SQP approach is presented in Table 1. The solution is better than the one presented in Cho and Lee (2011), in both the functional value \(f(\boldsymbol {\mu })\) and the mean \(\mathrm {E}[f]\). In addition, the reliability for each constraint is satisfied most accurately.

We also consider the problem in (43) for non-Gaussian distributions. The results are reported in Table 2. The solutions for lognormal and gamma distributions are very similar to the one obtained for the normal distribution, but for the Gumbel and Weibull distributions both solutions differ slightly. One also observes that the corresponding probabilities of safety for these two solutions are a bit more conservative. This is probably a topic for small improvements in the future. In addition, we solve the problem for 50, 100, 200 and 300 normally distributed variables with 40, 80, 160 and 240 constraints, respectively, in order to demonstrate the numerical performance. The trend of the CPU-time and the objective value for this study is shown in Fig. 6. From the graphs one can see that the problem with 300 variables is solved in less than 20 CPU minutes on a laptop with the same accuracy as for 10 variables.

The second problem in (44) is a generalization of a well-established benchmark for RBDO, see e.g. (Chan et al. 2007; Youn and Choi 2004b) and also Figs. 7 and 8. The original problem has two variables and three constraints; it is recovered by setting \(N=0\) in (44). In addition, we also square the objective function in order to evaluate the SQP approach. The generalization reads

where \(\text {VAR}[X_{i}]=0.3^{2}\).

Fig. 8 Problem (44) solved for different distributions and targets of reliability. Column 1: normal, column 2: lognormal, column 3: Gumbel, column 4: gamma and column 5: Weibull. Row 1: \(P_{s}=0.99\), …, row 4: \(P_{s}=0.99999\)

We begin by solving the original problem of (44), i.e. when \(N=0\), for our five different distributions. The results from this study are presented in the first rows of Table 3. We also report the number of function calls for these problems on the fourth row together with the corresponding CPU time. The slightly longer CPU time for the gamma variables compared to the times obtained for normal and lognormal variables is a consequence of a slower algorithm for the gamma distribution. However, in our opinion, the number of function calls does not reveal the performance of an algorithm. For instance, by increasing the number of function calls when using the Armijo line-search procedure, the performance of the Newton algorithm is improved significantly and the bottleneck of solving linear equation systems for finding search directions is reduced. Thus, by increasing the number of function calls, the CPU time decreases. We believe that the problem size and corresponding CPU times, as presented in Fig. 6, are more relevant information for evaluating the numerical performance of an algorithm.

Next, we let \(N=4\), i.e. 10 variables and 15 constraints, where the first pair of variables is normal distributed, the second pair is lognormal, the third is Gumbel, the fourth is gamma and the fifth pair is Weibull distributed. The solution to this problem is presented on the second rows of Table 3. Notice that the solution obtained for 10 variables with mixtures of the five distributions is identical to the sub-solutions obtained for two variables. Furthermore, the targets of reliability are also satisfied most accurately. We also solve this problem with 10 variables when the target of reliability is changed from \({\Phi }(3)\) to \({\Phi }(4)\). The solution is given on the third rows of Table 3. For this particular problem we let the sampling size of the quasi-Monte Carlo simulation be 1E7. The solutions for Weibull distributions are plotted in Fig. 7 for both \({\Phi }(3)\) and \({\Phi }(4)\). In Fig. 8, we have solved (44) when \(N=0\) for each distribution with targets of reliability ranging from 0.99 to 0.99999.

We also solve (44) when \(N=24\), implying 50 variables and 75 constraints. The first 10 variables are normal, the next ten are lognormal and so forth. The solution is identical to the ones obtained for two and ten variables. The CPU-time is less than ten minutes, and the corresponding SQP convergence histories for the FORM and SORM steps are plotted in Fig. 9.

Finally, we consider the welded beam problem depicted in Fig. 11. This is a well-known mechanical design problem that has served as a benchmark several times in deterministic optimization, see e.g. Garg (2014). We now treat this design problem as an RBDO problem as given in (45). The corresponding deterministic problem is given in Appendix D. We let \(0.1\leq X_{1},X_{4} \leq 2\) and \(0.1\leq X_{2}, X_{3} \leq 10\) be lognormal (see the third entry under Notes) with \(\text {VAR}[X_{1}]=\text {VAR}[X_{4}]=0.1^{2}\) and \(\text {VAR}[X_{2}]=\text {VAR}[X_{3}]=0.3^{2}\). The optimal objective value for the deterministic problem is 2.381 at \(\boldsymbol {X}^{*}=(0.24437,6.2175,8.2915,0.24437)\) and is obtained when constraints 1-3 and 6 are active. However, for our RBDO formulation of the welded beam problem, the expected mean of the optimal objective is 9.9442 at \(\boldsymbol {\mu }^{*}=(0.43379,6.9512,9.0252,0.92561)\) when constraints 1 and 3 are active. The probability of safety of these constraints is 0.9990. The corresponding histograms are plotted in Fig. 10. Problem (45) might serve as a challenging RBDO benchmark with highly non-linear objective and constraints.
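For lognormal design variables, the distribution parameters follow from the mean and the variance through the standard moment relations, which is one concrete instance of parameters \(\boldsymbol {\theta }_{i}(\mu _{i})\) that depend on the mean value. The sketch below uses the first optimal mean reported above together with the stated standard deviation. That the implementation of this paper parameterizes the lognormal distribution in exactly this way is an assumption, and the function name is illustrative.

```python
import math


def lognormal_parameters(mean, std):
    """Parameters (mu_ln, sigma_ln) of ln X for a lognormal X with given mean and std."""
    sigma_ln_sq = math.log(1.0 + (std / mean) ** 2)
    mu_ln = math.log(mean) - 0.5 * sigma_ln_sq
    return mu_ln, math.sqrt(sigma_ln_sq)


mu_ln, sigma_ln = lognormal_parameters(0.43379, 0.1)

# Round trip: recover the mean and variance from the parameters
mean_back = math.exp(mu_ln + 0.5 * sigma_ln ** 2)
var_back = (math.exp(sigma_ln ** 2) - 1.0) * math.exp(2.0 * mu_ln + sigma_ln ** 2)
```

When the mean is updated in a sequential step while the variance is kept fixed, both parameters change, so the IsoT has to be re-established at every iterate.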

5 Concluding remarks

In this work SORM-based RBDO is performed by SQP for large problem sizes with mixtures of non-Gaussian distributions and high targets of reliability. This is done by deriving a FORM-based QP-problem in (19), where the target of reliability index is corrected using (25) with four different SORM approaches. In this work, all SORM corrections have shown very similar performance.

The derivation of (19) utilizes the fact that the IsoT in (3) is incremental in a sequential approach; therefore (3) is termed incremental IsoT in this work. A proper treatment of this fact is crucial for the performance of the method and this is presented in detail in the paper. The derivation is performed by using (8) and intermediate variables, both defined by the incremental IsoT, such that (19) is formulated in the standard normal space, which implies that the setting of the move limits is straightforward. Another important feature of the methodology is the treatment of the MPP, which is solved in the physical space instead of the normal space. In this manner, we only have to deal with the IsoT in the objective function instead of the limit functions. This is crucial for the numerical performance when dealing with many constraints and non-Gaussian variables. In addition, we have also found that the introduction of the inexact Jacobian in (15) improves the performance significantly for non-Gaussian variables.

The proposed methodology is studied numerically for several problems with non-Gaussian variables. In particular, two established RBDO benchmarks are generalized to a large number of variables and many constraints of reliability. These two generalizations, (43) and (44), might serve as benchmarks for testing new RBDO algorithms for large problem sizes with mixtures of non-Gaussian variables. The numerical performance of the implementation of the proposed second order RBDO approach is excellent, showing high accuracy and robustness at low CPU times when solving (43) for 300 variables and 240 constraints, and (44) for 50 variables and 75 constraints with mixtures of non-Gaussian variables and high targets of reliability.

Notes

Notice that in the next iteration \(k+1\) we have a new IsoT defined by \(Y_{i} = {\Phi }^{-1}\left (F_{i}(X_{i};{\boldsymbol {\theta }_{i}}(\mu _{i}^{k+1}))\right )\), implying that \(\mathrm {E}[Y_{i}]\approx 0\) and \(\text {VAR}[Y_{i}]\approx 1\) during the sequential step starting at exact zero and one, respectively, see also Fig. 4.

\(\tilde {g}\) is of course normal distributed.

This choice is crucial since \(X_{i}\) cannot be negative.

References

Ahn J, Kwon JH (2006) An Efficient Strategy for Reliability-based Multidisciplinary Design Optimization using BLISS. Struct Multidiscip Optim 31:363–372

Amouzgar K, Strömberg N (2016) Radial Basis Functions as Surrogate Models with a Priori Bias in Comparison with a Posteriori Bias, Structural and Multidisciplinary Optimization on-line

Breitung K (1984) Asymptotic approximations for multinormal integrals. J Eng Mech 110:357–366

Chan KY, Skerlos SJ, Papalambros P (2007) An Adaptive Sequential Linear Programming Algorithm for Optimal Design Problems with Probabilistic Constraints. J Mech Des 129:140–149

Cho TM, Lee BC (2011) Reliability-based Design Optimization using Convex Linearization and Sequential Optimization and Reliability Assessment Method. Struct Saf 33:42–50

Choi KK, Tu J, Park YH (2001) Extensions of Design Potential Concept for Reliability-based Design Optimization to Nonsmooth and Extreme Cases. Struct Multidiscip Optim 22:335–350

Choi M-J, Cho H, Choi KK, Cho S (2015) Sampling-based RBDO of Ship Hull Structures considering Thermo-elastic-plastic Residual Deformation. Mech Based Des Struct Mach 43:183–208

Choi S-K, Grandhi RV, Canfield R (2010) Reliability-based Structural Design. Springer London Ltd.

Dubourg V, Sudret B, Bourinet J-M (2011) Reliability-based Design Optimization using Kriging Surrogates and Subset Simulation, vol 44

Fleury C (1979) Structural weight optimization by dual methods of convex programming. Int J Numer Methods Eng 14:1761–1783

Fleury C, Braibant V (1986) Structural Optimization: A New Dual Method using Mixed Variables. Int J Numer Methods Eng 23:409–428

Garg H (2014) Solving Structural Engineering Design Optimization Problems using an Artificial Bee Colony Algorithm. J Ind Manag Optim 10:777–794

Gomes H, Awruch A, Lopes P (2011) Reliability Based Optimization of Laminated Composite Structures using Genetic Algorithms and Artificial Neural Networks. Struct Saf 33:186–195

Gu X, Dai J, Huang X, Li G (2016) Reliable Optimisation Design of Vehicle Structure Crashworthiness under Multiple Impact Cases. Int J Crashworthiness, published online

Gu X, Lu J, Wang H (2015) Reliability-based Design Optimization for Vehicle Occupant Protection System based on Ensemble of Metamodels. Struct Multidiscip Optim 51:533–546

Haldar A, Mahadevan S (2000) Probability, Reliability, and Statistical Methods in Engineering Design. Wiley

Hasofer A, Lind N (1974) Exact and Invariant Second Moment Code Format. J Mech Div ASCE 100:111–121

Hohenbichler M, Gollwitzer S, Kruse W, Rackwitz R (1987) New Light on First- and Second-Order Reliability Methods. Struct Saf 4:267–284

Hsu K-S, Chan K-Y (2010) A Filter-based Sample Average SQP for Optimization Problems with Highly Nonlinear Probabilistic Constraints. J Mech Des Trans ASME 132:111002

Ju BH, Lee BC (2008) Reliability-based Design Optimization using a Moment Method and a Kriging Metamodel. Eng Optim 40:421–438

Kang SC, Koh HM, Choo JF (2010) An Efficient Response Surface Method using Moving Least Squares Approximation for Structural Reliability Analysis. Probabilistic Eng Mech 25:365–371

Karadeniz H, Togan V, Vrouwenvelder T (2009) An Integrated Reliability-based Design Optimization of Offshore Towers. Reliab Eng Syst Saf 94:1510–1516

Klarbring A, Strömberg N (2013) Topology Optimization of Hyperelastic Bodies including Non-zero Prescribed Displacements. Struct Multidiscip Optim 47:37–48

Lee I, Noh Y, Yoo D (2015) A Novel Second Order Reliability Method (SORM) using Non-central or Generalized Chi-Squared Distributions. J Mech Des Trans ASME 134:100912

Lemaire M (2009) Structural Reliability. Wiley

Lim J, Lee B, Lee I (2014) Second-order Reliability Method-based Inverse Reliability Analysis using Hessian Update for Accurate and Efficient Reliability-based Design Optimization. Int J Numer Methods Eng 100:773–792

Lim J, Lee B, Lee I (2016) Post Optimization for Accurate and Efficient Reliability-based Design Optimization using Second-order Reliability Method based on Importance Sampling and Its Stochastic Sensitivity Analysis. Int J Numer Methods Eng 107:93–108

Mansour R, Olsson M (2014) A Closed-form Second Order Reliability Method using Noncentral Chi-Squared Distributions, vol 136

Mansour R, Olsson M (2016) Response Surface Single Loop Reliability-based Design Optimization with Higher Order Reliability Assessment. Struct Multidiscip Optim 54:63–79

Qu X, Haftka RT, Venkataraman S, Johnson TF (2003) Deterministic and Reliability-Based Optimization of Composite Laminates for Cryogenic Environments. AIAA J 41:2029–2036

Shetty NK, Guedes Soares C, Thoft-Christensen P, Jensen FM (1998) Fire Safety Assessment and Optimal Design of Passive Fire Protection for Offshore Structures. Reliab Eng Syst Saf 61:139–149

Song C, Lee J (2011) Reliability-based Design Optimization of Knuckle Component using Conservative Method of Moving Least Squares Meta-models. Probabilistic Eng Mech 26:364–379

Strömberg N (2016) Reliability Based Design Optimization by using a SLP Approach and Radial Basis Function Networks, in the proceedings of the ASME 2016 International Design Engineering Technical Conferences & Computers and Information in Engineering Conference IDETC/CIE 2016, August 21-24, Charlotte, North Carolina, USA

Strömberg N (2011) An Eulerian Approach for Simulating Frictional Heating in Disc-Pad Systems. Eur J Mech A/Solids 30:673–683

Tvedt L (1983) Two Second Order Approximations to the Failure Probability, Technical report: RDIV/20-004-83 Det Norske Veritas

Valdebenito MA, Schuëller GI (2010) A Survey on Approaches for Reliability-Based Optimization. Struct Multidiscip Optim 42:645–663

Yoo D, Lee I, Cho H (2014) Probabilistic Sensitivity Analysis for Novel Second-order Reliability Method (SORM) using Generalized Chi-squared Distribution. Struct Multidiscip Optim 50:787–797

Youn BD, Choi KK (2004) A New Response Surface Methodology for Reliability-Based Design Optimization. Comput Struct 82:241–256

Youn BD, Choi KK (2004) An Investigation of Nonlinearity of Reliability-Based Design Optimization Approaches. J Mech Des 126:403–411

Zhou J, Xu M, Li M (2016) Reliability-based Design Optimization concerning Objective Variation under Mixed Probabilistic and Interval Uncertainties. J Mech Des Trans ASME 138:114501

Zhu Z, Du X (2016) Reliability Analysis with Monte Carlo Simulation and Dependent Kriging Predictions. J Mech Des Trans ASME 138(12):121403

Appendices

Appendix A: Distributions

In this appendix the density distribution functions used in this work, and the corresponding gradients, are presented. The relationships between the distribution parameters and the mean \(\mathrm {E}[X]\) and the variance \(\text {VAR}[X]\) are given in Table 4. The distributions are plotted in Fig. 2 for \(\mathrm {E}[X]=1\) and \(\text {VAR}[X]=0.1^{2}\).

Normal

Lognormal

Gumbel

Gamma

Weibull
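As an illustration of how such distributions enter the IsoT \(Y = {\Phi }^{-1}(F(X;\boldsymbol {\theta }))\), the following minimal Python sketch matches the parameters of a lognormal and a Gumbel variable to \(\mathrm {E}[X]=1\) and \(\text {VAR}[X]=0.1^{2}\) and maps a physical value to the standard normal space. The parameterizations used here (mean and standard deviation of \(\ln X\) for the lognormal, location and scale of the Gumbel distribution for maxima) are the common textbook ones and are assumptions; they may differ from the conventions of Table 4. All function names are hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()          # zero mean, unit standard deviation
EULER_GAMMA = 0.5772156649015329   # Euler-Mascheroni constant


def lognormal_params(mean, var):
    """Return (m, s), the mean and standard deviation of ln(X) (assumed parameterization)."""
    s2 = math.log(1.0 + var / mean**2)
    return math.log(mean) - 0.5 * s2, math.sqrt(s2)


def gumbel_params(mean, var):
    """Return (loc, scale) of a Gumbel distribution for maxima (assumed parameterization)."""
    scale = math.sqrt(6.0 * var) / math.pi
    return mean - EULER_GAMMA * scale, scale


def lognormal_cdf(x, m, s):
    return STD_NORMAL.cdf((math.log(x) - m) / s)


def gumbel_cdf(x, loc, scale):
    return math.exp(-math.exp(-(x - loc) / scale))


def iso_transform(cdf_value):
    """Iso-probabilistic transformation Y = Phi^{-1}(F(X))."""
    return STD_NORMAL.inv_cdf(cdf_value)


# Moment matching for E[X] = 1 and VAR[X] = 0.1^2
m, s = lognormal_params(1.0, 0.1**2)
loc, scale = gumbel_params(1.0, 0.1**2)

# Map the physical value x = 1.2 to the standard normal space
y_logn = iso_transform(lognormal_cdf(1.2, m, s))
y_gumb = iso_transform(gumbel_cdf(1.2, loc, scale))
print(y_logn, y_gumb)
```

For the lognormal case the transformation reduces to \(Y=(\ln X - m)/s\), which gives a simple check of the numerical inverse.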

Appendix B: Hasofer-Lind approach

In the established Hasofer-Lind approach, the reliability index \(\beta _{\text {HL}}\) is defined by the distance from the origin to the closest point, denoted the MPP, on the failure surface \(h(\boldsymbol {Y})=-g(\boldsymbol {X}(\boldsymbol {Y}))\), see Fig. 1. This is established by solving

The Hasofer-Lind reliability index is then defined by the corresponding optimal solution \(\boldsymbol {Y}^{\text {MPP}}\) in the following manner

or, by using \(\nabla h^{T}\boldsymbol {Y}=-\lambda \nabla h^{T}\nabla h\sqrt {\boldsymbol {Y}^{T}\boldsymbol {Y}}\) from the necessary optimality conditions to (56), as

This can also be expressed in \(g=g(\boldsymbol {X}(\boldsymbol {Y}))\) by utilizing (4). If this is done, then one finally arrives at

from which (22) is obtained by using (18).
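To make the definition of the MPP concrete, the sketch below finds the point on \(h(\boldsymbol {Y})=0\) closest to the origin with the classical HL-RF fixed-point iteration. This is a swapped-in textbook technique, not the Newton algorithm with inexact Jacobian and Armijo line-search used in this work, and the linear limit state in the example is a hypothetical test case chosen because its reliability index is known in closed form.

```python
import math
from statistics import NormalDist


def hlrf_mpp(h, grad_h, y0, tol=1e-10, max_iter=100):
    """Return (y_mpp, beta) for the surface h(y) = 0 using the HL-RF iteration."""
    y = list(y0)
    for _ in range(max_iter):
        g = grad_h(y)
        norm2 = sum(gi * gi for gi in g)
        # Closest point to the origin on the linearized surface
        # h(y) + grad_h(y)^T (y_new - y) = 0
        factor = (sum(gi * yi for gi, yi in zip(g, y)) - h(y)) / norm2
        y_new = [factor * gi for gi in g]
        step = math.sqrt(sum((a - b) ** 2 for a, b in zip(y_new, y)))
        y = y_new
        if step < tol:
            break
    beta = math.sqrt(sum(yi * yi for yi in y))
    return y, beta


# Hypothetical linear limit state: h(y) = 3 - y1 - y2
def h_lin(y):
    return 3.0 - y[0] - y[1]


def grad_h_lin(y):
    return [-1.0, -1.0]


y_mpp, beta = hlrf_mpp(h_lin, grad_h_lin, [0.0, 0.0])
pf_form = NormalDist().cdf(-beta)   # FORM estimate of the failure probability
print(y_mpp, beta, pf_form)
```

For a linear surface \(\boldsymbol {a}^{T}\boldsymbol {Y}+b=0\) the distance to the origin is \(|b|/\|\boldsymbol {a}\|\), so the example should return \(\beta _{\text {HL}}=3/\sqrt {2}\).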

Appendix C: Halton and Hammersley sampling

The Halton and Hammersley sequences are two examples of sparse uniform samplings generated by quasi-random sequences. Let \(p_{1}, p_{2}, \ldots , p_{D}\) represent a sequence of prime numbers, where \(D\) is the dimension of a Halton point defined by

for a non-negative integer \(k\). Furthermore, for any prime number \(p\),

where the integers \(a_{0}, a_{1}, \ldots , a_{M}\) are obtained from the fact that \(k\) can be represented as

A quasi-random set of \(N\) Halton points is now simply obtained by taking a sequence of Halton points in (60) for \(k=0, 1, 2, \ldots , N-1\). By defining the Hammersley point as

we can easily generate a set of Hammersley sampling points in a similar way as for the Halton set. The radical inverse \({\Phi }(k,p)\) appearing in (61) is established in this work by the following algorithm:
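A minimal Python sketch of the standard digit-reversal computation of the radical inverse is given below; it may differ in detail from the algorithm used in this work. It assumes the usual definitions: \(k\) is written in base \(p\) with digits \(a_{0}, a_{1}, \ldots , a_{M}\), the radical inverse is \(a_{0}/p + a_{1}/p^{2} + \cdots + a_{M}/p^{M+1}\), a Halton point collects the radical inverses for \(p_{1},\ldots ,p_{D}\), and a Hammersley point uses \(k/N\) as its first coordinate followed by \(D-1\) radical inverses. The function names are hypothetical.

```python
def radical_inverse(k, p):
    """Mirror the base-p digits of the non-negative integer k about the radix point."""
    value, scale = 0.0, 1.0 / p
    while k > 0:
        k, digit = divmod(k, p)   # peel off the least significant base-p digit
        value += digit * scale
        scale /= p
    return value


def halton_points(n, primes):
    """Return n Halton points of dimension len(primes) for k = 0, ..., n-1."""
    return [[radical_inverse(k, p) for p in primes] for k in range(n)]


def hammersley_points(n, primes):
    """Return n Hammersley points of dimension len(primes) + 1 (assumed definition)."""
    return [[k / n] + [radical_inverse(k, p) for p in primes] for k in range(n)]


halton = halton_points(4, [2, 3])
hammersley = hammersley_points(4, [2])
print(halton)
print(hammersley)
```

The Halton set can be extended point by point, whereas the Hammersley set requires \(N\) to be fixed in advance because of the \(k/N\) coordinate.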

Appendix D: The welded beam problem

A well-established engineering benchmark is the welded beam problem shown in Fig. 11, which reads

where the shear stress \(\tau (x_{i})\), normal stress \(\sigma (x_{i})\), displacement \(\delta (x_{i})\) and critical force \(P_{c}(x_{i})\) are given by

Here, \(G=12\times 10^{6}\) psi is the shear modulus, \(E=30\times 10^{6}\) psi is Young’s modulus and \(L=14\) in. In addition, the variables are bounded by \(0.1\leq x_{1},x_{4} \leq 2\) and \(0.1\leq x_{2}, x_{3} \leq 10\). The optimal solution obtained by GA and SLP is \((0.24437,6.2175,8.2915,0.24437)\). An almost identical solution was presented by Garg (2014).
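The reported optimum can be checked against one widely used variant of the welded beam formulation, sketched below in Python. Only \(L\) and \(E\) are taken from the text above; the load \(P=6000\) lb, the stress limits of 13600 psi and 30000 psi, the cost coefficients and the expression for the polar moment \(J\) are assumptions borrowed from the common literature version of the benchmark and may differ from the equations of this work. The critical force \(P_{c}\) is omitted because its form varies between references.

```python
import math

L_BEAM = 14.0        # beam length in inches (from the text)
E_MOD = 30e6         # Young's modulus in psi (from the text)
P_LOAD = 6000.0      # ASSUMED applied load in lb
TAU_MAX = 13600.0    # ASSUMED shear stress limit in psi
SIGMA_MAX = 30000.0  # ASSUMED normal stress limit in psi


def cost(x):
    """ASSUMED fabrication cost of the common literature variant."""
    x1, x2, x3, x4 = x
    return 1.10471 * x1**2 * x2 + 0.04811 * x3 * x4 * (14.0 + x2)


def shear_stress(x):
    """Combined weld shear stress from primary and torsional contributions."""
    x1, x2, x3, _ = x
    tau_primary = P_LOAD / (math.sqrt(2.0) * x1 * x2)
    moment = P_LOAD * (L_BEAM + x2 / 2.0)
    radius = math.sqrt(x2**2 / 4.0 + ((x1 + x3) / 2.0) ** 2)
    # ASSUMED polar moment; some references use a value twice as large
    polar = 2.0 * (x1 * x2 / math.sqrt(2.0)) * (x2**2 / 12.0 + ((x1 + x3) / 2.0) ** 2)
    tau_torsion = moment * radius / polar
    return math.sqrt(
        tau_primary**2 + tau_primary * tau_torsion * x2 / radius + tau_torsion**2
    )


def normal_stress(x):
    """Bending stress in the beam."""
    return 6.0 * P_LOAD * L_BEAM / (x[3] * x[2] ** 2)


def displacement(x):
    """End deflection of the beam."""
    return 4.0 * P_LOAD * L_BEAM**3 / (E_MOD * x[2] ** 3 * x[3])


x_opt = (0.24437, 6.2175, 8.2915, 0.24437)   # optimum reported in the text
print(cost(x_opt), shear_stress(x_opt), normal_stress(x_opt), displacement(x_opt))
```

Under these assumptions the shear and normal stress constraints are both close to active at the reported solution, which is consistent with it being a constrained optimum.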

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.