Abstract

Complex systems are difficult to manage, operate and maintain. This is why we see teams of highly specialised engineers in industries such as aerospace, nuclear and subsurface. Condition based monitoring is also employed, instead of periodic maintenance, to maximise the efficiency of extensive maintenance programmes. A level of automation is often required in such complex engineering platforms in order to manage them effectively and safely. Advances in Artificial Intelligence (AI) related technologies have offered greater levels of automation, but this potentially shifts the weight of decision making away from the operator to the machine. Implementing AI or complex algorithms into a platform can mean that the operators’ control over the system is diminished or removed altogether. For example, in the Boeing 737 MAX disasters, AI had been added to a platform and removed the operators’ control of the system. This meant that the operator could not move outside the highly restrictive, algorithm-defined “envelope” of operation. This paper analyses the challenges of AI driven condition based monitoring, where there is a potential to see consequences similar to those seen in control engineering. As society becomes increasingly dependent on algorithm driven technology, it is prudent to ask not only whether we should implement AI into complex systems, but how this can be achieved ethically and safely in order to reduce risk to life.


1 Introduction

Maintaining a system of component parts is an extremely complex undertaking, not least because the component parts can be affected by the raw material, running hours, shock events, environmental conditions or how far they are pushed to work within their physical, not regulatory, boundaries (Footnote 1) (Takata et al. 2004; Frangopol et al. 2012). A system inevitably has to be shut down, partially or fully, in order to fix the affected component part. Due to breakage or decay of a component, further linked components or other system parts may also need to be replaced at the same time. This leads to lost revenue or capability, as shutdown times lengthen with increasing system complexity (Goossens and Basten 2015). With increasing complexity and interdependency of systems comes a growing need to maximise the efficiency of maintenance. Many industries are affected by maintenance efficiency issues, particularly where redundancy is difficult or expensive to achieve; some of the most critical are aerospace (Dalal et al. 1989), nuclear (Stott et al. 2018) and subsurface (Footnote 2) (Dui et al. 2020).

An example of this would be the Royal Navy’s nuclear subsurface vessels. Because single assets are so expensive, they are few in number, and they are also highly complex engineering platforms. These platforms lack redundancy in onboard systems due to space limitations. Here the operational output is heavily reliant on the efficiency of maintenance. Due to the complexity of the engineering, the highly trained and specialised manpower required to both operate and maintain such an asset is significantly greater than in many other engineering industries. In this example the operators are highly trained engineers. The operators (Footnote 3) are so highly attuned that they know the ‘feel’ of the vessel and every valve and connection in the system. Similarly, Captain Sullenberger states that “pilots must be capable of absolute mastery of the aircraft and the situation at all times, a concept pilots call airmanship” (Sullenberger 2019). In the same way that drivers could feel that something was wrong with their car in the days of unassisted steering, an operator knows their system. This, however, depends on the operator’s level of competence, and many human factors need to be considered. This is mitigated by operators being heavily trained. Nonetheless, in some spheres, human error is what drives the argument for greater automation (Brown 2016). This paper aims to address the potential risks of greater automation in engineering rather than the competition inherent in human-computer decision making.

Because its systems are complex, the Royal Navy must devote significant time and effort to rectifying defects, which prevents more crucial operations from being carried out (Widodo and Yang 2007; Mobley 1990). The Royal Navy’s current fleet of Vanguard Class SSBN submarines is being extended beyond its original design life. This generates significant engineering challenges as defects begin to dominate the maintenance workload for these ageing platforms. The increasing frequency of sudden failures, which can induce significant programme pressures, raises important questions about the performance of the current reliability centred maintenance (RCM) philosophies, of which Condition Based Monitoring (CBM) is a core component.

New artificial intelligence (AI) systems in condition based monitoring aim to eliminate or minimise shutdown time and loss of revenue, as well as to ensure that platforms work within their ‘regulatory envelope’ (see details in Sect. 5). This paper discusses whether the successes of AI on simpler systems can indeed be transferred to more complex platforms without further risk. From the authors’ perspective, the impacts of introducing AI into predictive maintenance have not been fully addressed. Whilst many papers are currently examining technical progression in this area, here we aim to address some of the potential ethical risks and the difficulties of practical application in complex systems.

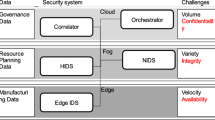

In this paper CBM is first introduced (Sect. 2) and its relevance to the maintenance of complex engineering platforms is discussed. Section 3 explores the analytical work that may potentially introduce AI into predictive maintenance, and then discusses the complex issues that may arise when such technologies are introduced into real systems. In Sect. 4 examples of advanced condition based monitoring employed in industry are investigated; this analysis illustrates how greater data capture can be driven through advanced technologies. In Sect. 5 the importance of the context in which new technology could operate is examined, together with the importance of the operator and their ability to recognise contexts where the rules have to be applied with discretion. Section 6 presents examples of the risks of removing the operator from decision making and also considers operator-algorithm disconnect. Section 7 examines the potential risks of AI solutions, in particular the risk to maintenance of subsurface nuclear vessels. Section 8 contains a summary of the paper, the conclusions and directions for further work.

2 Condition based monitoring

RCM has been widely adopted in engineering industries since the extensive studies of US aviation maintenance during the 1960s and 1970s, which examined the various failure modes that exist in engineering assets (Allen 2001). RCM is a methodology that aims to formulate the most effective maintenance strategy for each individual asset based on its failure modes. In effect, its overall aim is to maximise the efficiency of maintenance, and as such, where it is effective, condition based maintenance should be adopted (Fig. 1).

Under a condition based maintenance philosophy, maintenance is only conducted when there is objective and observable evidence that failure is impending. A number of studies have compared condition based and periodic (time based) maintenance and examined the factors that dictate the effectiveness of CBM (Mann et al. 1995; Jonge et al. 2017; Engeler et al. 2016). Ideally, the closer to failure that repair action can be taken, the more efficient the maintenance action is; this is one of the main objectives of CBM. However, for a condition based maintenance strategy to be feasible, the following three criteria need to be met, according to Mokashi et al. (2002):

- A clear potential failure condition can be defined.
- The P-F interval should be reasonably consistent. The P-F interval is the time between the point at which condition deterioration can be detected (P) and the point at which functional failure occurs (F).
- The asset can be monitored/inspected at time intervals less than the P-F interval.
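The three criteria can be expressed as a simple screening check. The sketch below is a minimal illustration, assuming that past observations of the P-F interval for one failure mode are available in hours; the function name `cbm_feasible` and the coefficient-of-variation threshold used to judge "reasonably consistent" are hypothetical choices, not part of Mokashi et al. (2002).

```python
from statistics import mean, pstdev

def cbm_feasible(pf_intervals_h, inspection_interval_h, max_cv=0.3):
    """Simplified screen against the three CBM feasibility criteria.

    pf_intervals_h: observed P-F intervals (hours) for one failure mode; an
        empty list stands for "no definable potential failure condition".
    max_cv: hypothetical threshold on the coefficient of variation used here
        to decide whether the P-F interval is 'reasonably consistent'.
    """
    # Criterion 1: a potential failure condition must be definable.
    if not pf_intervals_h:
        return False, "no definable potential failure condition"
    # Criterion 2: the P-F interval should be reasonably consistent.
    cv = pstdev(pf_intervals_h) / mean(pf_intervals_h)
    if cv > max_cv:
        return False, f"P-F interval too variable (CV={cv:.2f})"
    # Criterion 3: inspect more often than the (shortest observed) P-F interval.
    if inspection_interval_h >= min(pf_intervals_h):
        return False, "inspection interval not shorter than P-F interval"
    return True, "CBM feasible"
```

For example, `cbm_feasible([400, 450, 420], 100)` passes, whereas the same intervals with a 500-hour inspection interval fail the third criterion. Comparing against the shortest observed interval is a deliberately conservative design choice.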

Gearbox diagram (Jing et al. 2017)

With advances in both sensor and analytic technologies, there is reason to hope that these conditions can be met across an increasingly wide range of engineering assets. Some of the existing advances in sensor technologies are explored in Sect. 4, where increasingly continuous monitoring techniques are examined. This paper will, however, examine the implementation of advanced analytics and AI to predict the remaining useful life (RUL) of engineering assets, and in particular the risks that exist, including the human interface with such systems.

3 How AI is being developed to implement CBM systems: a gearbox example

Various methods can be used to monitor equipment and its components in order to observe degradation. When utilising condition monitoring data provided by equipment sensors or inspection, a reliable method of interpreting that data is needed to assess equipment reliability. A variety of degradation models are used in CBM, such as the Wiener process, Gamma process, inverse Gaussian process, general path and random coefficient models (Jonge et al. 2017; Wu et al. 2015).
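To make the difference between two of these models concrete, the following sketch simulates a Wiener process with drift (which can decrease between steps) and a stationary Gamma process (whose increments are non-negative, so the path is monotonic), and reads off the first time each path reaches a failure threshold. The parameter names and the threshold-crossing definition of failure are illustrative assumptions; the cited studies fit such models to real data rather than simulating them like this.

```python
import math
import random

def wiener_path(drift, diffusion, dt, steps, rng):
    """Wiener process with drift: increments ~ Normal(drift*dt, diffusion**2 * dt).
    The path can decrease between steps, so it is not strictly monotonic."""
    x, path = 0.0, [0.0]
    for _ in range(steps):
        x += drift * dt + diffusion * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        path.append(x)
    return path

def gamma_path(shape_rate, scale, dt, steps, rng):
    """Stationary gamma process: increments ~ Gamma(shape_rate*dt, scale).
    Increments are non-negative, so the path is monotonic (e.g. wear)."""
    x, path = 0.0, [0.0]
    for _ in range(steps):
        x += rng.gammavariate(shape_rate * dt, scale)
        path.append(x)
    return path

def first_passage(path, threshold, dt):
    """First sampled time at which the path reaches the threshold, else None."""
    for i, x in enumerate(path):
        if x >= threshold:
            return i * dt
    return None  # threshold not reached within the simulated horizon

rng = random.Random(42)
wear = gamma_path(shape_rate=2.0, scale=0.5, dt=0.1, steps=500, rng=rng)
drifting = wiener_path(drift=1.0, diffusion=0.5, dt=0.1, steps=500, rng=rng)
print(first_passage(wear, 10.0, 0.1), first_passage(drifting, 10.0, 0.1))
```

Both processes here have the same mean degradation rate of one unit per time unit; only the Gamma path is guaranteed never to "heal", which is why it is often preferred for wear-type degradation.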

As shown in Jing et al. (2017), a convolutional neural network based feature learning and fault diagnosis method can be used for the condition based monitoring of a gearbox. A gearbox is a much simpler system than those found in a vessel or aircraft, and in that study the gearbox is analysed in isolation from the wider system it would normally be part of.

Many studies of the use of neural networks have been undertaken (Kane and Andhare 2016, 2020; Tran et al. 2018; Li et al. 2018a), and various ways of applying neural networks can be seen. For example, Li et al. (2018a) applied a deep belief network to diagnose gearboxes and bearings using statistical features in the time, frequency and time-frequency domains. In Chen et al. (2012), several time and frequency features were extracted and a convolutional neural network (CNN) was employed to classify different health conditions of a gearbox.

In the following, we discuss the deep learning algorithm developed by Jing et al. (2017) to highlight in detail the gap between academic research and the needs of operators of complex systems.

The model in Jing et al. (2017) constructs feature profiles based on frequency data from vibration signals, and the study tests the performance of feature learning from raw data, the frequency spectrum and combined time-frequency data. The authors describe a deep learning algorithm, or deep neural network, that uses “multiple levels of abstraction” by employing a “hierarchical structure with multiple neural layers” (Jing et al. 2017). Features are first extracted by various signal analysis methods, and these features are used to train and test the neural network (Jing et al. 2017). Figure 2 shows how the data passes through the CNN model from Jing et al. (2017).

Flowchart of the proposed method (marked in red) and its comparative methods (Jing et al. 2017)
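The basic building block of such a CNN can be sketched in plain Python as a sliding filter (cross-correlation) over a 1D segment, followed by a ReLU non-linearity and max pooling. This is a generic illustration only, assuming hand-chosen filter weights and a toy segment; it is not the architecture, segment length or filter configuration used by Jing et al. (2017), whose weights are learned during training.

```python
def conv1d(signal, kernel, bias=0.0):
    """'Valid' 1D cross-correlation: the operation a CNN convolution layer computes."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(len(signal) - k + 1)]

def relu(xs):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, x) for x in xs]

def max_pool(xs, size):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(xs[i:i + size]) for i in range(0, len(xs) - size + 1, size)]

def conv_layer(segment, kernels, pool=2):
    """One layer: each filter -> ReLU -> max pooling, one feature map per filter."""
    return [max_pool(relu(conv1d(segment, k)), pool) for k in kernels]

# A toy 1D segment and two hand-picked filters (a trained CNN learns these).
segment = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0]
feature_maps = conv_layer(segment, [[1.0, -1.0], [0.5, 0.5]])
```

Here `feature_maps` evaluates to `[[1.0, 1.0, 1.0], [0.5, 0.0, 0.5]]`: the differencing filter responds to the rising edges of the oscillation, the averaging filter to its positive half-cycles. Stacking several such layers is what gives the "hierarchical structure" referred to above.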

The system works in a similar way to image processing; a 1D segment of raw data is used as the input (Jing et al. 2017). Jing et al. state that “deep learning with a deep architecture achieves better performance than shallow architecture” (Jing et al. 2017), but do not give any reasons for the chosen methodology beyond saying that it is based on image processing. They further state that a deeper neural network becomes extremely complex to build and architect, which led them to examine the data and, on that basis, to choose the parameters of the network (data segments, size of filters, and number of nodes and layers); the configuration with the best performance is then selected. When best performance is the selection criterion, however, the best-performing model may not be the most useful for implementation in a real system once context and operational knowledge are taken into account.

Jing et al. (2017) then move on to evaluating the chosen model and, to compare the results of the proposed method, use several further models: a fully connected neural network, a support vector machine and a random forest. They state that these methods are widely used and have achieved success.
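The concern that the "best performance" model may not be the most useful in practice can be illustrated with a small worked example. The confusion matrices below are invented purely for illustration (they are not results from Jing et al. (2017) or any other cited study), and the relative costs of a missed fault and a false alarm are assumptions that, in a real system, the operator would be best placed to supply.

```python
def accuracy(cm):
    """Fraction of correct predictions."""
    return (cm["tp"] + cm["tn"]) / sum(cm.values())

def expected_cost(cm, cost_fn, cost_fp):
    """Average cost per prediction when a missed fault (false negative) and a
    false alarm (false positive) carry different operational costs."""
    return (cm["fn"] * cost_fn + cm["fp"] * cost_fp) / sum(cm.values())

# Hypothetical confusion matrices for two candidate models (invented numbers).
candidates = {
    "model_A": {"tp": 40, "fn": 10, "fp": 2, "tn": 948},
    "model_B": {"tp": 48, "fn": 2, "fp": 20, "tn": 930},
}

best_by_accuracy = max(candidates, key=lambda m: accuracy(candidates[m]))
best_by_cost = min(
    candidates,
    key=lambda m: expected_cost(candidates[m], cost_fn=100.0, cost_fp=1.0),
)
```

Here `model_A` has the higher accuracy (0.988 against 0.978), but once a missed fault is assumed to cost 100 times as much as a false alarm, `model_B` has the lower expected cost (0.22 against about 1.0 per prediction). Which model is "best" depends on costs that come from the operational context, not from the data alone.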

In general, academic studies are performed on non-complex, isolated systems. Systems developed in isolation, such as the one discussed, could be particularly vulnerable to the environmental changes that exist in reality, because in a more complex system there are dependencies, interactions and environmental functions to consider. Gearbox vibrations in a submarine engine room, for example, may be influenced by the condition of the various drive train components, the lubrication oil, and compartment temperature and humidity. How such factors affect the condition monitoring needs to be understood; otherwise the model may become ineffectual, perhaps without anyone knowing. If the final integration with whole systems, users and operators is not considered at the outset, the implementation can therefore fail. In reality, the academic studies and resulting models would need to be developed in collaboration with the operator in order to be integrated into larger, more complex systems with operational restrictions. In addition, the operator should be able to understand and clearly see how the model works and how its recommendations are formed. Most importantly, there must be the means and awareness for the operator to override the model’s decisions as the operator sees fit.
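One way to keep the operator in control is to have the model emit a recommendation, together with the evidence behind it, rather than an action, and to make the operator's decision final and auditable. The sketch below is hypothetical: the `Recommendation` fields, the `decide` function and the rule that an override must carry a reason are illustrative design assumptions, not an existing system or API.

```python
from dataclasses import dataclass, field

@dataclass
class Recommendation:
    """A model output framed as advice, with the evidence shown to the operator."""
    component: str
    action: str                  # model's suggestion, e.g. "replace" or "continue"
    predicted_rul_h: float       # predicted remaining useful life in hours
    contributing_features: dict  # feature name -> contribution, for explainability
    final_action: str = ""
    override_reason: str = ""
    audit: list = field(default_factory=list)

def decide(rec, operator_action=None, reason=""):
    """Record the final decision; the operator's choice always takes precedence."""
    if operator_action is None or operator_action == rec.action:
        rec.final_action = rec.action
        rec.audit.append(f"accepted: {rec.action}")
    else:
        if not reason:
            raise ValueError("an override must record the operator's reason")
        rec.final_action = operator_action
        rec.override_reason = reason
        rec.audit.append(f"override: {rec.action} -> {operator_action} ({reason})")
    return rec

rec = Recommendation("gearbox_1", "replace", 120.0,
                     {"rms_vibration": 0.7, "oil_temp": 0.2})
decide(rec, "continue", "vibration rise follows full-power trial; re-measure at cruise")
```

Recording the reason alongside the model's evidence also gives developers the contextual feedback that, as argued above, isolated academic models tend to lack.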

It is difficult for the developer, the operator and the user alike when many studies in this area begin in the mechanical sphere and then become opaque. This makes interdisciplinary work difficult, as the operator will typically not be a skilled neural network developer with deep prior knowledge. The data that is collected to run the AI must be collected rigorously and be contextually relevant, and an operator or user would be best placed to inform this.

With the analysis and monitoring being remote from the operator, the operator would be detached from the in-depth technology (Borth and Hendriks 2016). This would remove the opportunity for the operator to monitor the system themselves or indeed override decisions. Incorrect decisions could therefore be made out of context within the AI without the operator knowing that this has happened (Berente et al. 2019).

In the next section, we examine the advances in degradation measuring techniques in industry and how input data is a significant factor in AI or non-AI models. The AI systems that we have seen depend on data, and the quality and depth of this data are crucial to building models that work for the operator.

4 Degradation measuring techniques and the move towards algorithmic based systems of maintenance

In order for CBM to be effective, there needs to be a means to accurately measure or infer component degradation. As identified in Sect. 2, for CBM to be feasible the asset must be monitored/inspected at time intervals less than the P-F interval. Live monitoring is thus desirable, but it creates large volumes of data that require analysis. With advances in sensor technology offering remote and continuous condition monitoring, there is significant promise for a highly developed maintenance strategy, provided that failure can be accurately predicted. In this section, we explore some of the successes achieved in sensor technology and data gathering.
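The data volume generated by live monitoring can be estimated with simple arithmetic: samples per second × channels × bytes per sample × seconds monitored. The figures used below (a 25.6 kHz sampling rate, 16 channels and 2-byte samples) are hypothetical values for a vibration monitoring installation, chosen only to show the order of magnitude.

```python
def monitoring_bytes(sample_rate_hz, channels, bytes_per_sample, seconds):
    """Raw data volume: samples/s x channels x bytes/sample x duration (s)."""
    return sample_rate_hz * channels * bytes_per_sample * seconds

# Hypothetical installation: 25.6 kHz sampling, 16 channels, 16-bit samples, one day.
per_day = monitoring_bytes(25_600, 16, 2, 24 * 3600)
per_day_gb = per_day / 1e9  # decimal gigabytes
```

With these assumptions a single day of raw data is 70,778,880,000 bytes, roughly 71 GB per installation before any processing, which is why analysis, rather than collection, quickly becomes the bottleneck.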

One field that has made significant strides towards the adoption of a modern condition based maintenance strategy is the wind turbine industry. This is motivated by the fact that the locations are often remote, particularly for off-shore wind, and the number of turbines is large. Inspection costs, including non-operating time, can therefore become inflated (Zhang et al. 2018; Coronado 2014; Yang et al. 2014). This section explores current methods of measuring degradation without application to predictive models.

CBM has encompassed a wide range of continuous sensor and fault detection techniques, such as vibration analysis, oil analysis, temperature measurement, strain measurement and thermography (Yang et al. 2014; Tchakoua et al. 2014). The operational life of a wind turbine can vary significantly based on the weather conditions. Considering this, Rommel et al. (2020) demonstrate how monitored load profiles and environmental conditions can be employed to provide a more informed calculation of the RUL of the wind turbine components. Similarly, Zang et al. (2019) highlight that direct measurement of component condition through vibration analysis, a key health monitoring tool in rotating machinery, can be skewed by operational and environmental variations.
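The point made by Zang et al. (2019), that environmental and operational variation can skew vibration-based indicators, can be illustrated with a simple compensation step: fit how vibration RMS varies with temperature over a period when the component is known to be healthy, then monitor the residual instead of the raw value. This sketch assumes a linear temperature effect and uses ordinary least squares; it is one simple technique, not the method of Zang et al. (2019) or Rommel et al. (2020).

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = a + b * x; returns (a, b)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx
    return mean_y - b * mean_x, b

def compensated_residuals(baseline_temp, baseline_rms, temp, rms):
    """Remove the part of vibration RMS explained by temperature, as learned
    from a healthy baseline period; the residual is the health indicator."""
    a, b = fit_line(baseline_temp, baseline_rms)
    return [r - (a + b * t) for t, r in zip(temp, rms)]

# Hypothetical healthy baseline: RMS rises linearly with compartment temperature.
baseline_temp = [20.0, 25.0, 30.0, 35.0]
baseline_rms = [1.0, 1.1, 1.2, 1.3]
# Two new readings with the same raw RMS at different temperatures.
residuals = compensated_residuals(baseline_temp, baseline_rms, [40.0, 20.0], [1.4, 1.4])
```

The reading at 40 °C leaves a residual of about zero (explained by temperature), while the identical reading at 20 °C leaves a residual of about 0.4 (unexplained, worth investigating). A fixed alarm on raw RMS would treat both the same. If the real temperature effect is non-linear, or other variables such as load or humidity matter, this simple model would itself become a source of error.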

Some further examples of continuous and advanced condition monitoring techniques that have been adopted are given below:

- Advances in acoustic monitoring techniques and fault detection also present opportunities over a wide range of mechanical applications, and in most circumstances sensing equipment that is not integral to the asset can be easily retrofitted.
- Prateepasen et al. (2011) demonstrate that leakage and flow rate of air through a valve can be accurately measured, at relatively low cost, through the turbulent flow and the resulting acoustic emissions.
- In Albarbar et al. (2010), the air-borne acoustic signals from a diesel engine fuel injection system are analysed. Their findings indicated that deviation from default settings could be detected at both high and low speeds, which could be effective in detecting injector spring faults (Albarbar et al. 2010).
- Delvecchio et al. (2018) review a wide range of vibro-acoustic monitoring techniques used to detect and analyse both airborne and structure-borne emissions from internal combustion engines. A wide range of engine faults can be detected through vibration and/or sound pressure emissions, but the time of detection relative to failure varies significantly depending on the fault and emission type (Delvecchio et al. 2018).

Although not prognostic tools, the above techniques demonstrate a significant maturity in fault detection and diagnostic capabilities of modern maintenance systems. These developments can significantly aid engineering decision making in maintenance.

In summary, there have clearly been significant advances in the use of CBM techniques that can provide non-invasive symptomatic data to inform RUL analysis. Greater condition information and data offer the opportunity to employ advanced analytical tools and AI to predict RUL, relying less on engineering judgement. However, degradation measurement and prediction can be vulnerable to measurement errors, shock events, and operational, environmental and endogenous factors. Considering such factors in any RUL modelling increases the complexity of the models, but ultimately, the greater their effect, the poorer the understanding of the P-F interval. For example, where single shock events tend to dominate the degradation process of a component, CBM is ineffectual (Jonge et al. 2017).
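Why shock-dominated failures defeat CBM can be shown with a simple probability argument. With periodic inspection every T hours, and assuming the start of the P-F window falls at a uniformly random point relative to the inspection schedule, the chance that at least one inspection lands inside a window of length L is min(1, L/T). A shock-driven failure has an effective P-F interval of zero, so it is never caught. The function names and the two-population mixture below are illustrative assumptions, not taken from Jonge et al. (2017).

```python
def detection_probability(pf_interval, inspection_interval):
    """P(at least one periodic inspection falls inside the P-F window),
    assuming a uniformly random window start relative to the schedule."""
    if pf_interval <= 0:
        return 0.0  # shock-driven failure: no detectable warning period
    return min(1.0, pf_interval / inspection_interval)

def overall_detection(p_shock, pf_interval, inspection_interval):
    """Mixture: a fraction p_shock of failures are shocks (never detected),
    the rest degrade gradually with the given P-F interval."""
    return (1.0 - p_shock) * detection_probability(pf_interval, inspection_interval)
```

With a 300-hour P-F interval and 100-hour inspections every gradual failure is caught, but if 60% of failures are shock-driven, `overall_detection(0.6, 300, 100)` gives only 0.4, however good the sensors and models are.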

If we consider the wind turbine industry, where risk is reduced because operators are separated from the assets and redundancy through sheer numbers is high, it may be beneficial to accept the potential errors as long as maintenance efficiency across all assets is calculably improved. In the following sections, we explore the risk of removing the operator from decision making and the dangers in the human interface, particularly where explainability is absent.

5 The effect of operational restrictions on system management

When working with a complex system, there are several limits on the components: the physical limit, the regulatory limit and the engineering limit (Kadak and Matsuo 2007). To illustrate the potential hazards of removing the operator from decision making in automated systems, we examine examples from control engineering. Generally speaking, the hazards induced by errors in control engineering are inherently more acute than in maintenance, but the potential vulnerabilities in the engineer/operator and machine interface are intrinsically the same.

The physical limit is one that operators such as pilots, submarine engineers or other operators of complex systems are aware of. A pilot may have to use this knowledge in a sudden emergency where their own experience is crucial to survival. This may lead to manoeuvres outside the regulatory envelope that are nevertheless safe and necessary. The physical limit of the system is the point at which the component breaks. Systems are often designed and regulated in isolation, within the scope of the ‘foreseeable operating conditions’. However, the true operating context has infinite possibilities, and the responsibility falls on the operator when they are working outside the normal operating conditions. This means that wider considerations need to be taken into account and some safety aspects may need to be prioritised over others. In the context of the nuclear submarine, this may require putting whole boat safety (i.e. the threat of sinking) above the safety of the nuclear plant, and recognising when to do so.

The regulatory limit is almost always the most risk averse compared to the physical and engineering limits. Regulators and system design authorities use limits that academically might be reasonable but practically can prevent normal operations.

The engineering limit is more risk aware than the regulatory limit. It may lie beyond the regulatory limit and is certainly beyond the normal operating zone, but it is still within a tolerable limit for the platform. This is about understanding how far you can push the system without damage or detriment.

In aircraft, the distinction between these limits is crucial for operation. “In aerodynamics, the flight envelope defines operational limits for an aerial platform with respect to maximum speed and load factor given a particular atmospheric density. The flight envelope is the region within which an aircraft can operate safely. If an aircraft flies ‘outside the envelope’ it may suffer damage; the limits should therefore never be exceeded. The term has also been adopted in other fields of engineering when referring to the behaviour of a system which is operating beyond its normal design specification, i.e. ‘outside the flight envelope’ (even if the system is not even actually flying)” (Knowledgebase 2019).

This is illustrated in Fig. 3, which shows a basic V-n (airspeed versus load factor) diagram. The diagram is generic rather than specific to an airframe, and its shape varies between aircraft.

Typical V-n diagram (Knowledgebase 2019)

The green area shows the normal operating limit determined by the regulator. The yellow and orange areas are also included in the operating limit because, from experience, the operator may know that operating there would cause some structural damage but would not necessarily cause failure. This damage may also be preferable to the catastrophic failure that could follow if no action is taken, and may be tolerated when more urgent risks require equipment to be operated outside its normal limits. The red limit represents the physical limit of the system or components. It is vital that the operator understands these limits and is able to use them intelligently.
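The zones described above can be sketched as a classifier for a single (airspeed, load factor) point. This is a simplified, hypothetical model: only positive load factors are considered, the stall boundary is taken as n = (V / V_S)², where V_S is the 1 g stall speed, and the yellow/orange engineering band is approximated as lying between the limit load factor and an assumed ultimate load of 1.5 times limit load (a factor of safety commonly used in airworthiness standards), or between the never-exceed speed and the dive speed. The numbers in the usage note are invented and do not describe any real aircraft.

```python
def vn_zone(v, n, v_stall_1g, v_ne, v_dive, n_limit, ultimate_factor=1.5):
    """Classify an (airspeed, positive load factor) point on a simplified V-n diagram.

    Assumptions: positive g only; stall boundary n = (v / v_stall_1g) ** 2;
    'physical limit' = beyond ultimate load (n_limit * ultimate_factor) or
    beyond the dive speed; 'engineering margin' = between limit and ultimate
    load, or between the never-exceed speed and the dive speed.
    """
    if n > (v / v_stall_1g) ** 2:
        return "stall (beyond lift boundary)"
    if n > n_limit * ultimate_factor or v > v_dive:
        return "physical limit exceeded"
    if n > n_limit or v > v_ne:
        return "engineering margin"
    return "normal envelope"
```

With V_S = 60 kt, V_NE = 180 kt, V_D = 200 kt and a limit load factor of 2.5, `vn_zone(120, 2.0, 60, 180, 200, 2.5)` lies in the normal envelope, `vn_zone(150, 3.0, 60, 180, 200, 2.5)` falls in the engineering margin and `vn_zone(150, 4.0, 60, 180, 200, 2.5)` exceeds the assumed physical limit. An automated system that only recognises the first category cannot distinguish the second from the third.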

Where this system is disrupted by an AI or algorithm driven system that is programmed within the regulatory limit, the operator loses a significant portion of control over the system. This control would be most important in an emergency in which the operator needed to respond outside the regulatory envelope but the system prevented them from doing so. AI driven machines are bounded by their inability to correctly frame a problem due to a lack of context. They are driven purely to identify patterns in data rather than, as an engineer does, identifying reasoning and applying engineering logic to the observed data. An engineer understands the wider context of the operating environment, applies engineering logic to changes and variables and makes assumptions, but also, sometimes more importantly, recognises when the conditions underlying those assumptions are absent. The potential consequences of AI and automated systems being unable to adapt to changes in the wider context are explored in the next section.
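The difference between an algorithm that is hard-bounded by the regulatory limit and one that leaves room for operator judgement can be shown in a few lines. In the hypothetical arbitration scheme sketched below, the automation clamps commands to the regulatory limit by default, but an explicit operator override raises the ceiling to the physical limit, allowing deliberate use of the engineering margin. The function and parameter names are assumptions for illustration, not a real control system interface.

```python
def arbitrate(requested, regulatory_max, physical_max, operator_override=False):
    """Return the command the system actually applies (hypothetical scheme).

    Default: clamp to the regulatory limit. With an explicit operator override,
    the ceiling rises to the physical limit, so the engineering margin between
    the two can be used deliberately; the physical limit is never exceeded.
    """
    if regulatory_max > physical_max:
        raise ValueError("regulatory limit must lie within the physical limit")
    ceiling = physical_max if operator_override else regulatory_max
    return min(requested, ceiling)
```

With a regulatory limit of 2.5 and a physical limit of 3.75, `arbitrate(3.0, 2.5, 3.75)` returns 2.5, whereas the same request with `operator_override=True` returns 3.0. A system without the override parameter corresponds to the situation described above, in which the operator cannot leave the regulatory envelope even in an emergency.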

6 Risks of operator removal in system management

In this section we discuss examples in which operator control of a system has been removed. The discussion of operator removal from control systems raises a fundamental point: removing the operator from engineering systems induces risk. We use the Boeing 737 MAX disasters to illustrate this. We also discuss how confusion between an automated system and the operator caused similar devastation in the Air France disaster.

In the Air France disaster of 2009, a cascade of confusion took place, with the central element being interoperability between the operator and the automated system. “According to a report issued on 5 July 2012, the Bureau of Investigation and Analysis found that ice crystals had misled the plane’s airspeed sensors and that the autopilot had disconnected. Confusion heightened when faulty instructions emerged from an automated navigational aid called the flight director. Amid a barrage of alarms, the crew struggled to control the plane manually, but they never understood that the aircraft was in a stall and never undertook the appropriate recovery maneuvers. In fact, they followed the flight director’s instructions and went into a climb instead of into a dive, as they should have to correct a stall” (Fred 2012). This is highlighted further in the Boeing 737 MAX disasters.

The 737 MAX series of disasters is the most prominent in public perception when we discuss AI and its implementation in aerospace. After these disasters, many issues were found, such as lack of training of the flight crew, lack of control over the AI system and faulty AI (Herkert et al. 2020). The unfortunate set of circumstances surrounding the loss of both Lion Air Flight 610 and Ethiopian Airlines Flight 302 led to the immediate grounding of the advertised “incredibly fuel-efficient” Boeing 737 MAX (Cioroianu et al. 2021).

In October 2018 and March 2019, Boeing 737 MAX passenger jets crashed minutes after takeoff; these two accidents claimed nearly 350 lives (Herkert et al. 2020). This led to Boeing 737 MAX aircraft being grounded across the globe. The crashes were attributed to the failure of an Angle of Attack (AOA) sensor and the subsequent activation of new flight control software, the Maneuvering Characteristics Augmentation System (MCAS) (Herkert et al. 2020).

Rather than designing a new airframe, Boeing decided to mount larger, more fuel-efficient engines onto the existing airframe. This meant the engines had to be mounted higher and farther forward on the wings than on previous models of the 737. This significantly changed the aerodynamics of the aircraft and created the possibility of a nose-up stall under certain flight conditions (Glanz et al. 2019). This change was not consistent with the existing automatic software. There were multiple explanations for the crashes, but only one was a design flaw, namely within the MAX’s new flight control software system designed to prevent stalls. The remaining explanations were ethical and political: internal pressure to keep pace with Boeing’s chief competitor, Airbus; Boeing’s lack of transparency about the new software; and the lack of adequate monitoring of Boeing by the Federal Aviation Administration (FAA), especially during the certification of the MAX model and following the first crash (Herkert et al. 2020).

Travis (2019), a qualified pilot and software engineer, states the following: “Neither such coders nor their managers are as in touch with the particular culture and mores of the aviation world as much as the people who are down on the factory floor, riveting wings on, designing control yokes, and fitting landing gears. Those people have decades of institutional memory about what has worked in the past and what has not worked. Software people do not”. The apparent gap in communication in the development of software systems that are then added onto complex platforms is concerning. Historically, system testing would have been done by operators and training would have been supplied, whereas in the 737 MAX disasters the operator was treated as quite separate from the software. Moreover, “The existence of the software, designed to prevent a stall due to the reconfiguration of the engines, was not disclosed to pilots until after the first crash. Even after that tragic incident, pilots were not required to undergo simulation training on the 737 MAX” (Herkert et al. 2020). Additionally, MCAS was not identified in the original documentation/training for 737 MAX pilots (Glanz et al. 2019).

There are ethical considerations when rules are constructed for the operation of complex platforms. Travis (2019) discusses the similarities between the 737 MAX and the Challenger (Footnote 4) disasters in terms of the operational process. “The Challenger accident came about not because people didn’t follow the rules but because they did. In the Challenger case, the rules said that they had to have prelaunch conferences to ascertain flight readiness. It didn’t say that a significant input to those conferences couldn’t be the political considerations of delaying a launch. The inputs were weighed, the process was followed, and a majority consensus was to launch. And seven people died” (Travis 2019).

In the 737 MAX case the system was also following rules. Travis (2019) states that “The rules said you couldn’t have a large pitch-up on power change and that an employee of the manufacturer, a DER (Footnote 5), could sign off on whatever you came up with to prevent a pitch change on power change. The rules didn’t say that the DER couldn’t take the business considerations into the decision-making process and 346 people are dead. It is likely that MCAS, originally added in the spirit of increasing safety, has now killed more people than it could have ever saved. It doesn’t need to be “fixed” with more complexity, more software. It needs to be removed altogether”.

The authority that the AI software system was given in the 737 MAX disasters effectively overruled and overwhelmed the pilots completely. According to Travis (2019), there was no way even to physically pull the stick back and take manual control, because the system was always on even when it was off. Such was the AI system’s adherence to the rule book that, as a consequence, two aircraft were lost.

This shows the crucial part that both operators and users play in the development of models, software and AI. The developer cannot develop and implement a system without knowing by whom it will be used and in what circumstances. It is inappropriate to design a system in which the regulatory rules embedded in the system are opposed to general operational practice. In trying to be safer, a platform can inadvertently become less safe as a result of two competing operators (Wang et al. 2020).

At this time there are roadmaps for AI in aviation, such as the EASA Roadmap (Roadmap 2020), and analyses of explainable AI in aviation (Shukla et al. 2020). This is attracting much discussion, but it also indicates that the development of AI in aviation still has significant challenges to overcome.

The design of any AI in complex systems needs to consider the operator and their ability to interact with the system and override it if necessary. Ultimately, the operator’s input is imperative to the safety of the platform. As has been seen, if the AI is programmed to regulatory standards but the operator needs to use engineering limits to avert a crisis, this will not be possible if there is no override for the AI system. The AI could therefore force a platform into a catastrophic situation by following the rulebook (Travis 2019). Whilst the introduction of AI or algorithms into maintenance may appear to carry a less acute risk, the lessons are apparent. Operator control and understanding of the systems are currently fundamental to safe and effective operation.
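One design pattern that keeps the operator in the loop when input data are suspect is to compare redundant sensors and, if they disagree, withdraw the automation's authority and alert the operator instead of acting on a single reading. The sketch below is hypothetical: the threshold and alert text are invented, and it is not a description of how MCAS or any certified flight control system works.

```python
def automation_authority(aoa_left_deg, aoa_right_deg, max_disagree_deg=5.0):
    """Decide whether an automatic function may act on angle-of-attack data.

    Hypothetical rule: if two independent sensors disagree by more than
    max_disagree_deg, the input cannot be trusted, so the automation stands
    down and the crew is alerted, instead of acting on either single reading.
    """
    if abs(aoa_left_deg - aoa_right_deg) > max_disagree_deg:
        return {"automation_active": False,
                "alert": "AOA sensor disagreement: manual control"}
    return {"automation_active": True, "alert": None}
```

The design choice is that uncertainty about the inputs hands authority back to the human, rather than leaving the automation to act confidently on data it cannot verify.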

Whilst the direct consequences of removing the engineer’s decision-making ability through the adoption of AI may differ somewhat between maintenance and control engineering, the ability to weigh up a decision to replace or maintain against operational conditions, potential safety consequences, cost and effort remains vitally important. If we reconsider the regulatory envelopes, any AI adopted in maintenance engineering would presumably be designed against these limits. Without explainability in the AI, the engineer may be unable to challenge its decisions even where this would be appropriate given the wider operational context. In the next section we discuss the possible implementation of AI-driven maintenance on subsurface nuclear vessels, and how the wider context may affect AI performance and be neglected in design.

7 The issues of predictive maintenance on complex platforms

In this section we discuss the complexities of subsurface nuclear vessels and the conditions necessary for the potential safe implementation of AI-based CBM.

Consider, for example, a gearbox on a naval platform. It faces added complexity because of the complexity of the systems it interfaces with, as well as intermittent and variable load conditions, external shocks and varying environmental conditions.

The authors of Cipollini et al. (2018) analysed several machine learning models applied to a naval propulsion system. They concluded that unsupervised AI models could achieve accurate modelling with fewer data points than current supervised models. However, the assumptions in this study strongly limit its applicability: the data were collected from a frigate simulator, assuming calm waters and no measurement uncertainty. In the true operating context, measurement errors and environmental variables are far from negligible in degradation modelling, as discussed below.

CBM of a system relies on a measurement technique (e.g. vibration analysis, acoustic emissions, infrared (IR) measurements) that is used to infer degradation. Inspecting and measuring the system condition, particularly indirectly (e.g. through sensors), introduces measurement errors, and inspection quality varies significantly with the measurement methodology. Whereas degradation is usually assumed to be monotonic, measurement errors are generally treated as white noise that does not accumulate over time (Ye and Xie 2015). The possibility of failing to detect a failure, as well as false positives (Footnote 6), is also an important consideration in CBM (Alaswad and Xiang 2017). The probability of a false negative (i.e. non-detection) depends on the failure cause (Badıa et al. 2002), and an unrevealed failure may lead to an unexpected functional or catastrophic failure. False positives, on the other hand, may lead to unnecessary maintenance and can increase the likelihood of imperfect or worse maintenance (Berrade et al. 2013). Measurement errors are often assumed in modelling to have constant characteristics, but in reality they depend on the degradation process; this dependence is very difficult to model effectively because of the mathematical complexities involved (Alaswad and Xiang 2017).
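To make the interplay between monotonic degradation, white-noise measurement error and false alarms more concrete, the sketch below simulates one component inspected at regular intervals. It assumes, purely for illustration, that degradation follows a gamma process (one common monotone model, whose increments are never negative) and that each inspection adds independent Gaussian noise; the threshold, noise level and increment parameters are hypothetical and not taken from any cited study. With zero noise the alarm is always correct, while increasing the noise produces both false positives and false negatives around the threshold.

```python
import random
from dataclasses import dataclass


@dataclass
class InspectionOutcome:
    false_positives: int  # alarm raised while the true degradation is below the threshold
    false_negatives: int  # no alarm although the true degradation has reached the threshold
    true_alarms: int      # alarm raised and the threshold really has been reached
    inspections: int


def simulate_inspections(shape_per_step=0.5, scale=1.0, noise_sd=1.0,
                         threshold=20.0, n_steps=60, seed=0):
    """Inspect a gamma-process degradation path through additive white noise.

    All parameter values are hypothetical and chosen only for illustration.
    """
    rng = random.Random(seed)
    true_level = 0.0
    fp = fn = tp = 0
    for _ in range(n_steps):
        # Monotone degradation: gamma-distributed increments are never negative.
        true_level += rng.gammavariate(shape_per_step, scale)
        # White-noise measurement error: drawn afresh at every inspection, so it
        # does not accumulate, unlike the degradation itself.
        measured = true_level + rng.gauss(0.0, noise_sd)
        alarm = measured >= threshold
        reached = true_level >= threshold
        if alarm and not reached:
            fp += 1
        elif reached and not alarm:
            fn += 1
        elif alarm and reached:
            tp += 1
    return InspectionOutcome(fp, fn, tp, n_steps)


for sd in (0.0, 1.0, 3.0):
    print(sd, simulate_inspections(noise_sd=sd))
```

Running the loop with several noise levels and seeds gives a rough feel for how quickly alarm quality degrades; it says nothing about the noise levels found on any real platform.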

The degradation rate of a system depends largely on environmental factors such as temperature, humidity, voltage and vibration. These cannot and should not be ignored, particularly where they are dominant. In submarine operating conditions, for example, equipment can be exposed to significant variations in atmosphere, vibration and operations, and the level of impact will depend on the equipment. Additionally, measurement errors are often modelled as mutually independent terms, but reality may be far more complex: measurement errors are often correlated with cyclic ambient environmental factors and with the degradation process itself (Li et al. 2018b).
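Li et al. (2018b) model measurement errors with an autoregressive structure on top of a nonlinear Wiener degradation process. The sketch below isolates only the error part and contrasts the usual independent assumption (phi = 0) with first-order autoregressive, AR(1), errors in which each error carries over part of the previous one. The parameter values are hypothetical, and the cyclic ambient term mentioned above is deliberately left out; the point is simply that the lag-1 autocorrelation of the errors, which independence assumes to be zero, can be estimated from residuals and checked.

```python
import random
import statistics


def ar1_errors(n, noise_sd=1.0, phi=0.0, seed=1):
    """Measurement errors e_t = phi * e_{t-1} + u_t with Gaussian innovations u_t.

    phi = 0 reproduces the usual independent (white-noise) assumption; phi > 0
    makes consecutive errors positively correlated. The innovation standard
    deviation is scaled so that the stationary standard deviation is noise_sd.
    """
    if not -1.0 < phi < 1.0:
        raise ValueError("phi must lie in (-1, 1) for a stationary AR(1) process")
    rng = random.Random(seed)
    innovation_sd = noise_sd * (1.0 - phi ** 2) ** 0.5
    e = rng.gauss(0.0, noise_sd)  # start from the stationary distribution
    errors = [e]
    for _ in range(n - 1):
        e = phi * e + rng.gauss(0.0, innovation_sd)
        errors.append(e)
    return errors


def lag1_autocorrelation(x):
    """Sample lag-1 autocorrelation of a sequence."""
    mean = statistics.fmean(x)
    num = sum((a - mean) * (b - mean) for a, b in zip(x, x[1:]))
    den = sum((a - mean) ** 2 for a in x)
    return num / den


for phi in (0.0, 0.8):
    print(phi, round(lag1_autocorrelation(ar1_errors(5000, phi=phi)), 2))
```

A degradation model that assumes independent errors would treat the correlated case as if each reading carried fresh information, which overstates the confidence of any trend fitted to it.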

The studies on wind turbines showed the importance of context (Rommel et al. 2020; Zhang et al. 2018); to build on their success and transfer their lessons to more complex systems, the significance of context needs to be acknowledged. Moreover, the validity of a model needs to be verified in different contexts (e.g. under different weather conditions), which would be difficult with black-box models that are not explainable. This raises the question of how useful highly accurate black-box models trained on simulated data really are, and indicates a need for: (a) training models on realistic data under a variety of changing environmental conditions, and (b) explainable models that enable an operator to understand a decision made by the system and, if necessary, override it. The importance of the last point has been shown by the unfortunate examples from the aerospace industry detailed in the previous section. Context is significant, and models should be explainable, so that when the context changes the operator or user understands whether the model is still valid.
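Point (b) can be illustrated with a deliberately simple design in which the model's recommendation is accompanied by the contribution of each input, and the operator can replace the recommended action provided a reason is recorded. The sketch assumes a linear health score, one of the simplest explainable model forms; the feature names, weights and threshold are hypothetical and are not drawn from any study cited here. It is a sketch of the interaction pattern, not a proposed CBM model.

```python
from dataclasses import dataclass, replace
from typing import Dict, Optional

# Hypothetical feature weights and alarm threshold, for illustration only; a real
# model would need to be fitted and validated in each operating context.
WEIGHTS: Dict[str, float] = {"vibration_rms": 0.6, "oil_debris": 0.3, "temperature_rise": 0.1}
THRESHOLD = 1.0


@dataclass(frozen=True)
class Recommendation:
    action: str                      # "maintain" or "continue"
    score: float                     # weighted health score
    contributions: Dict[str, float]  # per-feature share of the score, shown to the operator
    overridden_by_operator: bool = False
    operator_reason: Optional[str] = None


def recommend(features: Dict[str, float]) -> Recommendation:
    """Transparent linear score: each feature's contribution is reported explicitly."""
    contributions = {name: w * features[name] for name, w in WEIGHTS.items()}
    score = sum(contributions.values())
    action = "maintain" if score >= THRESHOLD else "continue"
    return Recommendation(action, score, contributions)


def override(rec: Recommendation, action: str, reason: str) -> Recommendation:
    """Let the operator replace the recommended action, recording why."""
    if not reason.strip():
        raise ValueError("an override must record the operator's reason")
    return replace(rec, action=action, overridden_by_operator=True, operator_reason=reason)


rec = recommend({"vibration_rms": 1.2, "oil_debris": 0.5, "temperature_rise": 0.4})
print(rec.action, rec.contributions)
final = override(rec, "maintain", "shock event outside the model's training conditions")
print(final.action, final.operator_reason)
```

Keeping the original recommendation alongside the override preserves an audit trail, so that disagreements between operator and model can later be used to check whether the model remains valid in the new context.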

Assuming that CBM measurements and modelling are reasonably accurate, CBM can offer significant benefits over a preventive maintenance approach. However, Xia et al. (2013) suggest that even a small measurement error may make CBM no better than a periodic preventive maintenance approach.

Condition monitoring relies on measurement techniques applied to a system or component to infer equipment health, such as acoustic or vibration monitoring, lubricant analysis, electrical measurements, thermography, torque measurements and pressure differentials (Yang et al. 2014; Ahmad and Kamaruddin 2012). In some circumstances system condition may be inferred from system performance and operating data (Shorten 2012; Ahmad and Kamaruddin 2012; Wang et al. 2017). The effectiveness of AI modelling in assessing RUL from CBM data will be largely system dependent. It will be determined by how predictable the system's degradation is, which is influenced by its susceptibility to environmental and operational factors and to external shocks, and by the reliability of the measurement techniques and their errors.
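As a point of reference for what an RUL estimate built on CBM measurements involves, the sketch below uses a far simpler model than any AI approach. It assumes that degradation follows a Wiener process with constant positive drift μ (one of the model families reviewed by Zhang et al. 2018), for which the expected time to rise from the current level x to a failure threshold D is (D − x)/μ. Here μ and x are taken from an ordinary least-squares trend through the measurements, which is a convenience rather than the maximum-likelihood estimator. The function names and data are hypothetical, and the model ignores correlated errors, environmental covariates and shocks, which is exactly why its output should be interrogated rather than accepted.

```python
import math


def fit_linear_trend(times, measurements):
    """Ordinary least-squares slope (drift) and intercept of measurements on time."""
    n = len(times)
    if n < 2 or n != len(measurements):
        raise ValueError("need at least two paired observations")
    t_mean = sum(times) / n
    y_mean = sum(measurements) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("inspection times must not all be equal")
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, measurements))
    slope = sxy / sxx
    return slope, y_mean - slope * t_mean


def mean_rul(times, measurements, threshold):
    """Expected time to reach `threshold` for a Wiener process with constant drift.

    For drift mu > 0 the first-passage time from level x to threshold D has mean
    (D - x) / mu. Inspection times are assumed to be in increasing order; mu and x
    come from the least-squares trend, which smooths but does not model the noise.
    """
    drift, intercept = fit_linear_trend(times, measurements)
    if drift <= 0:
        return math.inf  # no upward trend: the model gives no finite expected RUL
    current_level = intercept + drift * times[-1]
    return max(threshold - current_level, 0.0) / drift


print(mean_rul([0, 1, 2, 3, 4], [0.1, 0.9, 2.1, 2.9, 4.0], threshold=10.0))
```

The estimate is a single expected value; the spread of the first-passage time, and its sensitivity to measurement error, matter at least as much to a maintenance decision.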

It is unclear whether truly effective methods exist for AI-driven RUL models, even though there have been significant advances in the measurement of degradation, as seen in the wind turbine industry. Consequently, operator input must be retained in the link between CBM degradation measurements and RUL.

As the lessons from aerospace indicate, the gap between system and operator is a significant one, and there is still a significant gap between research and practical application for AI-driven CBM. For AI technology to be incorporated into engineering systems, especially complex systems, the operator, and the operator's ability to override the system, would need to be considered. Implementing AI-driven CBM, especially in complex systems, would require extensive validation of the system in the actual operational environment alongside involvement of the operator.

8 Discussion and conclusions

In this paper we discussed AI implementation on complex platforms in relation to system monitoring and control. We looked at systems of varying complexity, from simple gearboxes and medium-complexity wind turbines to complex aircraft and subsurface nuclear vessels.

In the following we discuss several aspects that are essential to progress towards safe implementation of AI into complex platforms: interdisciplinary working; reliable data; wider system considerations; regulatory, engineering and physical limits; industry and academic collaborations (Shao et al. 2020); and measurement of success.

Implementation of remote and automated systems in industry has seen mixed results, from success in the wind industry to failure in aerospace. The complexity of the platforms becomes a significant issue when intertwined with AI. Giving AI significant control over a platform or its systems, and removing the natural feel of the platform (Travis 2019) from the human operator, can have unintended and fatal consequences.

It is important to deploy robust systems that have been developed in context and with the operator and user at the heart of them (Oldfield et al. 2021; Oldfield and Haig 2021).

It is key to be able to collect all the relevant data, as discussed in Sect. 4, and to understand which parameters need to be collected and are important to the system (Akerkar 2014). This type of measurement may not yet be mature enough to enable the implementation of algorithm-based systems on complex platforms to compute RUL.

There are large differences between the regulatory limit, the engineering limit and the physical limit, and the discrepancy between the regulatory limit and the operator limit can lead to conflict. For example, to conduct an operational manoeuvre to save the platform, and indeed lives, an operator may go close to the physical limit of the system or its components, because an experienced specialist is aware of what can and cannot be achieved. The regulatory limit, if imposed through automation, takes away this discretion and does not allow potentially life-saving manoeuvres, or indeed variations on potentially safe manoeuvres, to occur. This is partly because we cannot program the AI for every eventuality it may face. Operators are drilled in simulators on rare events, but in a manner that follows regulation; in reality, what they do in response to multiple inputs might be very different. This indicates that we have far to go in developing systems that are able to remove control from a human. Indeed, the question is whether we should remove total control from the human in a complex system at all (Riley 2018; Degani and Wiener 1997). This concerns the shift in the decision-making process and is a lesson relevant to the adoption of AI across all aspects of engineering, including its potential implementation in maintenance.
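The contrast between an automated envelope fixed at the regulatory limit and one that the operator can deliberately extend can be made concrete with a toy limiter. The three limits are ordered as in the notes (the regulatory boundary below the physical one, with the engineering limit in between); their values are hypothetical and dimensionless, and the functions are illustrative names, not a model of MCAS or of any certified control law. The design choice illustrated is that an explicit override raises authority to the engineering limit but never to the physical limit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    regulatory: float   # regulatory envelope: the physical limit reduced by a safety factor
    engineering: float  # what the engineering is understood to tolerate
    physical: float     # failure of the component

    def __post_init__(self):
        if not 0 < self.regulatory <= self.engineering <= self.physical:
            raise ValueError("expected 0 < regulatory <= engineering <= physical")


def hard_envelope(demand: float, limits: Limits) -> float:
    """Automation without override: every demand is clamped to the regulatory limit."""
    return min(demand, limits.regulatory)


def envelope_with_override(demand: float, limits: Limits, operator_override: bool) -> float:
    """Regulatory limit by default; an explicit operator override raises the ceiling
    to the engineering limit, never to the physical limit."""
    ceiling = limits.engineering if operator_override else limits.regulatory
    return min(demand, ceiling)


limits = Limits(regulatory=2.5, engineering=3.2, physical=4.0)  # hypothetical values
print(hard_envelope(3.0, limits))                  # prints 2.5
print(envelope_with_override(3.0, limits, True))   # prints 3.0
```

In the first design the operator's demand of 3.0 can never be met, however well founded; in the second it can, while a margin to the physical limit is still preserved.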

Academic studies do not necessarily include input from stakeholders, operators or users that would provide context to the initial idea, which indicates the need for collaboration between academia and industry. The gearbox in the academic study is isolated, whereas the gearbox in a submarine is connected to a mass of systems, many of which are interdependent. There are also the operational, environmental and safety contexts to consider. In this situation it is difficult to see how AI-based maintenance systems could be applied without providing them with the knowledge and context of the operator. Indeed, the user would require full knowledge of the system before implementation. This was a significant failure in the Boeing 737 MAX AI implementation.

The studies in the wind turbine industry show that there is potential for advanced CBM to be a valuable maintenance method. These data may also be used to underpin AI systems, as we have shown can be done in simple systems. In general, and specifically in more complex systems, there seems to be a lack of understanding of how we could measure the success of complex AI-driven CBM systems and how we might implement them. Implementing AI systems at present carries substantial risks that would not be acceptable, especially in systems such as those on board a subsurface nuclear vessel (Modarres 2009).

Availability of data and materials

Not applicable

Code Availability

Not applicable

Notes

A physical boundary corresponds to the failure of the component, whereas the regulatory boundary often lies at a much lower level, because it is calculated from the physical boundary by applying a safety factor, and reaching it has no associated physical consequence.

Subsurface industries deal with operations that take place under the surface of the earth or oceans.

Here we use “operator” for the end operator of a system and “user” where the interaction is passive rather than active, i.e. an operator may be a pilot, and a user might be someone on the receiving end of a credit card decision.

The Space Shuttle Challenger disaster was a fatal incident in the United States’ space program that occurred on January 28, 1986, when the Space Shuttle Challenger (OV-099) broke apart 73 seconds into its flight, killing all seven crew members aboard.

Designated Engineering Representative (DER). This is an individual who holds an engineering degree or equivalent, possesses the necessary technical knowledge and experience, and meets specific qualification requirements.

A test result that wrongly indicates that a particular condition or attribute is present.

References

Ahmad R, Kamaruddin S (2012) An overview of time-based and condition-based maintenance in industrial application. Comput Ind Eng 63(1):135–149. https://doi.org/10.1016/j.cie.2012.02.002

Akerkar R (2014) Analytics on big aviation data: turning data into insights. Int J Comput Sci Appl 11(3):116–127

Alaswad S, Xiang Y (2017) A review on condition-based maintenance optimization models for stochastically deteriorating system. Reliab Eng Syst Saf 157:54–63. https://doi.org/10.1016/j.ress.2016.08.009

Albarbar A, Gu F, Ball AD (2010) Diesel engine fuel injection monitoring using acoustic measurements and independent component analysis. Measurement 43(10):1376–1386. https://doi.org/10.1016/j.measurement.2010.08.003

Allen T (2001) US Navy analysis of submarine maintenance data and the development of age and reliability profiles. Department of the Navy SUBMEPP

Badıa F, Berrade MD, Campos CA (2002) Optimal inspection and preventive maintenance of units with revealed and unrevealed failures. Reliab Eng Syst Saf 78(2):157–163. https://doi.org/10.1016/S0951-8320(02)00154-0

Berente N, Gu B, Recker J, Santhanam R (2019) Managing AI. Call for papers, MIS Quarterly, 1–5

Berrade MD, Scarf PA, Cavalcante CAV, Dwight RA (2013) Imperfect inspection and replacement of a system with a defective state: a cost and reliability analysis. Reliab Eng Syst Saf 120:80–87. https://doi.org/10.1016/j.ress.2013.02.024

Borth M, Hendriks M (2016) Engineering the smarts: an illustration of the disconnect between control engineering and AI. In: 2016 11th System of Systems Engineering Conference (SoSE), IEEE, pp 1–5

Brown JP (2016) The effect of automation on human factors in aviation. J Instrum Autom Syst 3(2):31–46

Chen C, Brown D, Sconyers C, Zhang B, Vachtsevanos G, Orchard ME (2012) An integrated architecture for fault diagnosis and failure prognosis of complex engineering systems. Expert Syst Appl 39(10):9031–9040. https://doi.org/10.1016/j.eswa.2012.02.050

Cioroianu I, Corbet S, Larkin C (2021) Guilt through association: reputational contagion and the Boeing 737-MAX disasters. Econ Lett 198:109657. https://doi.org/10.1016/j.econlet.2020.109657

Cipollini F, Oneto L, Coraddu A, Murphy AJ, Anguita D (2018) Condition-based maintenance of naval propulsion systems: data analysis with minimal feedback. Reliab Eng Syst Saf 177:12–23. https://doi.org/10.1016/j.ress.2018.04.015

Coronado DK (2014) Assessment and validation of oil sensor systems for on-line oil condition monitoring of wind turbine gearboxes. Proced Technol 15:747–754

Dalal SR, Fowlkes EB, Hoadley B (1989) Risk analysis of the space shuttle: pre-challenger prediction of failure. J Am Stat Assoc 84(408):945–957. https://doi.org/10.1080/01621459.1989.10478858

Degani A, Wiener EL (1997) Procedures in complex systems: the airline cockpit. IEEE Trans Syst Man Cybern Part A 27(3):302–312

Delvecchio S, Bonfiglio P, Pompoli F (2018) Vibro-acoustic condition monitoring of internal combustion engines: a critical review of existing techniques. Mech Syst Signal Process 99:661–683. https://doi.org/10.1016/j.ymssp.2017.06.033

Dui H, Zhang C, Zheng X (2020) Component joint importance measures for maintenances in submarine blowout preventer system. J Loss Prev Process Ind 63:104003. https://doi.org/10.1016/j.jlp.2019.104003

Engeler M, Treyer D, Zogg D, Wegener K, Kunz A (2016) Condition-based maintenance: model vs. statistics a performance comparison. Proced CIRP 57:253–258

Frangopol DM, Bocchini P, Decò A, Kim S, Kwon K, Okasha NM, Saydam D (2012) Integrated life-cycle framework for maintenance, monitoring, and reliability of naval ship structures. Nav Eng J 124(1):89–99

Fred HL (2012) Chaos in the cockpit: a medical correlative. Tex Heart Inst J 39(5):614

Glanz J, Creswell J, Kaplan T, Wichter Z (2019) After a Lion Air 737 MAX crashed in October, questions about the plane arose. The New York Times, 3 February

Goossens AJ, Basten RJ (2015) Exploring maintenance policy selection using the analytic hierarchy process; an application for naval ships. Reliab Eng Syst Saf 142:31–41

Herkert J, Borenstein J, Miller K (2020) The Boeing 737 MAX: lessons for engineering ethics. Sci Eng Ethics 26(6):2957–2974. https://doi.org/10.1007/s11948-020-00252-y

Jing L, Zhao M, Li P, Xu X (2017) A convolutional neural network based feature learning and fault diagnosis method for the condition monitoring of gearbox. Measurement 111:1–10

Jonge B, Teunter R, Tinga T (2017) The influence of practical factors on the benefits of condition-based maintenance over time-based maintenance. Reliab Eng Syst Saf 158:21–30. https://doi.org/10.1016/j.ress.2016.10.002

Kadak AC, Matsuo T (2007) The nuclear industry’s transition to risk-informed regulation and operation in the United States. Reliab Eng Syst Saf 92(5):609–618. https://doi.org/10.1016/j.ress.2006.02.004

Kane P, Andhare A (2016) Application of psychoacoustics for gear fault diagnosis using artificial neural network. J Low Freq Noise Vib Active Control 35(3):207–220. https://doi.org/10.1177/0263092316660915

Kane P, Andhare A (2020) Critical evaluation and comparison of psychoacoustics, acoustics and vibration features for gear fault correlation and classification. Measurement 154:107495. https://doi.org/10.1016/j.measurement.2020.107495

Knowledgebase (2019) Flight Envelope. https://www.uavnavigation.com/support/kb/general/general-system-info/flight-envelope. [Online; accessed 1-May-2021]

Li Y, Wang X, Liu Z, Liang X, Si S (2018a) The entropy algorithm and its variants in the fault diagnosis of rotating machinery: a review. IEEE Access 6:66723–66741. https://doi.org/10.3390/e22030367

Li J, Wang Z, Zhang Y, Liu C, Fu H (2018b) A nonlinear wiener process degradation model with autoregressive errors. Reliab Eng Syst Saf 173:48–57. https://doi.org/10.1016/j.ress.2017.11.003

Mann L, Saxena A, Knapp GM (1995) Statistical-based or condition-based preventive maintenance? J Qual Maint Eng. https://doi.org/10.1108/13552519510083156

Mobley R (1990) An introduction to preventive maintenance: plant engineering series. Van Nostrand, New York

Modarres M (2009) Advanced nuclear power plant regulation using risk-informed and performance-based methods. Reliab Eng Syst Saf 94(2):211–217. https://doi.org/10.1016/j.ress.2008.02.019

Mokashi A, Wang J, Vermar A (2002) A study of reliability-centred maintenance in maritime operations. Mar Policy 26(5):325–335

Oldfield M, Haig E (2021) Analytical modelling and UK government policy. AI and Ethics. https://doi.org/10.1007/s43681-021-00078-9

Oldfield M, Gardner A, Smith AL, Steventon A, Coughlan E (2021) Ethical funding for trustworthy AI: proposals to address the responsibilities of funders to ensure that projects adhere to trustworthy ai practice. AI and Ethics. https://doi.org/10.1007/s43681-021-00069-w

Prateepasen A, Kaewwaewnoi W, Kaewtrakulpong P (2011) Smart portable noninvasive instrument for detection of internal air leakage of a valve using acoustic emission signals. Measurement 44(2):378–384

Riley V (2018) Operator reliance on automation: theory and data. Automation and human performance: theory and applications. CRC Press, London, pp 19–35

Roadmap, Artificial Intelligence (2020) A human-centric approach to AI in aviation. Eur Aviat Saf Agency 1:1–30

Rommel D, Di Maio D, Tinga T (2020) Calculating wind turbine component loads for improved life prediction. Renew Energy 146:223–241. https://doi.org/10.1016/j.renene.2019.06.131

Shao Z, Yuan S, Wang Y (2020) Institutional collaboration and competition in artificial intelligence. IEEE Access 8:69734–69741

Shorten D (2012) Marine machinery condition monitoring. Why has the shipping industry been slow to adopt? Technical Investigations Department (ed.). Lloyd’s Register EMEA

Shukla B, Fan I-S, Jennions I (2020) Opportunities for explainable artificial intelligence in aerospace predictive maintenance. PHM Soc Eur Conf 5:11–11. https://doi.org/10.1080/01621459.2017.1307116

Stott J, Britton P, Ring R, Hark F, Hatfield G (2018) Common cause failure modeling: aerospace versus nuclear. In: 10th International Probabilistic Safety Assessment and Management Conference, No. M10-0548, 2010

Sullenberger C (2019) My Letter to the Editor of New York Times Magazine. http://www.sullysullenberger.com/my-letter-to-the-editor-of-new-york-times-magazine/. Accessed 01 May 2021

Takata S, Kimura F, Houten FJ, Westkamper E, Shpitalni M, Ceglarek D, Lee J (2004) Maintenance: changing role in life cycle management. CIRP Ann 53(2):643–655

Tchakoua P, Wamkeue R, Ouhrouche M, Slaoui-Hasnaoui F, Tameghe TA, Ekemb G (2014) Wind turbine condition monitoring: state-of-the-art review, new trends, and future challenges. Energies 7:2595–2630. https://doi.org/10.3390/en7042595

Tran VT, AlThobiani F, Tinga T, Ball A, Niu G (2018) Single and combined fault diagnosis of reciprocating compressor valves using a hybrid deep belief network. Proc Inst Mech Eng C 232(20):3767–3780. https://doi.org/10.1177/0954406217740929

Travis G (2019) How the Boeing 737 max disaster looks to a software developer. IEEE Spectr 18:2–10

Wang L, Qian Y, Li Y, Liu Y (2017) Research on CBM information system architecture based on multi-dimensional operation and maintenance data. IEEE Int Conf Progn Health Manag (ICPHM). https://doi.org/10.1109/ICPHM.2017.7998323

Wang D, Churchill E, Maes P, Fan X, Shneiderman B, Shi Y, Wang Q (2020) From human-human collaboration to human-AI collaboration: designing AI systems that can work together with people. In: Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, pp 1–6

Widodo A, Yang B-S (2007) Support vector machine in machine condition monitoring and fault diagnosis. Mech Syst Signal Process 21(6):2560–2574. https://doi.org/10.1016/j.ymssp.2006.12.007

Wu F, Niknam SA, Kobza JE (2015) A cost effective degradation-based maintenance strategy under imperfect repair. Reliab Eng Syst Saf 144:234–243

Xia T, Xi L, Zhou X, Lee J (2013) Condition-based maintenance for intelligent monitored series system with independent machine failure modes. Int J Prod Res 51(15):4585–4596. https://doi.org/10.1080/00207543.2013.775524

Yang W, Tavner PJ, Crabtree CJ, Feng Y, Qiu Y (2014) Wind turbine condition monitoring: technical and commercial challenges. Wind Energy 17(5):673–693. https://doi.org/10.1002/we.1508

Ye Z-S, Xie M (2015) Stochastic modelling and analysis of degradation for highly reliable products. Appl Stoch Model Bus Ind 31(1):16–32. https://doi.org/10.1002/asmb.2063

Zang Y, Shangguan W, Cai B, Wang H, Pecht MG (2019) Methods for fault diagnosis of high-speed railways: a review. Proce Inst Mech Eng Part O 233(5):908–922. https://doi.org/10.1177/1748006X18823932

Zhang Z, Si X, Hu C, Lei Y (2018) Degradation data analysis and remaining useful life estimation: a review on wiener-process-based methods. Eur J Oper Res 271(3):775–796. https://doi.org/10.1016/j.ejor.2018.02.033

Funding

Not applicable

Author information

Contributions

Not applicable

Ethics declarations

Conflict of interest

Not applicable

Ethical approval

Not applicable

Consent to participate

Not applicable

Consent for publication

Not applicable

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Oldfield, M., McMonies, M. & Haig, E. The future of condition based monitoring: risks of operator removal on complex platforms. AI & Soc 39, 465–476 (2024). https://doi.org/10.1007/s00146-022-01521-z

DOI: https://doi.org/10.1007/s00146-022-01521-z