Abstract

The cardinal features of inflammation were identified many centuries ago, and the subsequent demonstration of the cellular basis of these features first relied upon light microscopy and the development of histological processes. As the complexity of inflammation becomes more understood, tools are required to access ever smaller and deeper compartments of tissue, in vitro and in vivo, in animals and in man, at the same time maintaining specificity for the particular process under investigation. Whilst imaging modalities can rely solely upon the endogenous features of the tissue under investigation, commonly some form of contrast agent is added, and the qualities of the tissue, the imaging technique and the contrast agent can be exploited together to answer specific questions about inflammation.

Final manuscript submitted on April 18, 2018.

1 Introduction

Molecular imaging refers to “the visualization, characterization, and measurement of biological processes at the molecular and cellular levels in humans and other living systems” [1]. The imaging of individual molecules is beyond the resolution of the vast majority of imaging technologies, but the application of specific “contrast” agents can amplify molecular processes, so they can be detected by systems otherwise not equipped to resolve at the molecular level.

Classically, in clinical practice, diseases have been observed as the late physiological manifestations of much earlier biological and molecular changes, and imaging has consisted primarily of detecting structural change [2]. Molecular imaging has the potential for earlier detection, translating laboratory understanding of disease processes into the clinical arena where strategies may be used for diagnosis and surveillance, as well as treatment planning and monitoring.

The vast majority of imaging modalities employ electromagnetic radiation, which is transmitted by the elementary particle: the photon. Like all elementary particles, the photon exhibits wave-particle duality, that is, it possesses the characteristics of both a wave and a particle. Travelling as a wave, it has a wavelength, a frequency and an energy. As wavelength increases, frequency and photon energy fall. The electromagnetic spectrum covers all possible types of electromagnetic radiation and ranges from short wavelength, high-frequency gamma rays, through the visible portion of the spectrum, through microwaves to radio waves, which are low frequency and long wavelength. The majority of imaging technologies described in this chapter use energy from the electromagnetic spectrum and have characteristics that rely upon the features of the wave that is used to obtain the image (Fig. 18.1).
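The inverse relationship between wavelength and photon energy can be made concrete with the Planck relation E = hc/λ, which is standard physics rather than something stated in this chapter. The Python sketch below is illustrative only; the function name and the example wavelengths are assumptions chosen for the demonstration.

```python
# Illustrative sketch: photon energy from wavelength via E = h * c / wavelength.
PLANCK_J_S = 6.62607015e-34         # Planck constant (J s), exact SI value
LIGHT_SPEED_M_S = 299_792_458.0     # speed of light in vacuum (m/s), exact SI value
ELECTRON_VOLT_J = 1.602176634e-19   # joules per electronvolt, exact SI value


def photon_energy_ev(wavelength_nm: float) -> float:
    """Return the energy of a single photon (in eV) for a wavelength in nm."""
    if wavelength_nm <= 0:
        raise ValueError("wavelength must be positive")
    wavelength_m = wavelength_nm * 1e-9
    return PLANCK_J_S * LIGHT_SPEED_M_S / wavelength_m / ELECTRON_VOLT_J


if __name__ == "__main__":
    # Hypothetical example wavelengths spanning part of the spectrum.
    examples = [("X-ray", 0.1), ("green light", 500.0), ("radio wave (1 m)", 1e9)]
    for label, wavelength_nm in examples:
        print(f"{label}: {photon_energy_ev(wavelength_nm):.3g} eV per photon")
```

Shorter wavelengths give larger photon energies, which is why the high-frequency end of the spectrum is the ionizing end.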

2 Considerations of Choosing an Imaging Modality

Each imaging modality has its own unique characteristics that make it more or less suitable for the application at hand. A few characteristics are outlined below.

2.1 Resolution

RESOLUTION refers to the ability of an imaging system to separate two distinct points when presenting an image to the user and can be classified into spatial (or angular) resolution, temporal resolution and contrast resolution. Spatial resolution refers to the ability of an imaging system to resolve two points in space and is divided into two components, lateral and axial resolution: lateral resolution refers to two points side-by-side in a plane perpendicular to the wave used to obtain the image, and axial resolution refers to two points side-by-side along the axis of the wave. Temporal resolution refers to the ability to resolve two points separated only in time and is especially important in real-time imaging and where the source material is mobile relative to the detection device. Contrast resolution refers to the ability of an imaging system to detect different intensities of signal from a sample and then portray them accurately to the investigator. An example of contrast resolution is the dynamic range of a system, which refers to the ratio of the largest to the smallest usable signal that is transmitted from object to user. Whilst extremes of resolution are fundamentally limited by the physics of the wave used to obtain an image, they can often be adjusted over a large working range to meet the requirements of a particular experiment.

2.2 Depth of Penetration

Photons travelling in a vacuum will travel unimpeded with no change in direction or energy. If a particle is placed in the path of the beam of photons, some will be absorbed and some will be scattered. The beam of photons continuing on from the particle will have been attenuated. The rate at which a beam is attenuated depends upon the energy of the beam, the number of barrier particles and the capability of those particles to absorb or scatter that particular beam. For a beam or wave to be absorbed, there must be subatomic particles within the medium with energy levels that correspond to the photon energy contained within the wave. Low-frequency waves such as radio waves pass straight through the human body because the body contains no material with the appropriate energy level to absorb them. Visible light is readily absorbed or scattered, so it is attenuated very rapidly, and penetration depth is limited to just a few millimetres. As the wavelength shortens further towards X-rays and gamma rays, there is less and less material to absorb or scatter the high-energy photons, and penetration depth again becomes very high.
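Attenuation of this kind is often approximated by the Beer-Lambert exponential model. The chapter does not name that model, so it is introduced here as an assumption, and it only holds for a homogeneous medium with a single attenuation coefficient. The function names and the coefficient used in the example are hypothetical.

```python
import math


def transmitted_fraction(attenuation_per_mm: float, depth_mm: float) -> float:
    """Fraction of incident intensity remaining after depth_mm of tissue.

    Assumes a homogeneous medium with one combined (absorption plus
    scattering) attenuation coefficient: I / I0 = exp(-mu * x).
    """
    if attenuation_per_mm < 0 or depth_mm < 0:
        raise ValueError("coefficient and depth must be non-negative")
    return math.exp(-attenuation_per_mm * depth_mm)


def depth_for_fraction(attenuation_per_mm: float, fraction: float) -> float:
    """Depth (mm) at which the beam has fallen to the given fraction."""
    if attenuation_per_mm <= 0 or not 0 < fraction <= 1:
        raise ValueError("need a positive coefficient and 0 < fraction <= 1")
    return -math.log(fraction) / attenuation_per_mm


if __name__ == "__main__":
    mu = 1.0  # hypothetical attenuation coefficient, per mm
    for depth in (0.5, 1.0, 3.0):
        print(f"{depth} mm: {transmitted_fraction(mu, depth):.3f} of the beam remains")
```

Real tissue is heterogeneous and strongly scattering, so this is a first approximation rather than a predictive model.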

2.3 Safety

Higher frequency waves in the electromagnetic spectrum such as ultraviolet, X- and gamma rays have sufficient energy to ionize molecules within the sample, which can lead to biological toxicity including DNA mutation. Some lasers used in optical imaging could potentially cause tissue destruction through the generation of heat in an extremely small area. In general, the power of lasers used to investigate biological tissue is not in the order of magnitude required to produce tissue damage. Whilst there are specific safety concerns with some of the imaging modalities detailed below such as eye safety when using magnets and lasers, they predominantly concern the investigator rather than the biological sample under investigation.

2.4 Image Processing, Storage and Analysis

Apart from the simplest light microscopes, most device detectors convert analogue continuous signal into a digital signal, which is used for storage and analysis. Because our eyes and brains cannot interpret digital images directly, they are converted back to analogue for visual interpretation. Each stage in the processing may lead to the introduction of error or noise, which may lead to misinterpretation. Analysis is inherently subjective, and methodologies that entirely remove sources of bias are fraught with technical difficulty; procedures of image acquisition, processing and analysis should therefore be subject to critical appraisal. Imaging devices are also capable of producing an enormous quantity of data in a short space of time. Consideration should be given to the file format used for storage where no international standard is established, as data loss can occur with some lossy compression algorithms (which reduce file size by eliminating information judged to be unnecessary) such as JPEG and MPEG. Even if data are economically stored, analysts can easily become overwhelmed by the acquired data, and even the simplest interpretation can become time-consuming.
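One source of error introduced by analogue-to-digital conversion is quantization. The sketch below is a minimal illustration, assuming a signal normalized to the range 0 to 1 and a uniform quantizer; the function names and bit depths are hypothetical and do not describe any particular detector.

```python
def quantize(value: float, bits: int) -> int:
    """Map an analogue value in [0, 1] to the nearest of 2**bits integer levels."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must lie in [0, 1]")
    if bits < 1:
        raise ValueError("bits must be at least 1")
    max_level = 2 ** bits - 1
    return round(value * max_level)


def reconstruct(level: int, bits: int) -> float:
    """Convert a stored integer level back to a value in [0, 1]."""
    return level / (2 ** bits - 1)


def worst_case_error(bits: int) -> float:
    """Largest possible rounding error: half of one quantization step."""
    return 0.5 / (2 ** bits - 1)


if __name__ == "__main__":
    sample = 0.3712  # hypothetical analogue intensity
    for bits in (4, 8, 16):
        restored = reconstruct(quantize(sample, bits), bits)
        print(f"{bits}-bit: error {abs(restored - sample):.2e}, "
              f"bound {worst_case_error(bits):.2e}")
```

A higher bit depth reduces the rounding error but increases the volume of data to be stored.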

3 Contrast Agents for Molecular Imaging

Whilst many insights into inflammation and other biological processes can be gained during label-free imaging, the development of contrast agents has vastly increased the capability of molecular imaging. Each molecular imaging agent comprises a targeting segment that confers localization and a component that facilitates imaging by the chosen imaging modality [3]. The primary function is to identify a particular biological process occurring at a molecular or cellular level and amplify it to produce a signal which is detectable by modalities which otherwise do not possess an adequate resolution. The scope of molecular imaging agents is vast, from progressing the understanding of disease right across the experimental spectrum of in vitro to in vivo work, to high throughput drug discovery and toxicity assays, to clinical applications in the search of person-specific disease detection, stratification and treatment. Careful design of the contrast agent is imperative and is a multidisciplinary undertaking. There are four broad considerations in the selection and design of a contrast agent for a particular experiment (Box 18.1).

Box 18.1: Considerations in the Selection and Design of a Contrast Agent

What is the method of localization to the target of interest?

There are three main types of agent. Ones that:

- Bind directly to the target molecule.
- Accumulate by the molecular or cellular activity of the target.
- Become detectable only upon activation by the target.

What is the nature of the biological sample?

- Will the agent be able to access the target?
- Is the sample fixed?
- For in vivo work, will pharmacokinetic issues permit sufficient exposure of the target to the agent?

What are the limitations of the imaging system in question?

- Does the resolution of the system deliver adequate information to answer the question at hand?
- Is there adequate depth of penetration to image the molecular agent?

Will the agent cause any adverse effects?

- Will the agent cause artifact that leads to false interpretation?
- Are there any tissue pharmacodynamic or toxicological issues?

4 Imaging Modalities

There are numerous molecular imaging modalities available, each with advantages and disadvantages. They can be classified into optical, magnetic resonance imaging, nuclear medicine and ultrasound techniques [4]. An overview of some of the more common imaging modalities is provided in Table 18.1.

4.1 Optical Imaging

OPTICAL IMAGING relies upon light from the ultraviolet, visible and infrared portions of the electromagnetic spectrum. Ultraviolet light has a short wavelength in the region of about 100–400 nm, whilst infrared light has a wavelength of up to approximately 1 mm. The spatial resolution of an optical system depends upon the wavelength of the light in use and the characteristics of the lens used to focus the image on the detector.

4.1.1 An Overview of Light Microscopy

Light from an infinitely sharp point on a sample, even when perfectly focused through an objective onto a detector, diffracts as it propagates and blurs to a disk of finite size on the detector. The pattern of blurring as light propagates from the objective to the detector is known as the point spread function (PSF), and it forms a series of concentric light and dark rings as it strikes the detector. This two-dimensional representation of the infinitely sharp point is known as the Airy disk. As two distinct points on the sample get closer together, their Airy disks on the detector begin to overlap, and there comes a point (known as the Rayleigh criterion) where they cannot be resolved from one another. For an optical system with no aberration, resolution is therefore said to be diffraction limited, and Abbe’s diffraction limit states:

$$d = \frac{\lambda}{2 n \sin\theta}$$

where d is the minimum resolvable feature size, λ is the wavelength, n is the refractive index of the medium in which images are obtained and θ is half the angular aperture of the objective lens. n sin θ is known as the numerical aperture (NA) and is determined by the technical specification of the microscope objective. Numerical apertures rarely exceed approximately 1.4 [5]. Considering a system with NA = 1, then d = λ/2, and for green light with a wavelength of 500 nm, the minimum resolvable feature size is approximately 250 nm. Axial resolution is typically worse than lateral resolution for a given wavelength because light is usually only collected from one side of the sample, and so a large amount of information is lost. This limitation can be overcome by illuminating and collecting light from additional locations [5].
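The worked example for NA = 1 can be reproduced with a few lines of Python using Abbe's limit, d = λ/(2 n sin θ) = λ/(2 NA). This is a sketch only: the function names are hypothetical, and θ is taken to be the half-angle of the cone of light accepted by the objective.

```python
import math


def numerical_aperture(refractive_index: float, half_angle_deg: float) -> float:
    """NA = n * sin(theta), with theta the half-angle of the acceptance cone."""
    return refractive_index * math.sin(math.radians(half_angle_deg))


def abbe_limit_nm(wavelength_nm: float, na: float) -> float:
    """Smallest resolvable lateral feature size (nm) for a given wavelength and NA."""
    if wavelength_nm <= 0 or na <= 0:
        raise ValueError("wavelength and NA must be positive")
    return wavelength_nm / (2.0 * na)


if __name__ == "__main__":
    # Green light at 500 nm, first with NA = 1 (the example in the text),
    # then with NA = 1.4, near the practical upper limit for objectives.
    for na in (1.0, 1.4):
        print(f"NA = {na}: d = {abbe_limit_nm(500.0, na):.0f} nm")
```

Raising the numerical aperture or shortening the wavelength are the only two ways to lower d within a diffraction-limited system.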

The main advantages conferred by using the visible spectrum to obtain microscopic images are the low cost and simplicity of the system, the ability to multiplex many different wavelengths into one image and the inherent safety, although ultraviolet wavelengths do cause tissue damage. Another restriction placed upon optical imaging by the properties of the light wave is the lack of penetration depth, which is just a few millimetres for visible light. Infrared microscopes extend the penetration depth to several centimetres at the expense of a reduction in resolution. Penetration depth may also be increased by measuring ballistic photons, which are not scattered or absorbed on their passage through a turbid medium such as biological tissue, but this requires detectors that are extremely sensitive and time resolved especially at tissue depths beyond a few millimetres.

4.1.2 An Overview of Fluorescence Microscopy

FLUORESCENCE occurs when an orbital electron of a substance (known as a FLUOROPHORE) becomes excited by the absorbance of a photon (or photons) and subsequently relaxes to its resting state through the emission of a photon. For absorbance to occur, the photon must have energy approximately equal to the difference between the excited and ground state of the electron. Once an orbital electron is excited, there are several potential fates. By far the most common is a return to the ground state through radiation-free dissipation of energy in the form of heat. Occasionally, however, the electron will return to its ground state by emitting a photon. The emitted photon will have a lower energy and longer wavelength than the absorbed photon due to the liberation of some energy through non-radiative relaxation by the excited electron before photon emission, a phenomenon known as Stokes shift. Each fluorophore has characteristic excitation and emission spectra, which describe the efficiency of photon absorption and emission as the wavelength of stimulating light varies. The time taken for a photon to be emitted after the fluorophore has absorbed energy is known as the fluorescence lifetime and is independent of concentration of fluorophore or the intensity of the stimulating light, unlike the quantity of liberated photons (Fig. 18.2).

Jablonski’s diagram of energy states of a molecule. For an electron to leave the ground state and enter an excited state, absorbance has to occur. This event happens when the energy of the photon is approximately equal to the difference between the ground and the excited state. The excited electron will return to the ground state the majority of times, emitting a photon at a lower energy and longer wavelength. The time it takes for the emitted photon to be released from the moment the electron was excited is known as the fluorescence lifetime.

Lasers are often used to stimulate fluorophores as they produce monochromatic light, and the appropriate part of the excitation spectrum of a fluorophore can be targeted without spilling over into other wavelengths. Lasers also produce collimated light, which means each point on a biological sample can be precisely stimulated without affecting adjacent tissue. Light-emitting diodes (LEDs) provide a cheaper and more reliable alternative to lasers at the expense of a non-collimated (i.e. naturally diverging) beam of light, less power and emission over a slightly broader range of wavelengths.

The precision of fluorophore behaviour and the technical accuracy and simplicity of fluorescence excitation and emission detection systems combine to make fluorescence an extremely potent field for the multiplexing of detectable information from within the same biological sample.

4.1.3 Some Strategies and Examples for Molecular Imaging Using Fluorescent Agents

Fluorophores conjugated to a molecule that binds a particular target present by far the commonest and simplest method for molecular targeting. There are thousands of biological ligand and fluorophore combinations available commercially, and typically samples must be washed before imaging in order to remove unbound fluorophore. Examples include cell surface marker and nucleic acid stains used in cellular imaging.

Fluorescent imaging agents can be designed specifically to accumulate in the tissue of interest. Examples include fluorescent agents conjugated to large molecular dendrimeric constructs. Such agents have been used to provide spatiotemporal imaging of the process of phagocytosis [6].

Fluorescent imaging agents can be designed to remain silent until reaching the target of interest. Strategies include relying upon environmental changes [7] or employing a quenching mechanism. An example of quenching would be a Förster resonance energy transfer (FRET) quenched system. FRET refers to the radiationless transfer of photon energy from one fluorophore to another through dipole-dipole interactions [8]. A FRET quenched system employs photon donor and acceptor moieties in close proximity. The donor moiety absorbs a photon as normal, but the energy is transferred to the acceptor, which then either emits a photon with wavelength outside of the range of interest or returns to a ground state through non-radiative means [9]. The efficiency of a FRET system is proportional to 1/R^6, where R is the donor-acceptor distance, so the effect disappears rapidly as the two moieties become separated. FRET systems have been employed in many biological applications. One example is the investigation of spatiotemporal function in enzyme biology. A molecular agent for a specific protease may comprise a FRET quenched system that is separated by a specific peptide and does not yield fluorescence until the peptide sequence is digested by a specific protease and the components of the FRET system become separated in space [6, 10].
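The steep distance dependence can be illustrated with the standard Förster expression E = 1/(1 + (R/R0)^6). The Förster radius R0 (the distance at which transfer is 50% efficient) is not mentioned in the chapter and is introduced here as an assumption, as are the function name and the 5 nm example value.

```python
def fret_efficiency(distance_nm: float, forster_radius_nm: float) -> float:
    """Energy-transfer efficiency E = 1 / (1 + (R / R0)**6)."""
    if distance_nm < 0 or forster_radius_nm <= 0:
        raise ValueError("distance must be >= 0 and the Forster radius > 0")
    return 1.0 / (1.0 + (distance_nm / forster_radius_nm) ** 6)


if __name__ == "__main__":
    r0 = 5.0  # hypothetical Forster radius in nm
    for distance in (2.5, 5.0, 10.0):
        print(f"R = {distance} nm: E = {fret_efficiency(distance, r0):.3f}")
```

Doubling the separation from R0 to 2 R0 reduces the efficiency from 0.5 to about 0.015, which is why cleavage of a short linker peptide is enough to restore donor fluorescence.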

In addition to the general considerations of selecting a molecular agent for a particular application, the following additional concerns apply to fluorescent imaging agents:

- What is the process of fluorophore excitation and emission and how can this be managed amongst other competing exogenous fluorophores?
- What are the autofluorescent characteristics of endogenous biological molecules and how can these be de-convolved from the molecular imaging agents?

Whilst exploiting the ability of optical platforms to multiplex numerous different fluorophores into one investigation, there is a requirement to maintain specificity. One problem is the broadness of the spectra of many fluorophores, which confers a degree of overlap between the excitation of one fluorophore and the detection of another, thereby introducing a potential source of error. There are several potential strategies that may be employed to overcome this such as the use of tandem dyes, quantum dots or the temporal separation of the interrogation of each fluorophore. Tandem dyes use a FRET system to transfer excitation energy from a donor to an acceptor, which emits a photon at a wavelength that the donor alone could not produce. The development of quantum dots has led to brighter fluorophores with much narrower spectra, which allows multiple dots to be used without spilling over into the emission of other fluorophores, although these dots are known to exhibit cellular toxicity [11].

4.1.4 Confocal Microscopy

One of the main limitations in normal (or widefield) light microscopy is the collection of out-of-focus light onto the detector, which reduces the axial resolution of the collected image as this light is overlaid on the focused image. CONFOCAL MICROSCOPY overcomes this limitation by placing a pinhole in the path of collected light, thereby preventing out-of-focus light from reaching the detector. Modern confocal microscopy combines widefield light microscopy with laser scanning confocal microscopy, whereby lasers of a particular wavelength are sequentially scanned across the entire field of view so the fluorescence of each point, at each wavelength, can be built up to yield a multi-faceted image relying on the endogenous fluorescence of the sample and on the properties of exogenously applied fluorescent compounds. 3D images can be developed by accurately moving the objective up and down, so-called z-stacking.

4.1.5 Multiphoton Microscopy

MULTIPHOTON MICROSCOPY or multiphoton excitation microscopy is a non-linear optical imaging technique. Two-photon microscopy is an example of multiphoton microscopy and refers to the excitation of a fluorophore by the simultaneous arrival of two relatively low-energy photons. Both photons stimulate the same electron to a higher energy level than a single photon alone. When the electron relaxes, the fluorophore emits a photon that has a shorter wavelength than the exciting photons. Such non-linear excitation requires enormous photon flux, and therefore stimulating photons from an infrared laser are focused to a point in the sample, which also minimizes photo damage. It is only at this point that there are a sufficient number of photons to produce appropriate stimulation of the fluorophore. In this way off-target signal is rejected by restricting fluorescence excitation volume rather than by restricting fluorescence collection volume, which is the case for confocal microscopy [12]. Two-photon microscopy is well established for in vitro molecular imaging and has been used during intravital imaging, typically through a body surface window, such as the investigation of neutrophil infiltration during lung injury [13] and imaging of the gastrointestinal tract [14] (Fig. 18.3).

Two-photon microscopy. Images taken from normal small intestine with white light imaging (a) and two-photon microscopy (b). Two-photon microscopy generates a more detailed image, providing an insight into the cellular morphology of the small intestine as opposed to the tissue outline of white light imaging. Adapted from Grosberg et al. [14]
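Because photon energy is inversely proportional to wavelength, the combined energy of simultaneously absorbed photons corresponds to a single photon of shorter wavelength. The sketch below illustrates that arithmetic; the function name and the 800 nm example are hypothetical, and real two-photon excitation spectra do not follow this idealized rule exactly.

```python
def combined_excitation_wavelength_nm(*photon_wavelengths_nm: float) -> float:
    """Wavelength of one photon carrying the summed energy of the inputs.

    Energy is proportional to 1 / wavelength, so energies add as
    reciprocal wavelengths.
    """
    if not photon_wavelengths_nm or any(w <= 0 for w in photon_wavelengths_nm):
        raise ValueError("provide one or more positive wavelengths")
    return 1.0 / sum(1.0 / w for w in photon_wavelengths_nm)


if __name__ == "__main__":
    # Two hypothetical 800 nm infrared photons arriving together.
    equivalent = combined_excitation_wavelength_nm(800.0, 800.0)
    print(f"Equivalent single-photon excitation: {equivalent:.0f} nm")
```

The emitted fluorescence is Stokes shifted from this equivalent wavelength, yet still lies at a shorter wavelength than the infrared excitation light.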

4.1.6 Fluorescence Molecular Tomography

There remains an experimental need to study thick or whole biological tissue samples. Even if fluorescent light can be detected at the surface of a sample, it cannot be assigned to a precise location deep to the surface because of the aforementioned effects of scatter and absorption, which cannot be predicted or modelled in anything but the most uniform of transmission media. FLUORESCENCE MOLECULAR TOMOGRAPHY comprises a detector and narrow excitation beam, which is scanned over the sample in order to collect photons which have propagated through the tissue [15]. Through the collection of multiple data points from multiple orientations, a tomographic three-dimensional image can be developed, albeit with relatively low resolution. The images are still limited by the depth of photon penetration, and so infrared light and infrared-stimulated fluorophores are often used which can be detected at a depth of several centimetres [16]. Examples of the use of this technology have been the detection of specific protease activity [17, 18] and the tracking of inflammatory cells in inflammatory disorders [19]. The technique is often limited to small animal work because of depth penetration restrictions but has found use in some clinical applications especially in breast cancer because of the low attenuation of infrared light by breast tissue [20].

4.1.7 Raman Spectroscopy

In contrast to fluorescence microscopy where molecules absorb photons to become electronically excited, Raman scattering occurs when a molecule interacts with a photon and changes its vibrational excitation. Typically when this occurs, the molecule will scatter a photon at the same wavelength of the absorbed photon (elastic or Rayleigh scattering). Much less frequently, the molecule will scatter a photon with a different wavelength (fewer than 1 in 19 of all incident photons), either longer (Stokes shifted) or shorter (anti-Stokes shifted). The precise shift is unique to each molecule under investigation and can be used to build a unique “fingerprint” spectrum for each. The information derived from the Raman spectrum from a particular point in the sample can be overlaid on traditional light microscopy images to give additional label-free information about the nature of the biological sample [21]. RAMAN SPECTROSCOPY has been employed to provide label-free live imaging of inflammatory cells [22] and stochastic surveillance of apoptosis [23] (Fig. 18.4).

Confocal Raman microscopy (CRM). CRM is a cellular imaging technique which provides an insight into the molecular composition of a cell. Figure (a) is a CRM image of a neutrophil with (b) a hierarchical cluster that visualizes regions with high Raman spectral similarities. Sections with high lipid content in (a) and (b) are seen as red and blue regions, respectively. Lipids have been shown to be powerful mediators of inflammation in leukocytes. Figure (c) shows the average Raman spectra from the various colours seen in (b). The strong bands (1267, 1441, 1658) are Raman shift peaks associated with lipids. Adapted from van Manen et al. [24]
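Raman shifts are conventionally reported as a difference in wavenumber between the incident and the scattered light. The sketch below converts between shift and scattered wavelength, assuming that the band positions quoted in the caption are in cm^-1 and using a hypothetical 532 nm excitation laser; neither assumption is stated in the chapter.

```python
def stokes_wavelength_nm(excitation_nm: float, shift_cm1: float) -> float:
    """Wavelength of Stokes-scattered light for a Raman shift given in cm^-1."""
    excitation_wavenumber = 1e7 / excitation_nm  # convert nm to cm^-1
    scattered_wavenumber = excitation_wavenumber - shift_cm1
    if scattered_wavenumber <= 0:
        raise ValueError("shift exceeds the energy of the excitation photon")
    return 1e7 / scattered_wavenumber


def raman_shift_cm1(excitation_nm: float, scattered_nm: float) -> float:
    """Raman shift (cm^-1); positive for Stokes, negative for anti-Stokes."""
    return 1e7 / excitation_nm - 1e7 / scattered_nm


if __name__ == "__main__":
    laser_nm = 532.0  # hypothetical excitation wavelength
    for band in (1267.0, 1441.0, 1658.0):  # band positions from the caption
        print(f"{band:.0f} cm^-1 -> {stokes_wavelength_nm(laser_nm, band):.1f} nm")
```

Because the shift is expressed relative to the excitation line, the same molecular band appears at the same wavenumber shift whichever laser is used.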

4.1.8 Fibre-Based Imaging

One method of overcoming the limited penetration depth of optical imaging modalities is simply to access the tissue under investigation using an optical fibre. White light widefield endoscopes have been used for many years to provide macroscopic imaging of any endoscopically accessible biological tissue. A fibre optic cable is a bundle of many thousand individual optical fibres, with each fibre employing an inner core and outer cladding, each with a different refractive index. The interface of the outer cladding and the inner core provides the opportunity for total internal reflection to propagate light from one end of the fibre to the other. It is possible to add a gradient index (GRIN) lens to add focusing ability to the distal tip of the fibre bundle, but this adds bulk and reduces tissue accessibility. Fibre bundles without lenses therefore have a fixed and very short working distance. The lateral resolution of the transmitted image in FIBRE-BASED IMAGING is restricted by the thickness of the fibre cladding, because thicker cladding moves the light-conducting inner cores further apart. Thinner cladding leads to optical cross talk as light leaks from one core to an adjacent core, although this problem can be overcome by randomly aligning the cores or illuminating each core in random sequence at the proximal end of the fibre. Each fibre can be calibrated to adjust for intrinsic autofluorescence, and each individual fibre is mapped before use to improve image accuracy. Fibre bundles may include a channel for the local delivery of contrast agents and molecular agents. Optical endomicroscopy has been used in human patients for label-free imaging of the gastrointestinal tract [25] or lung architecture [26] but also for the detection of neutrophilic inflammation in the diseased human lung [27].

Fibres have long lent themselves to fluorescent imaging [28], but two-photon microscopy [29, 30], fluorescence lifetime imaging and Raman spectroscopy [31] as well as hybrid devices [32] have also been demonstrated.
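The condition for total internal reflection at the core-cladding interface follows from Snell's law. The sketch below is a simplified illustration that assumes a step-index fibre used in air; the function names and refractive indices are hypothetical and do not describe any particular fibre bundle.

```python
import math


def critical_angle_deg(n_core: float, n_cladding: float) -> float:
    """Smallest angle of incidence (from the normal) giving total internal reflection."""
    if n_cladding >= n_core:
        raise ValueError("the core index must exceed the cladding index")
    return math.degrees(math.asin(n_cladding / n_core))


def fibre_numerical_aperture(n_core: float, n_cladding: float) -> float:
    """NA of a step-index fibre in air: sqrt(n_core**2 - n_cladding**2)."""
    if n_cladding >= n_core:
        raise ValueError("the core index must exceed the cladding index")
    return math.sqrt(n_core ** 2 - n_cladding ** 2)


if __name__ == "__main__":
    n_core, n_cladding = 1.48, 1.46  # hypothetical silica-like indices
    print(f"Critical angle: {critical_angle_deg(n_core, n_cladding):.1f} degrees")
    print(f"Numerical aperture: {fibre_numerical_aperture(n_core, n_cladding):.3f}")
```

Light striking the interface at an angle larger than the critical angle stays within the core, which is what allows an image to be relayed along the bundle.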

4.1.9 Super-Resolution Microscopy

Abbe’s diffraction limit remains the theoretical limit for spatial resolution, but several technologies have been developed to circumvent the diffraction limit for SUPER-RESOLUTION MICROSCOPY and provide novel insights into molecular biology.

4.1.9.1 Near-Field Scanning Optical Microscopy

Because diffraction only occurs when light has propagated into the far field (i.e. beyond a distance equal to one wavelength of the illuminating light), near-field imaging has been used to avoid the diffraction limit entirely [33, 34]. The sample is placed in the near field, adjacent to the device aperture, and light is collected before it begins to diffract. Lateral resolutions in the order of 20–50 nm have been achieved [35], far better than that of a typical diffraction-limited light microscope. However, because imaging is limited to the diffraction-free near field, the depth of penetration into the sample is extremely small, and because the aperture used to illuminate the sample and collect light is tiny, image construction is time-consuming.

4.1.9.2 Super Resolution by Spatially Patterned Excitation

Spatially patterned excitation super-resolution microscopy covers several different techniques including STIMULATED EMISSION DEPLETION (STED) MICROSCOPY. Although the stimulation laser excites a large number of fluorophores, a second laser is employed to drive all off-target fluorophores (i.e. those away from the centre of the stimulation area) into their dark state. The remaining fluorescence emanates only from those fluorophores in the very centre of the excitation field [36]. Lateral resolutions of approximately 30 nm have been demonstrated, but image construction is again relatively time-consuming.

Imaging typically takes place in fixed, prepared tissue, although it is possible to image live tissue; for example, combination with a two-photon technique provides a better depth of penetration [37].

4.1.9.3 Super Resolution by Single-Molecule Imaging

By using fluorescent agents that are able to switch rapidly and reversibly from fluorescent to dark states, it is possible to resolve two points that would otherwise be too close, by distinguishing them in time and collating images to generate a resolution which beats the diffraction limit [5]. Examples of this approach include stochastic optical reconstruction microscopy [38] (STORM) and photoactivated localization microscopy [39] (PALM). For the assumption that each collected source of light does not overlap with another to hold, there must only be a few activated fluorophores per imaging frame. Several thousand frames are therefore required to build up a resolved image of even the smallest field of view, which increases the time taken for image acquisition. Brighter fluorophores (less time required to produce a detectable number of photons), rapidly switchable lasers and high-density data analysis will lead to shorter acquisition times and facilitate the imaging of live cellular processes which occur over short time scales (Fig. 18.5) [41].

Stimulated emission depletion (STED) microscopy. The images show the tagging and detection of SNAP-25, a plasma membrane protein, on a neuronal cell with confocal (conf) and stimulated emission depletion (STED) microscopy. STED provides super resolution by spatially patterned excitation and is not limited by diffraction, resulting in a clearer image of SNAP-25 in comparison to confocal microscopy. Adapted from Willig et al. [40]

4.2 Non-optical Imaging

4.2.1 Magnetic Resonance Imaging

Magnetic resonance imaging (MRI) uses powerful magnetic fields to align magnetically susceptible spinning protons in the specimen, before deflecting them from their axis with a radio wave. As the protons return to their alignment, they emit a radio signal that can be detected by multiple receiver coils placed throughout the scanner. The signal from a relaxing proton has two components: the time taken to relax to its original vector (T1 relaxation) and the time taken for axial spin to return to normal (T2 relaxation). Different tissues relax with different time constants, and the received information is used to build up a greyscale image composed of multiple tissue slices [42]. Whilst there are no known biological hazards associated with the use of MRI, the equipment remains bulky and expensive, and there are safety hazards associated with the inadvertent presence of loose ferrous metal within the scanning zone. MRI remains invaluable for the imaging of tissue structure and diseases associated with structural deviation, but relative to optical technologies, the resolution is poor, and there are few contrast agents to target molecular and cellular processes. Contrast agents that do exist need to be paramagnetic in order to be susceptible to the magnetic field of the scanner and most work by shortening the T1 relaxation time of nearby protons [43]. Gadolinium is commonly used in clinical investigations to provide additional structural contrast. Super-paramagnetic particles of iron oxide (SPIO) or ultrasmall super-paramagnetic particles of iron oxide (USPIO) have been used as contrast agents [44]. When injected, they are taken up by macrophages, which allows these cells to be tracked to sites of inflammation including atherosclerotic plaque formation [45] and rupture [46], and they can be conjugated in a variety of ways to study biological processes [47].
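The effect of a T1-shortening contrast agent can be sketched with the standard exponential relaxation equations that apply after a 90 degree excitation pulse. These equations are textbook approximations not given in the chapter, and the function names and the time constants used below are hypothetical.

```python
import math


def longitudinal_recovery(time_ms: float, t1_ms: float) -> float:
    """Fraction of equilibrium longitudinal magnetization recovered: 1 - exp(-t / T1)."""
    if time_ms < 0 or t1_ms <= 0:
        raise ValueError("time must be >= 0 and T1 > 0")
    return 1.0 - math.exp(-time_ms / t1_ms)


def transverse_decay(time_ms: float, t2_ms: float) -> float:
    """Fraction of transverse magnetization remaining: exp(-t / T2)."""
    if time_ms < 0 or t2_ms <= 0:
        raise ValueError("time must be >= 0 and T2 > 0")
    return math.exp(-time_ms / t2_ms)


if __name__ == "__main__":
    read_time_ms = 500.0
    # Hypothetical T1 values for the same tissue without and with contrast agent.
    for label, t1 in (("without agent", 1000.0), ("with agent", 400.0)):
        signal = longitudinal_recovery(read_time_ms, t1)
        print(f"{label}: {signal:.2f} of equilibrium magnetization recovered")
```

A shorter T1 means more magnetization has recovered at a given read time, so tissue that has taken up the agent appears brighter on T1-weighted images.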

4.2.2 Nuclear Medicine

4.2.2.1 Single-Photon Emission Computed Tomography (SPECT)

SPECT functions in a similar way to simple planar gamma camera imaging (scintigraphy), in that it relies upon the emission of high-energy photons (gamma rays) from a decaying radionuclide that has been conjugated to a biologically relevant ligand. Unlike scintigraphy, which has a fixed camera, the rotational gantry used in SPECT provides additional photon vector information, which allows the production of 3D images with better resolution. SPECT scanners can use radionuclides with long half-lives that have been widely available since the advent of nuclear imaging, such as technetium-99m and gallium-67, which have been extensively used to study inflammation when paired with a biologically functional conjugate [48]. SPECT and CT scanners can be incorporated onto the same gantry to provide accurate structural information about the location of the biological process of interest (Fig. 18.6).
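The practical meaning of a long or short half-life can be shown with a short worked calculation. The sketch below applies the usual exponential decay law; the half-lives are approximate, commonly quoted physical values that are not stated in this chapter, and fluorine-18 (the PET radionuclide discussed in the next section) is included only for contrast.

```python
def fraction_remaining(elapsed_hours, half_life_hours):
    """Fraction of the original activity left after elapsed_hours of physical decay."""
    return 0.5 ** (elapsed_hours / half_life_hours)


# Approximate physical half-lives in hours (assumed reference values).
HALF_LIFE_HOURS = {
    "Tc-99m": 6.0,
    "Ga-67": 78.3,
    "F-18": 1.83,
}

TRANSPORT_HOURS = 6.0  # hypothetical delay between production and injection

for nuclide, half_life in HALF_LIFE_HOURS.items():
    left = fraction_remaining(TRANSPORT_HOURS, half_life)
    print(f"{nuclide}: {left:.1%} of activity left after {TRANSPORT_HOURS:g} h")
```

With these assumed values, gallium-67 retains most of its activity after a six-hour delay and technetium-99m retains half, whereas only about a tenth of the fluorine-18 activity remains.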

Single-photon emission computed tomography (SPECT). SPECT can be used to evaluate myocardial perfusion and viability to assess inducible ischemia due to flow-limiting coronary stenosis. This is achieved by intravenously administering minute quantities of radioactive tracer and recording images after exercise. Ischemia attributable to stenosis is observed in the first image of a man with angina after exercise and is reversed after a period of rest (arrows). Adapted from He et al. [49]

4.2.2.2 Positron Emission Tomography (PET)

Like SPECT, PET relies upon the production of gamma rays from a decaying radionuclide that has been conjugated to a biologically active molecule. The radionuclide is carried to its target of interest by the biologically active conjugate and emits a positron as it undergoes radioactive decay. Within a small distance of emission, the positron annihilates with an electron, resulting in the emission of two gamma rays (high-energy photons) in opposite directions, which are detected by a rotating gamma camera [50, 51]. Because the two photons are produced close to the location of positron emission and because they are detected in coincidence, the location of emission can be determined with a higher degree of accuracy than with SPECT imaging. The distance travelled by the positron before annihilation occurs is the predominant limiting factor of spatial resolution. Radionuclides suitable for PET typically have a very short half-life, so they need to be manufactured in an on-site cyclotron, increasing expense and restricting use to research or specialist centres. Several suitable radionuclides exist, but by far the most commonly used is fluorine-18, which is usually incorporated into a biologically inactive analogue of glucose (18-fluorodeoxyglucose, 18FDG) and so accumulates in rapidly metabolizing tissues such as cancer or inflammatory cells; it has a wide repertoire of clinical uses [52]. Like SPECT, PET scanners can be combined with CT scanners to provide excellent co-localized structural information (Fig. 18.7) [54].
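The idea of coincidence detection can be sketched in a few lines. This is a deliberately simplified illustration rather than a description of any real scanner: the event format, the detector indices and the 5 ns timing window are all hypothetical, and real systems must also handle scattered and random coincidences.

```python
from typing import List, Tuple

Event = Tuple[float, int]  # (arrival time in ns, detector index), hypothetical format


def find_coincidences(events: List[Event], window_ns: float) -> List[Tuple[int, int]]:
    """Pair consecutive single events that hit different detectors within window_ns."""
    ordered = sorted(events)  # sort by arrival time
    pairs = []
    i = 0
    while i < len(ordered) - 1:
        t_first, det_first = ordered[i]
        t_second, det_second = ordered[i + 1]
        if t_second - t_first <= window_ns and det_first != det_second:
            # Both photons are attributed to one annihilation event.
            pairs.append((det_first, det_second))
            i += 2
        else:
            # Unpaired single event: discard it and move on.
            i += 1
    return pairs


events = [(0.0, 3), (2.0, 19), (50.0, 7), (120.0, 11), (123.0, 27)]
pairs = find_coincidences(events, window_ns=5.0)
print(pairs)  # [(3, 19), (11, 27)]
```

Each accepted pair defines a line between two detectors along which the annihilation is assumed to have occurred; the unpaired event at 50 ns is rejected.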

Positron emission tomography and computed tomography (PET/CT). The images show combined computed tomography (CT) and positron emission tomography (PET) imaging of a mouse model of deep vein thrombosis (DVT). Neutrophil-depleted mice (left) showed a decreased signal (yellow arrow) in comparison to the control (right), suggesting the importance of neutrophils in thrombus-associated inflammation. White arrow = sham-operated jugular vein. Adapted from Hara et al. [53]

4.3 Non-electromagnetic Imaging

4.3.1 Ultrasound

Sound waves, unlike radiation on the electromagnetic spectrum, are mechanical waves and require a medium through which to be propagated. Ultrasound refers to any sound frequency above the limit of human hearing, about 20 kHz, but medical ultrasound machines typically use sound with frequencies in the range of 1–20 MHz. In the head of the ultrasound transducer, the application of a precise electric current across a piezoelectric crystal transduces electrical energy to mechanical energy in the form of sound waves. The sound waves are propagated through the tissue of interest and scattered at the interfaces of tissues with different acoustic impedance. Some scattered sound reflects back to the transducer, which converts the returning mechanical energy back to an electric current to be processed into a visible image. The timing and intensity with which sound is reflected back depend upon the type and depth of tissue in the path of the beam. Higher-frequency, shorter-wavelength sound waves provide better spatial resolution than lower-frequency waves but lack penetration depth because of acoustic attenuation (Fig. 18.8). Ultrasound is low in cost and complexity, compact and readily transportable, and has no biological safety concerns, but there is a paucity of biological contrast agents. Microbubbles have long been used as molecular contrast agents in ultrasound, and recent advances in manufacturing technology have enabled the conjugation of microbubbles to a biological ligand, increasing the specificity to particular biological targets [56].
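The trade-off between resolution and penetration can be made concrete with a rough calculation. The sketch below assumes an average speed of sound in soft tissue of 1540 m/s and a rule-of-thumb attenuation of 0.5 dB per cm per MHz; both are commonly quoted approximations rather than values from this chapter, and the function names are illustrative.

```python
SPEED_OF_SOUND_M_PER_S = 1540.0       # assumed average for soft tissue
ATTENUATION_DB_PER_CM_PER_MHZ = 0.5   # assumed rule-of-thumb value


def wavelength_mm(frequency_mhz):
    """Wavelength in tissue; a shorter wavelength permits finer spatial resolution."""
    return SPEED_OF_SOUND_M_PER_S / (frequency_mhz * 1e6) * 1e3


def reflector_depth_cm(echo_time_us):
    """Depth of a reflector from the round-trip echo time (pulse travels there and back)."""
    return SPEED_OF_SOUND_M_PER_S * (echo_time_us * 1e-6) / 2.0 * 100.0


def round_trip_loss_db(frequency_mhz, depth_cm):
    """Approximate attenuation of an echo returning from depth_cm."""
    return ATTENUATION_DB_PER_CM_PER_MHZ * frequency_mhz * 2.0 * depth_cm


for f_mhz in (1.0, 5.0, 20.0):
    print(
        f"{f_mhz:>4.0f} MHz: wavelength {wavelength_mm(f_mhz):.3f} mm, "
        f"loss from 5 cm depth {round_trip_loss_db(f_mhz, 5.0):.0f} dB"
    )
```

Under these assumptions, moving from 1 MHz to 20 MHz shortens the wavelength twentyfold but also increases the echo loss from a given depth twentyfold (in dB), which is why high-frequency probes are reserved for superficial structures.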

Ultrasound. The images show ultrasound imaging of the ileum of a healthy individual (a) and a patient with Crohn’s disease (b). In a healthy individual the ileum has a thin wall (arrows) as opposed to the thick wall seen in a diseased ileum. C caecum, * iliac vessel. Adapted from Dillman et al. [55]

4.3.2 Hybrid Systems

Virtually any of the modalities described can be hybridized to produce techniques that confer additional advantages whilst overcoming some of the disadvantages of each technique used alone. Images from two or more different technologies need to be co-registered in order to provide accurate and precise information that is both reliably quantified and localized. As acquisition speeds increase, device sizes fall and processing power grows, these devices become ever more useful. Molecular agents can be designed to offer detection through more than one technique, reducing the number of agents that need to be applied [57]. Examples include PET-Optical [58, 59] and MRI-Optical [60, 61]. If structural information is not already available, then any single or hybrid technique can be coupled with a structural imaging technique such as CT or MRI. One hybrid technique that does not result from the co-registration of images is photoacoustic imaging.
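Co-registration can be illustrated with a toy example. The sketch below recovers a whole-pixel translation between two small images by exhaustive search; it is only an illustration of the idea, since practical registration of images from different modalities must also handle rotation, scaling, sub-pixel shifts and differing contrast. The function names and the test image are hypothetical.

```python
def shift_image(image, dy, dx):
    """Circularly shift a 2D list by dy rows and dx columns."""
    rows, cols = len(image), len(image[0])
    return [
        [image[(r - dy) % rows][(c - dx) % cols] for c in range(cols)]
        for r in range(rows)
    ]


def estimate_shift(reference, moving, max_shift=2):
    """Find the (dy, dx) that, applied to `moving`, best matches `reference`."""
    best, best_error = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            candidate = shift_image(moving, dy, dx)
            # Sum of squared differences between the two images.
            error = sum(
                (a - b) ** 2
                for row_ref, row_cand in zip(reference, candidate)
                for a, b in zip(row_ref, row_cand)
            )
            if error < best_error:
                best, best_error = (dy, dx), error
    return best


# A 5 x 5 "structural" image with two bright pixels.
reference = [[0] * 5 for _ in range(5)]
reference[1][1] = 9
reference[1][2] = 4

# The "functional" image is the same scene displaced by one row and two columns.
moving = shift_image(reference, 1, 2)

correction = estimate_shift(reference, moving)
print(correction)  # (-1, -2)
```

Applying the estimated correction to the displaced image restores the alignment, so a signal in one image can be assigned to the right structure in the other.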

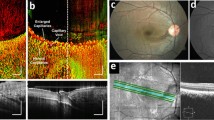

4.3.3 Photoacoustic

Unlike hybrid systems, which co-register two images from two independent techniques, photoacoustic imaging generates a single image by combining optical excitation and acoustic detection [62]. Tissue is excited by a pulsed laser, which induces a small temperature change. Thermo-elastic expansion occurs and creates a pressure wave, which can be detected by one or more ultrasonic transducers outside the specimen, and the information is processed to build up a tomographic image. Because detection relies on ultrasound, only the absorption spectrum of the target tissue is important, not the emission spectrum, and fewer photons need to be absorbed to produce an acoustic effect. Photoacoustic imaging can be implemented in a variety of ways, including via endoscopy [63], with spatial resolution being approximately equal to 1/200th of the imaging depth, which can reach 7 cm [62]. Contrast agents that absorb infrared light avidly can be used, and their structure can be manipulated to alter their physical and biological effects [64]. Commercially available fluorescent dyes, active in the infrared region, have also been used for their photoacoustic properties, for example, for the detection of matrix metalloproteinase activity in atherosclerotic plaque formation (Fig. 18.9) [65].
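The scaling of resolution with depth quoted above can be turned into a short worked calculation. The sketch uses only the two figures given in the text (a depth-to-resolution ratio of about 200 and a maximum depth of about 7 cm); the function name is illustrative, and the results are order-of-magnitude estimates rather than specifications of any instrument.

```python
DEPTH_TO_RESOLUTION_RATIO = 200.0  # from the text: resolution ~ depth / 200
MAX_DEPTH_CM = 7.0                 # from the text: depth can reach about 7 cm


def approx_resolution_um(depth_cm):
    """Approximate spatial resolution in micrometres at a given imaging depth."""
    depth_um = depth_cm * 1e4  # 1 cm = 10,000 micrometres
    return depth_um / DEPTH_TO_RESOLUTION_RATIO


for depth_cm in (0.1, 1.0, MAX_DEPTH_CM):
    print(f"depth {depth_cm:g} cm -> resolution ~{approx_resolution_um(depth_cm):.0f} um")
```

On this estimate, imaging at 1 mm depth resolves features of roughly 5 µm, whereas at the 7 cm limit the resolution coarsens to roughly 350 µm.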

Multispectral photoacoustic tomography. The images show human carotid plaques obtained by multispectral photoacoustic tomography technology. A matrix metalloproteinase (MMP)-sensitive, activatable fluorescent probe was applied to samples (a) and (b) in order to detect MMP levels as elevated expression is associated with plaque instability compared to control (c). Adapted from Razansky et al. [65]

5 Conclusion

Imaging has progressed from the simplest light microscope to complex and multiplexed hybrid systems with capability across the entire experimental spectrum from the bench to the clinic. Remarkable insights into inflammatory processes have been achieved, and the discipline continues to develop rapidly as funders recognize the ability of such techniques to improve understanding of disease and clinical care. One emerging field of molecular imaging is the possible merger of therapeutic and diagnostic imaging agents, so investigators might visualize the delivery of a novel therapeutic and determine its disease-modulating ability [56].

References

Mankoff D. A definition of molecular imaging. J Nucl Med. 2007;48(18N):21N.

Weissleder R, Mahmood U. Molecular imaging. Radiology. 2001;219:316–33.

Miller JC, Thrall JH. Clinical molecular imaging. J Am Coll Radiol. 2004;1:4–23.

Pysz MA, Gambhir SS, Willmann JK. Molecular imaging: current status and emerging strategies. Clin Radiol. 2010;65:500–16.

Huang B, Bates M, Zhuang X. Super-resolution fluorescence microscopy. Annu Rev Biochem. 2009;78:993–1016.

Avlonitis N, Debunne M, Aslam T, et al. Highly specific, multi-branched fluorescent reporters for analysis of human neutrophil elastase. Org Biomol Chem. 2013;11:4414–8.

Akram AR, Avlonitis N, Lilienkampf A, et al. A labelled-ubiquicidin antimicrobial peptide for immediate in situ optical detection of live bacteria in human alveolar lung tissue. Chem Sci. 2015;6:6971–9.

Ding S, Cargill A, Das S, et al. Biosensing with Förster resonance energy transfer coupling between fluorophores and nanocarbon allotropes. Sensors. 2015;15:14766–87.

Le Reste L, Hohlbein J, Gryte K, Kapanidis AN. Characterization of dark quencher chromophores as nonfluorescent acceptors for single-molecule FRET. Biophys J. 2012;102:2658–68.

Gehrig S, Mall MA, Schultz C. Spatially resolved monitoring of neutrophil elastase activity with ratiometric fluorescent reporters. Angew Chem Int Ed Engl. 2012;51:6258–61.

Hoshino A, Hanada S, Yamamoto K. Toxicity of nanocrystal quantum dots: the relevance of surface modifications. Arch Toxicol. 2011;85:707–20.

Oheim M, Michael DJ, Geisbauer M, et al. Principles of two-photon excitation fluorescence microscopy and other nonlinear imaging approaches. Adv Drug Deliv Rev. 2006;58:788–808.

Kreisel D, Nava RG, Li W, et al. In vivo two-photon imaging reveals monocyte-dependent neutrophil extravasation during pulmonary inflammation. Proc Natl Acad Sci U S A. 2010;107:18073–8.

Grosberg LE, Radosevich AJ, Asfaha S, et al. Spectral characterization and unmixing of intrinsic contrast in intact normal and diseased gastric tissues using hyperspectral two-photon microscopy. PLoS One. 2011;6:e19925.

Stuker F, Ripoll J, Rudin M. Fluorescence molecular tomography: principles and potential for pharmaceutical research. Pharmaceutics. 2011;3:229–74.

Ntziachristos V. Fluorescence molecular imaging. Annu Rev Biomed Eng. 2006;8:1–33.

Kossodo S, Zhang J, Groves K, et al. Noninvasive in vivo quantification of neutrophil elastase activity in acute experimental mouse lung injury. Int J Mol Imaging. 2011;2011:581406.

Ntziachristos V, Tung C-H, Bremer C, Weissleder R. Fluorescence molecular tomography resolves protease activity in vivo. Nat Med. 2002;8:757–61.

Larmann J, Frenzel T, Hahnenkamp A, et al. In vivo fluorescence-mediated tomography for quantification of urokinase receptor-dependent leukocyte trafficking in inflammation. Anesthesiology. 2010;113:1.

Ntziachristos V, Bremer C, Weissleder R. Fluorescence imaging with near-infrared light: new technological advances that enable in vivo molecular imaging. Eur Radiol. 2003;13:195–208.

Movasaghi Z, Rehman S, Rehman IU. Raman spectroscopy of biological tissues. Appl Spectrosc Rev. 2007;42:493–541.

Bauer M, Popp J. Toward a spectroscopic hemogram: Raman spectroscopic differentiation of the two most abundant leukocytes from peripheral blood. Anal Chem. 2012;84:5335–42.

Zoladek A, Pascut FC, Patel P, Notingher I. Non-invasive time-course imaging of apoptotic cells by confocal Raman micro-spectroscopy. J Raman Spectrosc. 2011;42:251–8.

van Manen H-J, Kraan YM, Roos D, Otto C. Single-cell Raman and fluorescence microscopy reveal the association of lipid bodies with phagosomes in leukocytes. Proc Natl Acad Sci U S A. 2005;102:10159–64.

Napoléon B, Lemaistre A-I, Pujol B, et al. A novel approach to the diagnosis of pancreatic serous cystadenoma: needle-based confocal laser endomicroscopy. Endoscopy. 2014;47:26–32.

Thiberville L, Salaün M, Lachkar S, et al. Confocal fluorescence endomicroscopy of the human airways. Proc Am Thorac Soc. 2012;6:444–9.

Craven T, Walton T, Akram A, et al. In-situ imaging of neutrophil activation in the human alveolar space with neutrophil activation probe and pulmonary optical endomicroscopy. Lancet. 2016;387:S31.

Flusberg BA, Cocker ED, Piyawattanametha W, et al. Fiber-optic fluorescence imaging. Nat Methods. 2005;2:941–50.

Gu M, Kang H, Li X. Breaking the diffraction-limited resolution barrier in fiber-optical two-photon fluorescence endoscopy by an azimuthally-polarized beam. Sci Rep. 2014;4:3627.

Helmchen F, Denk W, Kerr JND. Miniaturization of two-photon microscopy for imaging in freely moving animals. Cold Spring Harb Protoc. 2013;2013:904–13.

Santos LF, Wolthuis R, Koljenović S, et al. Fiber-optic probes for in vivo Raman spectroscopy in the high-wavenumber region. Anal Chem. 2005;77:6747–52.

Dochow S, Ma D, Latka I, et al. Combined fiber probe for fluorescence lifetime and Raman spectroscopy. Anal Bioanal Chem. 2015;407:8291–301.

Dürig U, Pohl DW, Rohner F. Near-field optical-scanning microscopy. J Appl Phys. 1986;59:3318.

de Lange F, Cambi A, Huijbens R, et al. Cell biology beyond the diffraction limit: near-field scanning optical microscopy. J Cell Sci. 2001;114:4153–60.

Huckabay HA, Armendariz KP, Newhart WH, et al. Near-field scanning optical microscopy for high-resolution membrane studies. Methods Mol Biol. 2013;950:373–94.

Blom H, Widengren J. STED microscopy—towards broadened use and scope of applications. Curr Opin Chem Biol. 2014;20:127–33.

Takasaki KT, Ding JB, Sabatini BL. Live-cell superresolution imaging by pulsed STED two-photon excitation microscopy. Biophys J. 2013;104:770–7.

Rust MJ, Bates M, Zhuang X. Sub-diffraction-limit imaging by stochastic optical reconstruction microscopy (STORM). Nat Methods. 2006;3:793–5.

Betzig E, Patterson GH, Sougrat R, et al. Imaging intracellular fluorescent proteins at nanometer resolution. Science. 2006;313:1642–5.

Willig KI, Keller J, Bossi M, Hell SW. STED microscopy resolves nanoparticle assemblies. New J Phys. 2006;8:106.

Cox S. Super-resolution imaging in live cells. Dev Biol. 2015;401:175–81.

Berger A. How does it work?: magnetic resonance imaging. BMJ. 2002;324:35.

Shokrollahi H. Contrast agents for MRI. Mater Sci Eng C Mater Biol Appl. 2013;33:4485–97.

Jin R, Lin B, Li D, Ai H. Superparamagnetic iron oxide nanoparticles for MR imaging and therapy: design considerations and clinical applications. Curr Opin Pharmacol. 2014;18:18–27.

Metz S, Beer AJ, Settles M, et al. Characterization of carotid artery plaques with USPIO-enhanced MRI: assessment of inflammation and vascularity as in vivo imaging biomarkers for plaque vulnerability. Int J Cardiovasc Imaging. 2011;27:901–12.

Qi C, Deng L, Li D, et al. Identifying vulnerable atherosclerotic plaque in rabbits using DMSA-USPIO enhanced magnetic resonance imaging to investigate the effect of atorvastatin. PLoS One. 2015;10:e0125677.

Bull E, Madani SY, Sheth R, et al. Stem cell tracking using iron oxide nanoparticles. Int J Nanomedicine. 2014;9:1641–53.

Basu S, Zhuang H, Torigian DA, et al. Functional imaging of inflammatory diseases using nuclear medicine techniques. Semin Nucl Med. 2009;39:124–45.

He Z-X, Shi R-F, Wu Y-J, et al. Direct imaging of exercise-induced myocardial ischemia with fluorine-18-labeled deoxyglucose and Tc-99m-sestamibi in coronary artery disease. Circulation. 2003;108:1208–13.

Ter-Pogossian MM, Phelps ME, Hoffman EJ, Mullani NA. A positron-emission transaxial tomograph for nuclear imaging (PETT). Radiology. 1975;114:89–98.

Nolting DD, Nickels ML, Guo N, Pham W. Molecular imaging probe development: a chemistry perspective. Am J Nucl Med Mol Imaging. 2012;2:273–306.

Vaidyanathan S, Patel CN, Scarsbrook AF, Chowdhury FU. FDG PET/CT in infection and inflammation-current and emerging clinical applications. Clin Radiol. 2015;70:787–800.

Hara T, Truelove J, Tawakol A, et al. 18F-fluorodeoxyglucose positron emission tomography/computed tomography enables the detection of recurrent same-site deep vein thrombosis by illuminating recently formed, neutrophil-rich thrombus. Circulation. 2014;130:1044–52.

Townsend DW, Cherry SR. Combining anatomy and function: the path to true image fusion. Eur Radiol. 2001;11:1968–74.

Dillman JR, Smith EA, Sanchez RJ, et al. Pediatric small bowel Crohn disease: correlation of US and MR enterography. Radiographics. 2015;35:835–48.

Gessner R, Dayton PA. Advances in molecular imaging with ultrasound. Mol Imaging. 2010;9:117–27.

Heidt T, Nahrendorf M. Multimodal iron oxide nanoparticles for hybrid biomedical imaging. NMR Biomed. 2013;26:756–65.

Nahrendorf M, Keliher E, Marinelli B, et al. Hybrid PET-optical imaging using targeted probes. Proc Natl Acad Sci U S A. 2010;107:7910–5.

Phillips E, Penate-Medina O, Zanzonico PB, et al. Clinical translation of an ultrasmall inorganic optical-PET imaging nanoparticle probe. Sci Transl Med. 2014;6:260ra149.

Lee WW, Marinelli B, van der Laan AM, et al. PET/MRI of inflammation in myocardial infarction. J Am Coll Cardiol. 2012;59:153–63.

Kim HS, Cho HR, Choi SH, et al. In vivo imaging of tumor transduced with bimodal lentiviral vector encoding human ferritin and green fluorescent protein on a 1.5T clinical magnetic resonance scanner. Cancer Res. 2010;70:7315–24.

Wang LV, Hu S. Photoacoustic tomography: in vivo imaging from organelles to organs. Science. 2012;335:1458–62.

Yang J-M, Favazza C, Chen R, et al. Simultaneous functional photoacoustic and ultrasonic endoscopy of internal organs in vivo. Nat Med. 2012;18:1297–302.

Zackrisson S, van de Ven SMWY, Gambhir SS. Light in and sound out: emerging translational strategies for photoacoustic imaging. Cancer Res. 2014;74:979–1004.

Razansky D, Harlaar NJ, Hillebrands JL, et al. Multispectral optoacoustic tomography of matrix metalloproteinase activity in vulnerable human carotid plaques. Mol Imaging Biol. 2012;14:277–85.

Copyright information

© 2019 Springer International Publishing AG

About this chapter

Cite this chapter

Craven, T.H., Potey, P.M.D., Dorward, D.A., Rossi, A.G. (2019). Imaging Inflammation. In: Parnham, M., Nijkamp, F., Rossi, A. (eds) Nijkamp and Parnham's Principles of Immunopharmacology. Springer, Cham. https://doi.org/10.1007/978-3-030-10811-3_18

DOI: https://doi.org/10.1007/978-3-030-10811-3_18

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-10809-0

Online ISBN: 978-3-030-10811-3

eBook Packages: Biomedical and Life Sciences (R0)